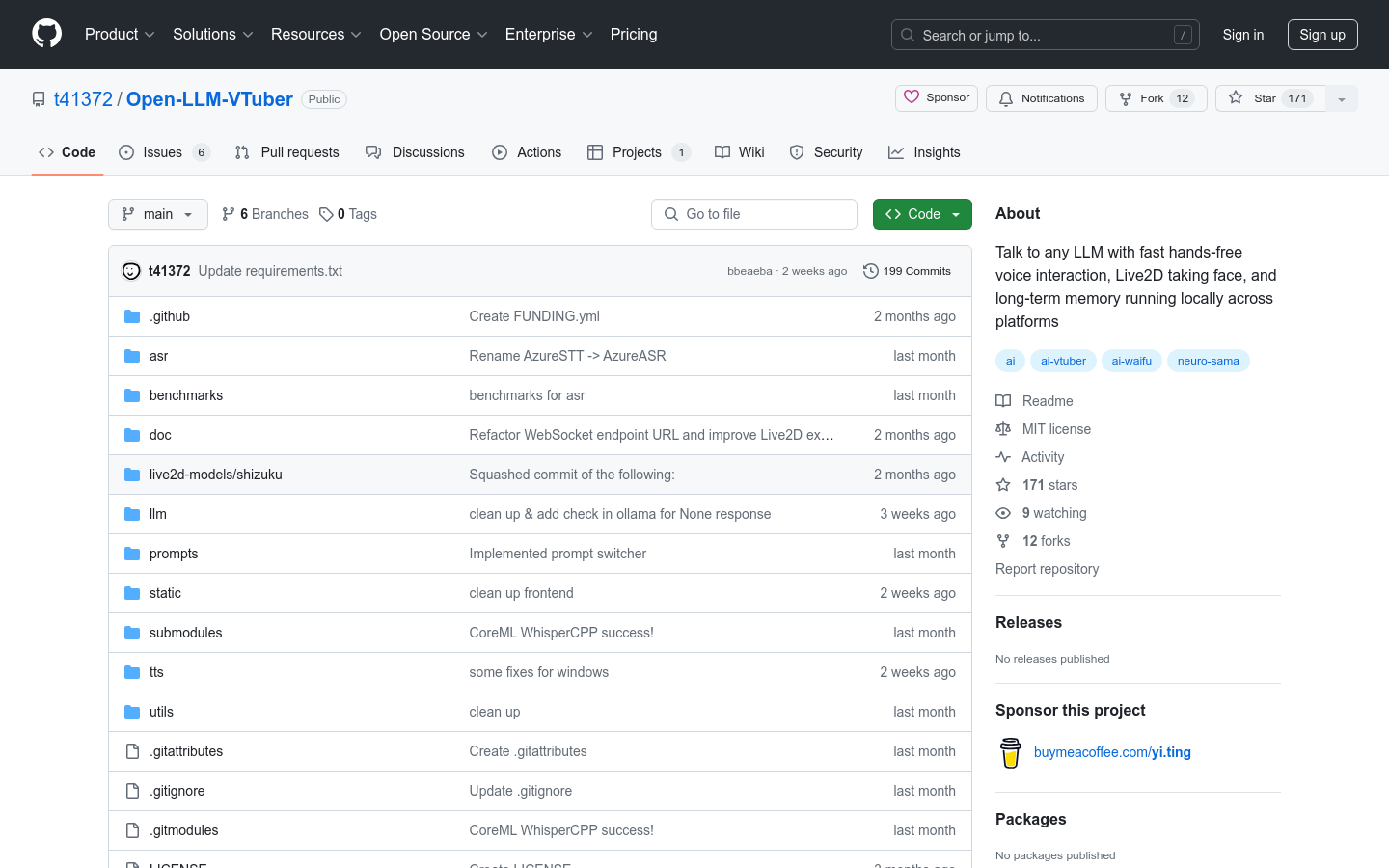

Open-LLM-VTuber

Overview

Open-LLM-VTuber is an open-source project for talking to large language models (LLMs) by voice. It pairs the conversation with a Live2D avatar whose facial expressions update in real time, and it supports long-term memory. The project runs on macOS, Windows, and Linux, and lets users choose among different speech recognition and speech synthesis backends as well as a customized long-term memory solution. It is particularly suited to developers and enthusiasts who want natural spoken conversations with an AI on any of these platforms.

Target Users

The target audience includes developers, tech enthusiasts, and AI researchers, who can use Open-LLM-VTuber to create their own virtual characters, conduct research in natural language processing and machine learning, or build applications for AI interaction.

Use Cases

Developers use Open-LLM-VTuber to create a virtual assistant capable of multi-language conversations.

Educational institutions leverage the project to teach students the fundamentals of natural language processing.

Tech enthusiasts use Open-LLM-VTuber to develop personalized AI chatbots.

Features

Supports voice interaction with any large language model backend compatible with the OpenAI API.

Customizable selection of speech recognition and text-to-speech providers.

Integrates MemGPT for long-term memory functionality, providing a continuous chat experience.

Supports Live2D models that automatically control facial expressions based on LLM responses.

Utilizes GPU acceleration on macOS to significantly reduce latency.

Supports multiple languages, including Chinese.

Allows complete offline operation, ensuring user privacy.
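
As a rough illustration of the first feature, the sketch below builds a chat request in the shape the OpenAI API uses (a `POST` to `/chat/completions` under the base URL, with `model` and `messages` in the JSON body). The base URL, model name, system prompt, and the helper name `build_chat_request` are placeholders chosen for this example, not values taken from the project. Nothing is sent unless you uncomment the `urlopen` call.

```python
import json
import urllib.request


def build_chat_request(base_url, model, user_text, api_key="not-needed"):
    """Build (but do not send) an OpenAI-style chat completion request.

    Hypothetical helper for illustration; not part of Open-LLM-VTuber.
    """
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a friendly virtual character."},
            {"role": "user", "content": user_text},
        ],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Local backends often ignore the key, but the header is part of the API shape.
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Placeholder endpoint and model name; substitute whatever your backend exposes.
request = build_chat_request("http://localhost:11434/v1", "example-model", "Hello!")

# To actually send it (requires a running OpenAI-compatible server):
# with urllib.request.urlopen(request) as response:
#     reply = json.load(response)["choices"][0]["message"]["content"]
```

Because only the base URL and model name change between backends, the same request shape should work against any server that implements this API, which is what makes the backend swappable.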

How to Use

Install the required dependencies, such as FFmpeg, and set up a Python virtual environment.

Clone the Open-LLM-VTuber code repository to your local machine.

Configure the conf.yaml file in the project as needed, selecting the desired speech recognition and speech synthesis backends.

Run server.py to start the WebSocket communication server.

Open the index.html file to launch the front-end interface.

Execute launch.py or main.py to start the back-end processing.

Interact with large language models using voice and observe the real-time responses of the Live2D model.
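
Before working through the steps above, a small preflight check can catch the most common setup gaps. The sketch below is one possible way to do that, assuming `conf.yaml` and `server.py` sit at the top level of the cloned repository (the page does not state their exact location). The function name `missing_prerequisites` is made up for this example.

```python
import shutil
from pathlib import Path


def missing_prerequisites(project_dir, required_files=("conf.yaml", "server.py")):
    """Return a list of human-readable problems; an empty list means ready.

    Hypothetical helper: the file names come from the steps above, but their
    location at the project root is an assumption.
    """
    problems = []
    # FFmpeg must be discoverable on PATH for audio processing.
    if shutil.which("ffmpeg") is None:
        problems.append("ffmpeg not found on PATH")
    root = Path(project_dir)
    for name in required_files:
        if not (root / name).is_file():
            problems.append(f"{name} not found in {root}")
    return problems


if __name__ == "__main__":
    # Run from inside the cloned repository.
    for problem in missing_prerequisites("."):
        print("Missing:", problem)
```

If the script prints nothing, FFmpeg is on the PATH and both files were found, so you can move on to starting the server and opening the front end.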
