VideoReTalking

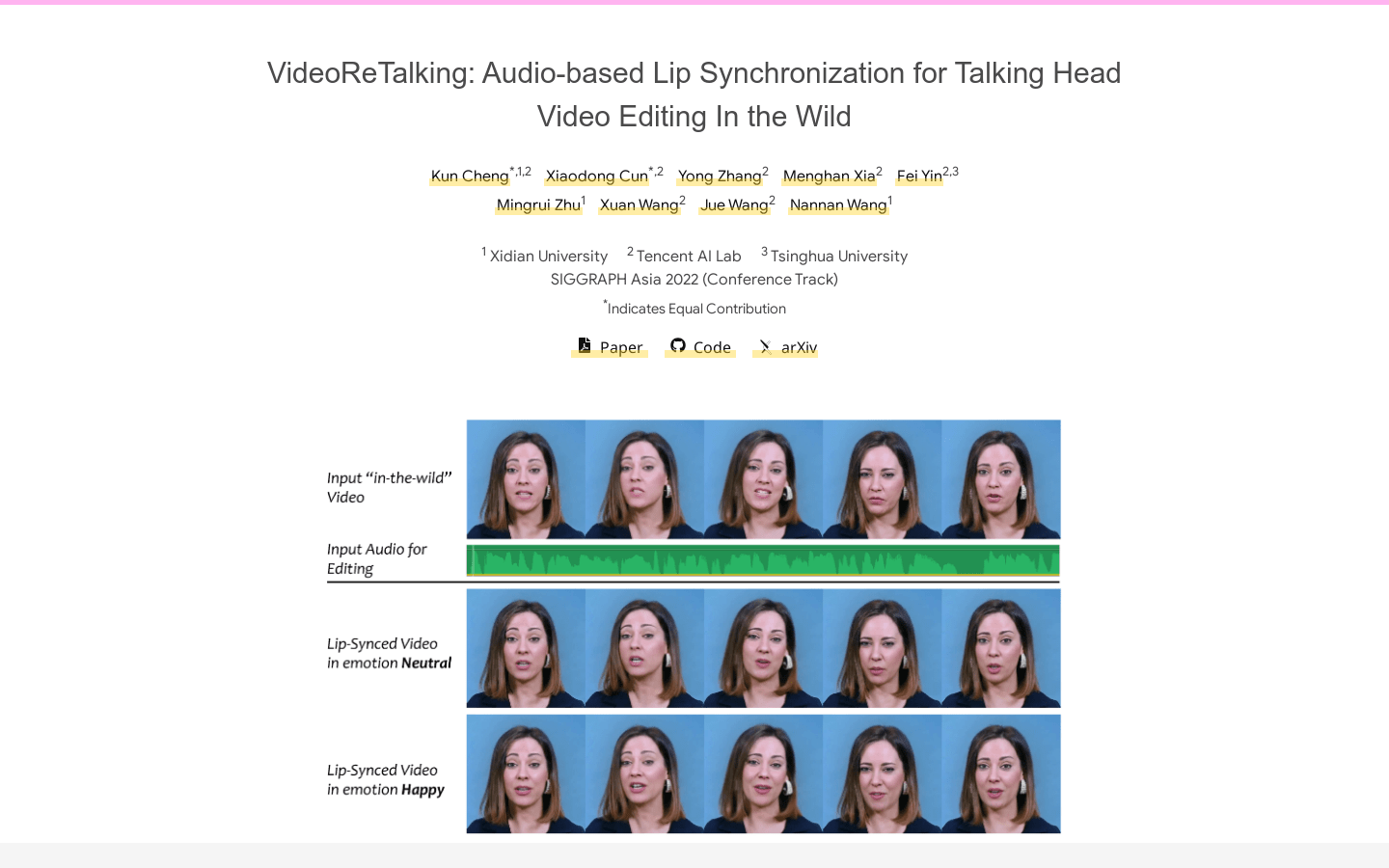

Overview:

VideoReTalking is a system that edits real-world talking head videos to produce high-quality, lip-synced output videos driven by input audio, even with varying emotions. The system breaks this goal down into three sequential tasks: (1) generating a face video with normalized expressions using an expression editing network; (2) audio-driven lip-sync; (3) face enhancement to improve photorealism. Given a talking head video, we first use an expression editing network to modify the expression in each frame according to a standardized expression template, resulting in a video with normalized expressions. This video is then fed into a lip-sync network together with the given audio to generate a lip-synced video. Finally, we use an identity-aware face enhancement network and post-processing to improve the photorealism of the synthesized face. All three steps use learning-based methods, and all modules run sequentially in a pipeline without any user intervention.
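The sequential structure described above can be illustrated with a minimal Python sketch. This is not VideoReTalking's actual code or API: the `Frame` class, the three stage functions, and `run_pipeline` are hypothetical placeholders that only record which stage touched each frame, standing in for the learned networks. The sketch shows only how three stages can be chained so that each consumes the previous stage's output with no manual step in between.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Frame:
    """Hypothetical stand-in for one video frame."""
    index: int
    history: List[str] = field(default_factory=list)  # stages applied so far


# Each stage below is a placeholder (assumption) for a learned network.
# It only tags the frame; a real stage would transform pixel data.

def normalize_expression(frames: List[Frame], audio: str) -> List[Frame]:
    # Stage 1: map every frame to a standardized expression template.
    return [Frame(f.index, f.history + ["expression_normalized"]) for f in frames]


def lip_sync(frames: List[Frame], audio: str) -> List[Frame]:
    # Stage 2: drive the mouth region from the given audio.
    return [Frame(f.index, f.history + [f"lip_synced:{audio}"]) for f in frames]


def enhance_face(frames: List[Frame], audio: str) -> List[Frame]:
    # Stage 3: identity-aware enhancement and post-processing.
    return [Frame(f.index, f.history + ["face_enhanced"]) for f in frames]


Stage = Callable[[List[Frame], str], List[Frame]]
PIPELINE: List[Stage] = [normalize_expression, lip_sync, enhance_face]


def run_pipeline(frames: List[Frame], audio: str) -> List[Frame]:
    """Run all stages in order; each stage consumes the previous output."""
    for stage in PIPELINE:
        frames = stage(frames, audio)
    return frames


if __name__ == "__main__":
    result = run_pipeline([Frame(0), Frame(1)], "speech.wav")
    for frame in result:
        print(frame.index, frame.history)
```

Keeping the stages in an ordered list makes the fixed order explicit: lip-sync always receives expression-normalized frames, and enhancement always runs last on the synthesized result.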

Target Users:

Suited to video editing work that requires audio-driven lip-sync, such as film, TV, and advertising production.

Use Cases

A film producer uses VideoReTalking to edit the dialogue of characters in a film, achieving high-quality lip-sync.

An advertising company uses VideoReTalking to create advertisements in which the actors' mouth movements closely match the audio.

A TV show producer uses VideoReTalking to edit the dialogue of characters in a TV show, achieving high-quality lip-sync.

Features

Audio-driven lip-sync

Facial enhancement

Expression editing

High-quality lip-sync video generation

No user intervention required
