MIDI

Overview

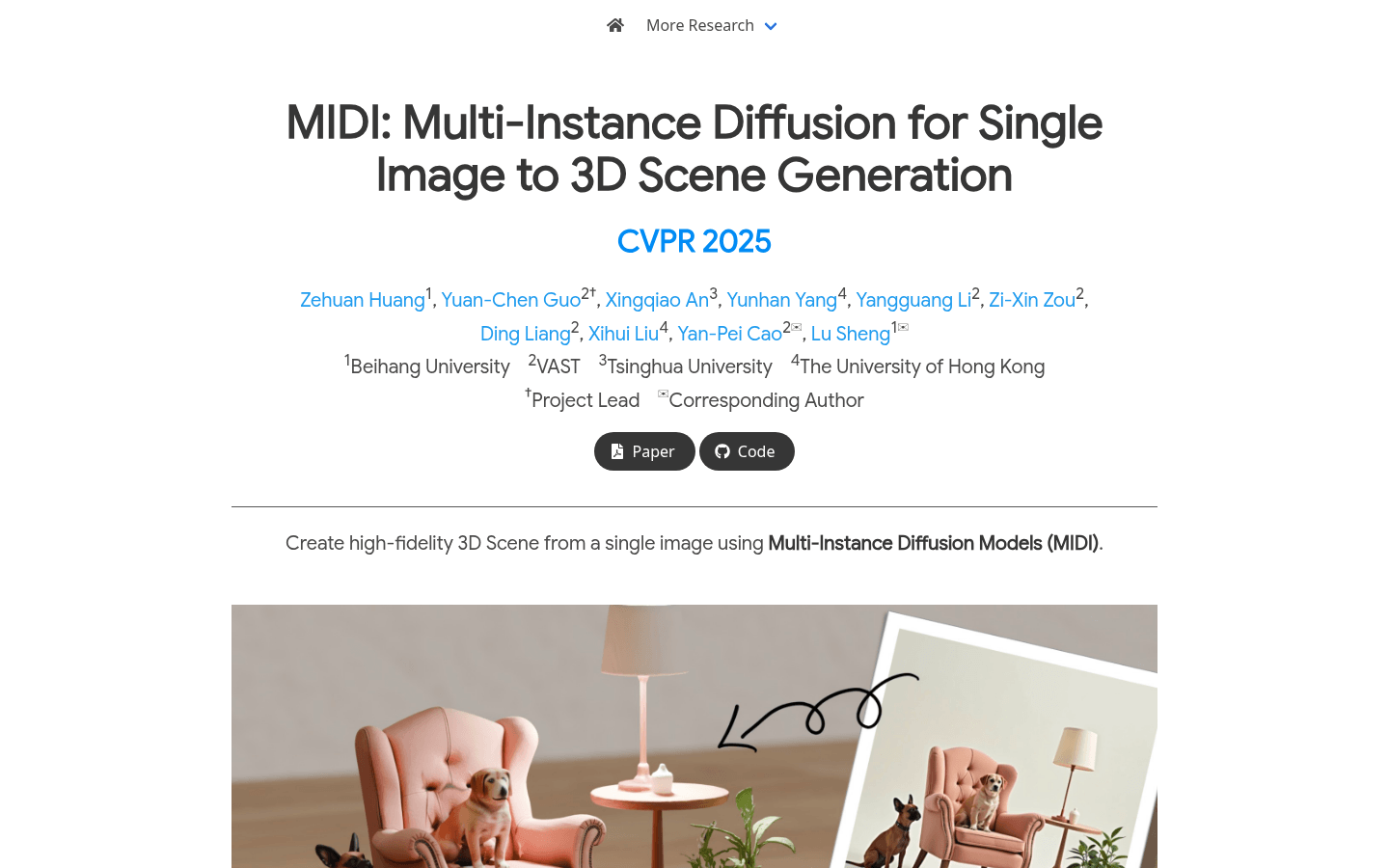

MIDI is an image-to-3D scene generation method that uses a multi-instance diffusion model to generate multiple 3D instances with accurate spatial relationships directly from a single image. Its core is a multi-instance attention mechanism, which captures inter-object interactions and spatial consistency during generation itself, without complex multi-step processing. MIDI handles synthetic data, real-world scene images, and stylized scene images produced by text-to-image diffusion models. Its main advantages are efficiency, high fidelity, and strong generalization.
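As a rough illustration of the multi-instance attention idea described above, the sketch below contrasts attention restricted to one instance with attention over the tokens of every instance in a scene. It is a toy, pure-Python version under stated assumptions: the function names (`attend`, `per_instance_attention`, `multi_instance_attention`) and the token layout are hypothetical, there are no learned projections, and it is not MIDI's actual implementation.

```python
import math

def softmax(xs):
    # Subtract the max for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attend(queries, keys, values):
    """Scaled dot-product attention over plain lists of vectors."""
    dim = len(queries[0])
    out = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in keys]
        weights = softmax(scores)
        out.append([
            sum(w * v[i] for w, v in zip(weights, values))
            for i in range(len(values[0]))
        ])
    return out

def per_instance_attention(instances):
    """Baseline: tokens only attend to tokens of the same instance."""
    return [attend(tokens, tokens, tokens) for tokens in instances]

def multi_instance_attention(instances):
    """Tokens of each instance attend to the tokens of all instances."""
    all_tokens = [t for tokens in instances for t in tokens]
    return [attend(tokens, all_tokens, all_tokens) for tokens in instances]

# Two hypothetical instances with two 2-D tokens each.
scene = [
    [[1.0, 0.0], [0.5, 0.5]],
    [[0.0, 1.0], [0.2, 0.8]],
]
isolated = per_instance_attention(scene)
joint = multi_instance_attention(scene)
```

In the joint version, the output for each token mixes in information from the other instance, which is the property that lets a model reason about how objects relate to each other rather than generating each one in isolation.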

Target Users

MIDI is aimed primarily at researchers and developers in computer vision, 3D modeling, and graphics, and at industry professionals who want to generate 3D scenes from a single image. It suits users who need efficient, high-quality 3D scene generation for academic research, content creation, virtual reality, or game development.

Use Cases

In academic research, researchers can use MIDI to generate 3D scenes for validating new algorithms or models.

In game development, developers can quickly generate 3D scenes from concept images to accelerate the construction of game worlds.

In virtual reality applications, MIDI can transform user-provided images into immersive 3D scenes, enhancing user experience.

Features

Generates multiple 3D instances from a single image, supporting direct scene composition.

Employs a multi-instance attention mechanism to capture inter-object interactions and spatial consistency.

Uses partial object images and global scene context as input to directly model object completion.

Supervises interactions between 3D instances with a limited amount of scene-level data, while using single-object data for regularization.

Supports various data types, including synthetic data, real-world scene data, and stylized scene images.

The texture of the generated 3D scene can be further optimized using MV-Adapter.

Efficient generation process, with a reported total processing time of about 40 seconds.

The model code is open-source, facilitating use and extension by researchers and developers.
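One way to picture the training-data mix listed above (limited scene-level data for inter-instance supervision, single-object data for regularization) is a sampler that draws each training example from one of two pools. The sketch below is purely illustrative: `sample_training_example`, the `object_prob` value of 0.3, and the toy dataset entries are assumptions, not values or code from the MIDI release.

```python
import random

def sample_training_example(scene_data, object_data, object_prob, rng):
    """Pick a single-object example with probability object_prob, else a scene.

    A single object is wrapped as a one-instance "scene" so both sources
    share the same shape: a list of instances.
    """
    if rng.random() < object_prob:
        return "object", [rng.choice(object_data)]
    return "scene", rng.choice(scene_data)

# Hypothetical toy datasets: each scene is a list of instance names.
scene_data = [["sofa", "table", "lamp"], ["bed", "nightstand"]]
object_data = ["chair", "vase", "bookshelf"]

rng = random.Random(0)  # seeded so repeated runs draw the same batch
batch = [
    sample_training_example(scene_data, object_data, 0.3, rng)
    for _ in range(8)
]
for source, instances in batch:
    print(source, instances)
```

Wrapping single objects as one-instance scenes means the same model code path can consume both kinds of example, which is one plausible way such regularization data could be fed in.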

How to Use

1. Visit the MIDI project page to learn about its features and capabilities.

2. Download and install the relevant code libraries and dependencies.

3. Prepare the input image, which can be synthetic data, real-world scene images, or stylized images.

4. Use the MIDI model to process the input image and generate multiple 3D instances.

5. Combine the generated 3D instances into a complete 3D scene.

6. If necessary, use MV-Adapter to further optimize scene textures.

7. Post-process or apply the generated 3D scene as needed.
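Steps 4 and 5 can be pictured as collecting per-instance meshes and merging them into one scene mesh. The sketch below assumes each instance is a hypothetical `(vertices, faces)` pair already expressed in a shared scene coordinate frame, in line with the claim that instances are generated with their spatial relationships in place. `merge_instances` is an illustrative helper, not part of the MIDI codebase.

```python
def merge_instances(instances):
    """Merge (vertices, faces) meshes into a single scene mesh.

    Vertices are concatenated; face indices are shifted by the number of
    vertices that came before, so each face still points at its own vertices.
    """
    scene_vertices = []
    scene_faces = []
    for vertices, faces in instances:
        offset = len(scene_vertices)
        scene_vertices.extend(vertices)
        scene_faces.extend(tuple(i + offset for i in face) for face in faces)
    return scene_vertices, scene_faces

# Two hypothetical single-triangle instances placed side by side.
triangle_a = ([(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)], [(0, 1, 2)])
triangle_b = ([(2.0, 0.0, 0.0), (3.0, 0.0, 0.0), (2.0, 1.0, 0.0)], [(0, 1, 2)])

scene_vertices, scene_faces = merge_instances([triangle_a, triangle_b])
print(len(scene_vertices), scene_faces)  # 6 [(0, 1, 2), (3, 4, 5)]
```

Because the instances are assumed to share one coordinate frame, no per-object alignment or pose estimation is needed at this stage; merging reduces to index bookkeeping.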

Featured AI Tools

MeshPad

MeshPad is a generative design tool for creating and editing 3D mesh models from sketch input. Simple sketch operations drive complex mesh generation and editing, giving users an intuitive and efficient 3D modeling workflow. The tool is based on a triangle-sequence mesh representation and uses a large Transformer model to implement mesh addition and deletion operations. A vertex alignment prediction strategy significantly reduces computational cost, so each edit takes only a few seconds. MeshPad surpasses existing sketch-conditioned mesh generation methods in mesh quality and was rated highly by users in perceptual evaluation. It is aimed primarily at designers, artists, and other users who need to do 3D modeling quickly and more intuitively.

3D modeling


SpatialLM

SpatialLM is a large language model designed for processing 3D point cloud data. It generates structured 3D scene understanding outputs, including semantic categories of building elements and objects. It can process point clouds from various sources, including monocular video sequences, RGBD images, and LiDAR sensors, without requiring specialized equipment. SpatialLM is useful for autonomous navigation and complex 3D scene analysis tasks, where it improves spatial reasoning.

3D modeling
