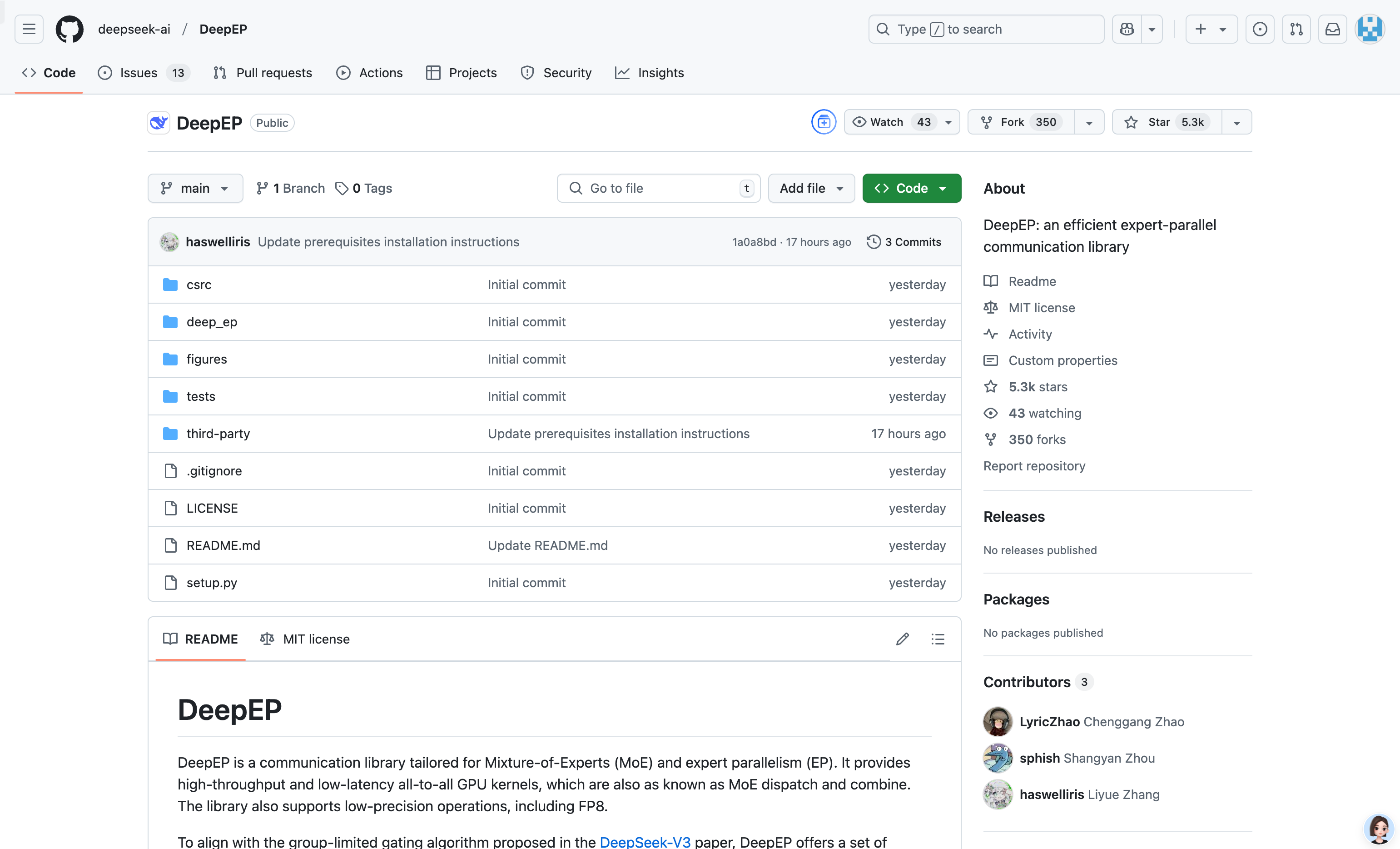

DeepEP

Overview:

DeepEP is a communication library designed for Mixture-of-Experts (MoE) models and expert parallelism (EP). It provides high-throughput, low-latency all-to-all GPU kernels (the MoE dispatch and combine operations) and supports low-precision operations such as FP8. It includes kernels optimized for asymmetric-domain bandwidth forwarding, which makes them suitable for training and for inference prefilling. It also supports controlling the number of Streaming Multiprocessors (SMs) the kernels use, and introduces a hook-based communication-computation overlap method that does not occupy any SM resources. Its implementation differs slightly from what the DeepSeek-V3 paper describes; the kernels target large-scale distributed training and inference.

Target Users:

DeepEP is aimed at researchers, engineers, and enterprise users who need to run Mixture-of-Experts (MoE) models efficiently in large-scale distributed environments. It is particularly well suited to deep learning projects that need faster communication, lower latency, and better use of compute resources, whether the workload is training large language models or serving inference.

Use Cases

In large-scale distributed training, use DeepEP's high-throughput kernels to accelerate the MoE dispatch and combine operations and improve training efficiency.

During inference, leverage DeepEP's low-latency kernels for rapid decoding, suitable for applications with stringent real-time requirements.

With the hook-based communication-computation overlap method, inference workloads can hide communication behind computation without occupying any GPU SMs.

Features

Provides high-throughput and low-latency all-to-all GPU kernels for the MoE dispatch and combine operations.
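To make "dispatch" and "combine" concrete, here is a minimal single-process sketch in plain Python. It only illustrates the data movement pattern: dispatch sends each token to the experts chosen for it, and combine sums the weighted expert outputs back per token. The names `toy_dispatch`, `toy_combine`, and `run_toy_experts` are hypothetical and are not DeepEP's API; the real kernels perform this exchange across GPUs.

```python
from collections import defaultdict


def toy_dispatch(tokens, topk_idx, topk_weights):
    """Group (token_id, value, weight) entries by destination expert."""
    per_expert = defaultdict(list)
    for token_id, value in enumerate(tokens):
        for expert, weight in zip(topk_idx[token_id], topk_weights[token_id]):
            per_expert[expert].append((token_id, value, weight))
    return per_expert


def run_toy_experts(per_expert):
    """Hypothetical experts: expert e multiplies its input by (e + 1)."""
    return {
        expert: [(tid, value * (expert + 1), weight) for tid, value, weight in items]
        for expert, items in per_expert.items()
    }


def toy_combine(expert_outputs, num_tokens):
    """Sum the weighted expert outputs back into one value per token."""
    combined = [0.0] * num_tokens
    for items in expert_outputs.values():
        for token_id, value, weight in items:
            combined[token_id] += weight * value
    return combined


tokens = [1.0, 2.0, 3.0]
topk_idx = [[0, 1], [1, 2], [0, 2]]          # two experts selected per token
topk_weights = [[0.5, 0.5], [0.25, 0.75], [1.0, 0.0]]

per_expert = toy_dispatch(tokens, topk_idx, topk_weights)
combined = toy_combine(run_toy_experts(per_expert), len(tokens))
print(combined)  # [1.5, 5.5, 3.0]
```

In an expert-parallel deployment each expert lives on a different GPU, so both steps become all-to-all exchanges, which is the part a communication library accelerates.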

Optimized for asymmetric-domain bandwidth forwarding, such as forwarding data from the NVLink domain to the RDMA domain.
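One way to picture forwarding across two domains is the dispatch scheme described in the DeepSeek-V3 paper: a token that must cross nodes travels over RDMA to the GPU with the same local index on the destination node, and is then forwarded over NVLink to its final GPU. The sketch below only computes that path. The function name, the rank layout, and the eight-GPU node size are illustrative assumptions, and whether DeepEP's kernels route exactly this way is also an assumption.

```python
GPUS_PER_NODE = 8  # assumption: eight GPUs per node, ranks numbered node by node


def plan_hops(src_rank, dst_rank, gpus_per_node=GPUS_PER_NODE):
    """Return the list of (link, from_rank, to_rank) hops for one token."""
    src_node, src_local = divmod(src_rank, gpus_per_node)
    dst_node, _dst_local = divmod(dst_rank, gpus_per_node)

    if src_rank == dst_rank:
        return []  # already in place
    if src_node == dst_node:
        return [("nvlink", src_rank, dst_rank)]  # stays inside one node

    # Cross-node: RDMA to the same local index on the destination node,
    # then NVLink inside that node if a second hop is still needed.
    relay = dst_node * gpus_per_node + src_local
    hops = [("rdma", src_rank, relay)]
    if relay != dst_rank:
        hops.append(("nvlink", relay, dst_rank))
    return hops


print(plan_hops(3, 5))    # same node
print(plan_hops(3, 13))   # cross node, two hops
```

Sending each token across the slower inter-node link at most once per destination node, and fanning out over the faster intra-node link, is the motivation for treating the two domains differently.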

Supports low-latency kernels using pure RDMA communication, ideal for latency-sensitive inference decoding tasks.

Provides a hook-based communication-computation overlap method, consuming no GPU SM resources and improving resource utilization.
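The control flow of a hook-based overlap can be sketched as follows: starting the transfer returns immediately with a hook, the caller does other work, and calling the hook later finishes the receive. This is an analogy only. A Python thread stands in for whatever moves the data in the background, and `start_transfer` is a hypothetical name, not a DeepEP function.

```python
import threading


def start_transfer(payload):
    """Begin a background 'transfer' and return a hook that completes it."""
    received = []

    def worker():
        # Stand-in for data arriving while the caller is busy elsewhere.
        received.extend(payload)

    thread = threading.Thread(target=worker)
    thread.start()

    def hook():
        # Calling the hook waits until the data has fully arrived.
        thread.join()
        return received

    return hook


hook = start_transfer([1, 2, 3])

# Computation that overlaps with the transfer.
local_result = sum(i * i for i in range(1000))

# Only now do we need the data, so we complete the receive.
data = hook()
print(data, local_result)
```

The design point is that the caller decides when to pay the wait, so independent computation can be placed between issuing the communication and consuming its result.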

Supports various network configurations, including InfiniBand and RDMA over Converged Ethernet (RoCE).

How to Use

1. Ensure your system meets the hardware requirements, including Hopper architecture GPUs and RDMA-capable network devices.

2. Install dependencies, including Python 3.8 or higher, CUDA 12.3 or higher, and PyTorch 2.1 or higher.

3. Download and install DeepEP's dependency library NVSHMEM, following the official installation guide.

4. Install DeepEP using the command `python setup.py install`.

5. Import the `deep_ep` module into your project and call its provided functions, such as `dispatch` and `combine`, as needed.
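Before building, it can help to check the prerequisites from steps 1 and 2 in a script. The sketch below is a hypothetical helper, not part of DeepEP: it compares the Python, PyTorch, and CUDA versions against the minimums listed above, and, if a GPU is visible, checks for compute capability 9.x, which corresponds to Hopper. It does not verify NVSHMEM or the RDMA network.

```python
import importlib.util
import sys

MIN_PYTHON = (3, 8)
MIN_TORCH = (2, 1)
MIN_CUDA = (12, 3)


def parse_version(text):
    """Turn a version string such as '2.1.0+cu121' into (2, 1)."""
    parts = []
    for piece in text.split("+")[0].split(".")[:2]:
        digits = "".join(ch for ch in piece if ch.isdigit())
        parts.append(int(digits) if digits else 0)
    return tuple(parts)


def check_environment():
    """Return a dict mapping each requirement to True or False."""
    report = {"python": sys.version_info[:2] >= MIN_PYTHON}

    if importlib.util.find_spec("torch") is None:
        report["torch"] = False
        return report

    import torch

    report["torch"] = parse_version(torch.__version__) >= MIN_TORCH
    cuda = torch.version.cuda  # None on CPU-only builds
    report["cuda"] = cuda is not None and parse_version(cuda) >= MIN_CUDA
    if torch.cuda.is_available():
        major, _minor = torch.cuda.get_device_capability(0)
        report["hopper"] = major == 9  # Hopper GPUs report capability 9.x
    # True once the deep_ep module from step 4 is importable.
    report["deep_ep"] = importlib.util.find_spec("deep_ep") is not None
    return report


print(check_environment())
```

A `False` entry points at the step to revisit; a missing `hopper` key means no CUDA device was visible to PyTorch.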
