Wan2.1 T2V 14B

Overview

Wan2.1-T2V-14B is a text-to-video generation model built on a diffusion transformer architecture, combined with a novel spatiotemporal variational autoencoder (VAE) and trained on large-scale data. It generates high-quality video at multiple resolutions, accepts both Chinese and English prompts, and, according to its developers, outperforms existing open-source and commercial models in both quality and efficiency. It suits scenarios that require efficient video generation, such as content creation, advertising production, and video editing. The model weights are freely available on Hugging Face to promote the development and application of video generation technology.

Target Users

This model is designed for creators, advertisers, video editors, and researchers who need to generate high-quality video content efficiently. It turns text or images into videos quickly, saving time and cost, and its support for both Chinese and English broadens its global applicability.

Use Cases

Generate a 5-second 480P video based on a given text description.

Transform a static image into a video with dynamic effects.

Generate video content containing Chinese or English text based on text prompts.
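The "5-second" figure follows from frame count and frame rate. The Python sketch below does that arithmetic, assuming (this page does not say so) that Wan2.1 renders at 16 fps, defaults to 81 frames, and requires frame counts of the form 4n + 1. The helper names are hypothetical.

```python
import math

# Assumptions (not stated on this page): clips play at 16 fps, the default
# frame count is 81, and valid frame counts have the form 4n + 1.
ASSUMED_FPS = 16
ASSUMED_DEFAULT_FRAMES = 81


def is_valid_frame_count(frames: int) -> bool:
    """Return True if `frames` has the assumed 4n + 1 form."""
    return frames >= 1 and (frames - 1) % 4 == 0


def clip_duration_seconds(frames: int, fps: int = ASSUMED_FPS) -> float:
    """Duration in seconds of a clip with `frames` frames played at `fps`."""
    if not is_valid_frame_count(frames):
        raise ValueError(f"frame count {frames} is not of the form 4n + 1")
    return frames / fps


def frames_for_duration(seconds: float, fps: int = ASSUMED_FPS) -> int:
    """Smallest 4n + 1 frame count that covers at least `seconds` of video."""
    needed = max(1, math.ceil(seconds * fps))
    # Round up to the next value of the form 4n + 1.
    return needed + (-(needed - 1)) % 4
```

Under these assumptions, `frames_for_duration(5)` returns 81, and `clip_duration_seconds(81)` gives 5.0625 seconds, which is why a default clip is described as roughly five seconds long.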

Features

Supports various video generation tasks, including text-to-video and image-to-video.

Supports video generation at 480P and 720P resolutions.

Its VAE provides strong spatiotemporal compression and can efficiently encode and decode 1080P video.

Supports Chinese and English text input, expanding application scenarios.

Provides single-GPU and multi-GPU inference code to suit different hardware configurations.
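To make "spatiotemporal compression" concrete, the following Python sketch computes the latent tensor shape and the transformer's sequence length for a given video size. The compression factors (4x in time, 8x in height and width), the 16 latent channels, the causal handling of the first frame, and the 1x2x2 transformer patch size are assumptions about the Wan2.1-T2V-14B configuration rather than facts stated on this page; `latent_info` is a hypothetical helper.

```python
from dataclasses import dataclass

# Assumed Wan2.1-T2V-14B settings (not stated on this page):
VAE_STRIDE = (4, 8, 8)  # (time, height, width) compression of the VAE
PATCH_SIZE = (1, 2, 2)  # (time, height, width) patch size of the transformer
LATENT_CHANNELS = 16


@dataclass(frozen=True)
class LatentInfo:
    shape: tuple[int, int, int, int]  # (channels, frames, height, width)
    num_tokens: int                   # sequence length seen by the transformer


def latent_info(width: int, height: int, frames: int) -> LatentInfo:
    """Latent shape and token count for a width x height x frames video."""
    st, sh, sw = VAE_STRIDE
    pt, ph, pw = PATCH_SIZE
    if (frames - 1) % st or height % (sh * ph) or width % (sw * pw):
        raise ValueError("video size is not divisible by the assumed strides")
    # Assumed causal temporal compression: the first frame is encoded on its
    # own, and each later group of `st` frames becomes one latent frame.
    lat_t = (frames - 1) // st + 1
    lat_h, lat_w = height // sh, width // sw
    # Each transformer token covers one pt x ph x pw patch of the latent.
    tokens = (lat_t // pt) * (lat_h // ph) * (lat_w // pw)
    return LatentInfo((LATENT_CHANNELS, lat_t, lat_h, lat_w), tokens)
```

With these assumptions, an 81-frame 1280x720 clip maps to a latent of shape (16, 21, 90, 160) and 75,600 transformer tokens, while 832x480 gives 32,760 tokens, one reason 480P generation is cheaper than 720P.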

How to Use

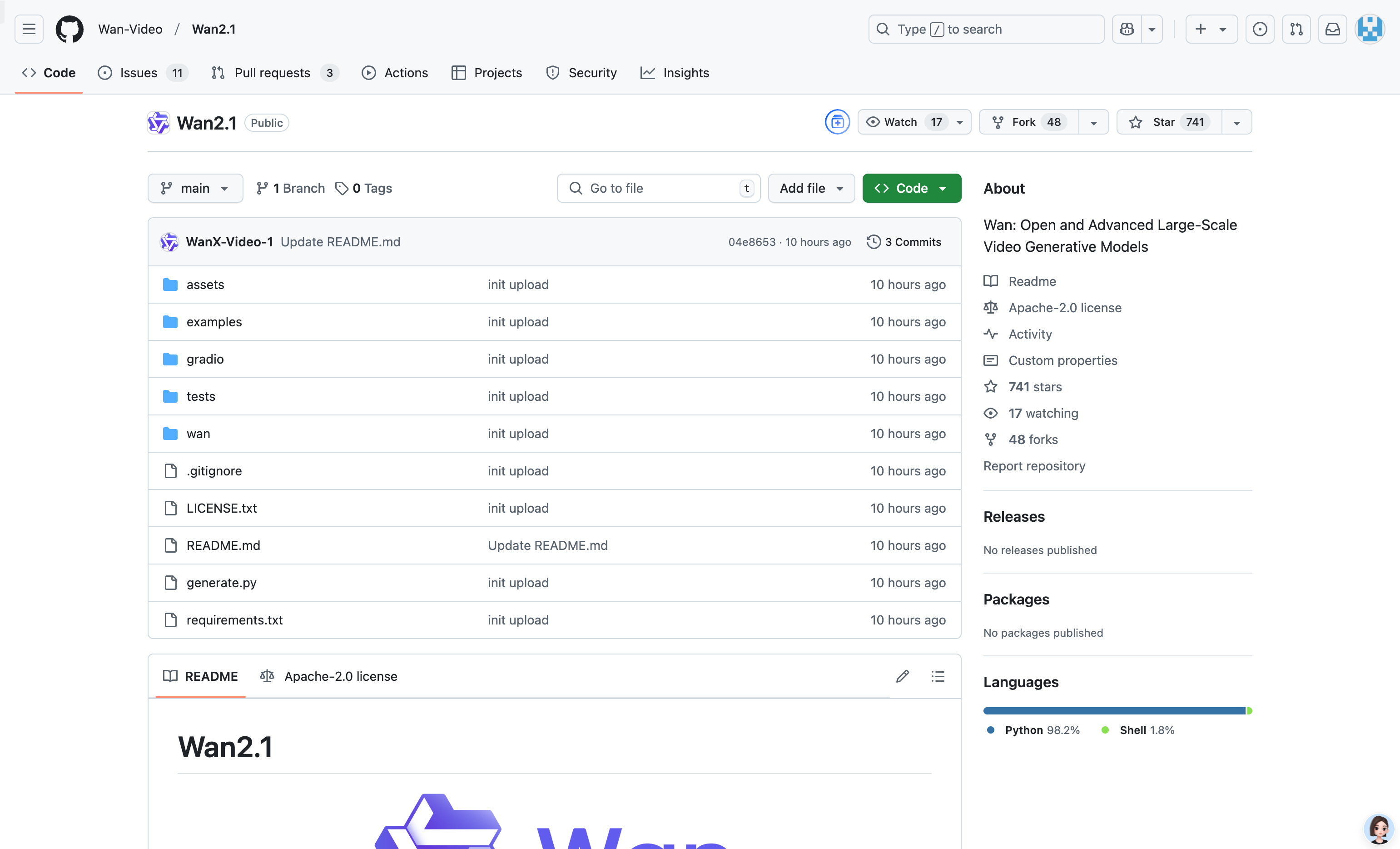

1. Clone the project repository: `git clone https://github.com/Wan-Video/Wan2.1.git`

2. Enter the repository and install dependencies: `cd Wan2.1 && pip install -r requirements.txt`

3. Download model weights: `huggingface-cli download Wan-AI/Wan2.1-T2V-14B --local-dir ./Wan2.1-T2V-14B`

4. Run text-to-video generation: `python generate.py --task t2v-14B --size 1280*720 --ckpt_dir ./Wan2.1-T2V-14B --prompt 'Text description'`

5. Adjust parameters as needed, such as the resolution (`--size`, for example `832*480` for 480P) and the prompt text (`--prompt`).
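Steps 1 to 4 can also be scripted. The minimal Python sketch below builds each command from the steps above as an argument list and runs it with `subprocess`; the `SUPPORTED_SIZES` set and the helper names are illustrative assumptions, not part of the Wan2.1 repository.

```python
import subprocess
import sys
from pathlib import Path

REPO_URL = "https://github.com/Wan-Video/Wan2.1.git"
MODEL_ID = "Wan-AI/Wan2.1-T2V-14B"
# Assumption: resolutions accepted by --task t2v-14B, written as width*height.
SUPPORTED_SIZES = {"1280*720", "720*1280", "832*480", "480*832"}


def build_commands(prompt: str, size: str = "1280*720",
                   ckpt_dir: str = "./Wan2.1-T2V-14B") -> list[list[str]]:
    """Return the argument lists for steps 1-4, in order."""
    if size not in SUPPORTED_SIZES:
        raise ValueError(f"unsupported size {size!r}")
    return [
        ["git", "clone", REPO_URL],
        [sys.executable, "-m", "pip", "install", "-r", "requirements.txt"],
        ["huggingface-cli", "download", MODEL_ID, "--local-dir", ckpt_dir],
        [sys.executable, "generate.py", "--task", "t2v-14B", "--size", size,
         "--ckpt_dir", ckpt_dir, "--prompt", prompt],
    ]


def run_all(prompt: str, size: str = "1280*720", workdir: str = ".") -> None:
    """Clone into `workdir`, then run the remaining steps inside the repo."""
    repo_dir = Path(workdir) / "Wan2.1"
    clone, *rest = build_commands(prompt, size)  # validates size before cloning
    subprocess.run(clone, cwd=workdir, check=True)
    for cmd in rest:
        # check=True stops at the first failing step.
        subprocess.run(cmd, cwd=repo_dir, check=True)
```

Passing argument lists instead of a shell string means the `*` in `1280*720` is never glob-expanded and prompts containing quotes need no escaping. Calling `run_all("A cat surfing at sunset")` would perform every step, including downloading the 14B weights, so it needs network access and a GPU large enough for the model.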
