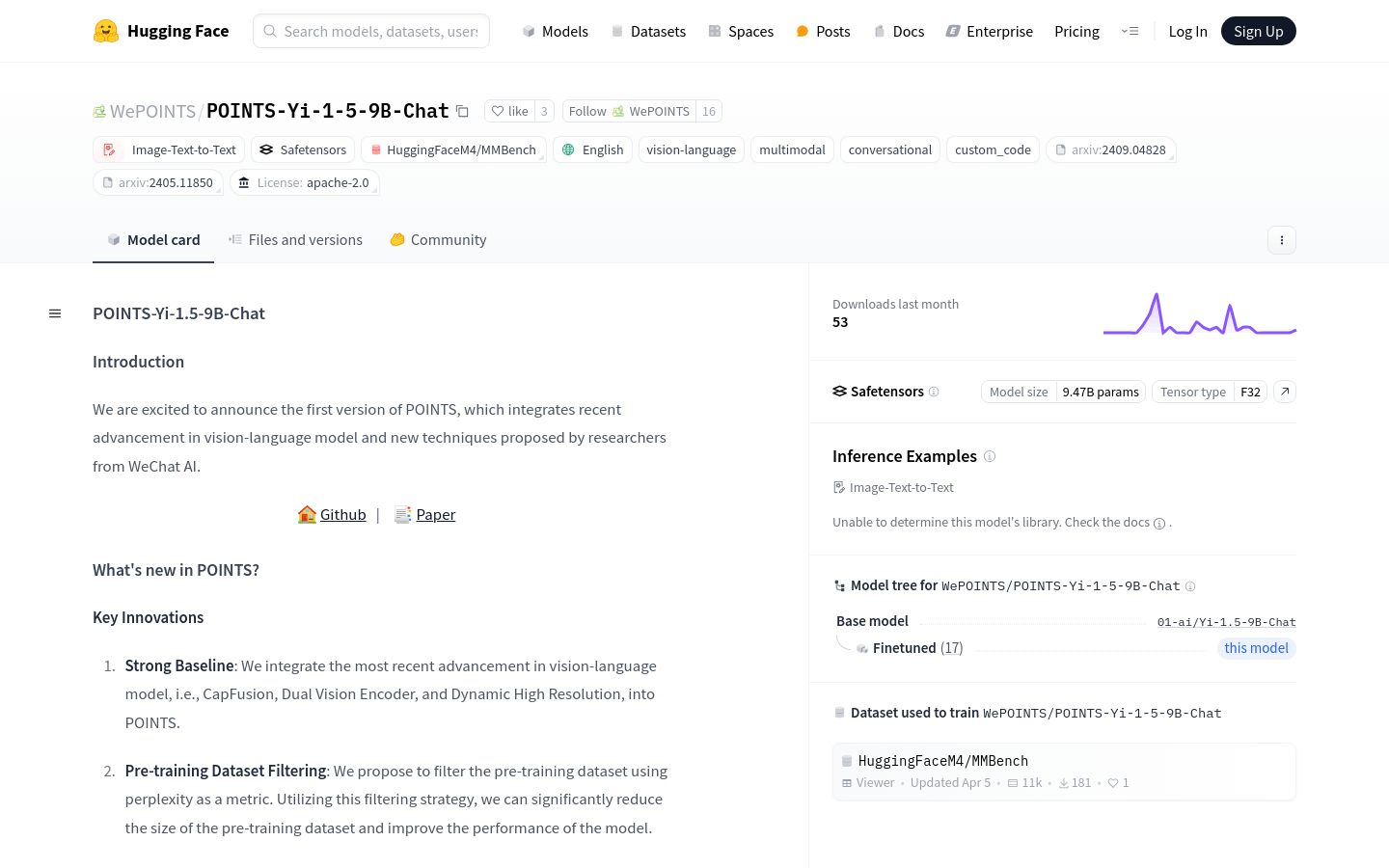

POINTS-Yi-1.5-9B-Chat

Overview

POINTS-Yi-1.5-9B-Chat is a visual language model that combines recent visual language model techniques with new methods introduced by WeChat AI. Its two main contributions are perplexity-based filtering of the pre-training dataset and Model Soup merging of fine-tuned models, which together reduce the dataset size substantially while improving model performance. The model performs strongly on multiple benchmarks.

Target Users

The target audience is researchers, developers, and enterprises that train or apply visual language models. The model's techniques and optimization strategies aim to improve performance, reduce computational resource consumption, and shorten the research and development cycle.

Use Cases

In an image captioning task, use POINTS-Yi-1.5-9B-Chat to generate detailed descriptions of images.

In a visual question-answering task, use the model to answer questions about images.

In a visual instruction-following task, the model responds according to the image and instruction that the user provides.

Features

Integrates the latest visual language model technologies, such as CapFusion, Dual Vision Encoder, and Dynamic High Resolution.

Filters the pre-training dataset using perplexity as a metric, reducing dataset size and improving model performance.

Employs Model Soup technology to consolidate models fine-tuned on various visual instructions, further enhancing performance.

Performs strongly on multiple benchmarks, including MMBench-dev-en, MathVista, and HallusionBench.

Supports Image-Text-to-Text multimodal interactions, suitable for scenarios requiring a combination of visual and linguistic elements.

Provides detailed usage examples and code to facilitate quick onboarding and integration for developers.
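The perplexity filtering and Model Soup features listed above can be illustrated with a minimal, dependency-free sketch. It is not the authors' implementation. It assumes that the samples kept are those with the lowest perplexity, that each sample already has per-token log-probabilities from some reference language model, and that the soup is a uniform average of parameters (other soup variants exist, and the source does not say which one is used). The names `keep_lowest_perplexity`, `keep_fraction`, and `uniform_soup` are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def perplexity(token_log_probs: Sequence[float]) -> float:
    """Perplexity is exp of the negative mean per-token log-probability (natural log)."""
    return math.exp(-sum(token_log_probs) / len(token_log_probs))


def keep_lowest_perplexity(
    samples: Dict[str, Sequence[float]], keep_fraction: float
) -> List[str]:
    """Return the ids of the keep_fraction of samples with the lowest perplexity.

    ASSUMPTION: low perplexity is treated as the "keep" criterion, and
    keep_fraction is an illustrative knob, not a value from the source.
    """
    ranked = sorted(samples, key=lambda sample_id: perplexity(samples[sample_id]))
    n_keep = max(1, int(len(ranked) * keep_fraction))
    return ranked[:n_keep]


def uniform_soup(
    state_dicts: Sequence[Dict[str, List[float]]]
) -> Dict[str, List[float]]:
    """Average matching parameters element-wise across fine-tuned checkpoints.

    ASSUMPTION: every checkpoint has the same parameter names and shapes;
    parameters are plain lists of floats here to keep the sketch self-contained.
    """
    n = len(state_dicts)
    return {
        name: [sum(values) / n for values in zip(*(sd[name] for sd in state_dicts))]
        for name in state_dicts[0]
    }
```

In practice the log-probabilities would come from scoring each pre-training sample with a language model, and the averaged values would be tensors from real checkpoints rather than Python lists.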

How to Use

1. Install the necessary libraries, such as transformers, Pillow (imported as PIL), and torch.

2. Import AutoModelForCausalLM, AutoTokenizer, and CLIPImageProcessor from transformers.

3. Prepare image data, which can be sourced from online images or local files.

4. Load the model and tokenizer, specifying the model path as 'WePOINTS/POINTS-Yi-1-5-9B-Chat'.

5. Configure generation parameters such as maximum new tokens, temperature, top_p, and beam count.

6. Use the chat method of the model, passing in parameters such as image, prompt, tokenizer, and image processor.

7. Collect the model output and print the results.
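The steps above can be sketched roughly as follows. This is a hedged outline rather than the official example. The `chat` method comes from the model's own remote code, so its exact signature is an assumption here (the image, prompt, tokenizer, and image processor are passed in the order step 6 lists them, with a `generation_config` keyword). The generation values, the default prompt, and the helper names `build_generation_config` and `describe_image` are illustrative. A CUDA GPU is assumed.

```python
from typing import Any, Dict

MODEL_PATH = "WePOINTS/POINTS-Yi-1-5-9B-Chat"  # model path from step 4


def build_generation_config(
    max_new_tokens: int = 1024,
    temperature: float = 0.0,
    top_p: float = 0.0,
    num_beams: int = 1,
) -> Dict[str, Any]:
    """Step 5: collect generation parameters (default values are illustrative)."""
    return {
        "max_new_tokens": max_new_tokens,
        "temperature": temperature,
        "top_p": top_p,
        "num_beams": num_beams,
    }


def describe_image(
    image_path: str, prompt: str = "Please describe the image in detail."
) -> str:
    """Steps 2 to 7 for a local image file; returns the model's text output."""
    # Heavy imports are local so this module can be imported without a GPU stack.
    import torch
    from PIL import Image
    from transformers import AutoModelForCausalLM, AutoTokenizer, CLIPImageProcessor

    # Step 3: prepare the image (a local file here; an online image also works).
    image = Image.open(image_path).convert("RGB")

    # Step 4: load the tokenizer, model, and image processor.
    tokenizer = AutoTokenizer.from_pretrained(MODEL_PATH, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_PATH, trust_remote_code=True, torch_dtype=torch.bfloat16
    ).to("cuda")
    image_processor = CLIPImageProcessor.from_pretrained(MODEL_PATH)

    # Step 6: ASSUMPTION: argument order and keyword name follow the step list,
    # not a documented signature; check the model card before relying on this.
    return model.chat(
        image,
        prompt,
        tokenizer,
        image_processor,
        generation_config=build_generation_config(),
    )
```

Calling `print(describe_image("photo.jpg"))` would then cover step 7, where `photo.jpg` is a placeholder path.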
