Qwen2.5 Coder 32B Instruct AWQ

Overview

Qwen2.5-Coder is a series of large language models optimized for code, released in six mainstream sizes (0.5, 1.5, 3, 7, 14, and 32 billion parameters) to meet the varied needs of developers. Built on the Qwen2.5 backbone, the series scales its training data to 5.5 trillion tokens, including source code, text-code grounding data, and synthetic data, and shows significant improvements in code generation, code reasoning, and debugging. The 32B model is among the most advanced open-source code LLMs, with coding capabilities reported to be comparable to GPT-4o. Qwen2.5-Coder also offers a more comprehensive foundation for real-world applications such as code agents.

Target Users

The target audience for Qwen2.5-Coder-32B-Instruct-AWQ is developers and programming enthusiasts, particularly professionals who work with large codebases and long documents. Its code generation, reasoning, and debugging capabilities help improve development efficiency and code quality, and its long-context support makes it well suited to large projects.

Use Cases

Developers use Qwen2.5-Coder to generate the code for a quick sort algorithm.

Software engineers leverage the model to fix existing errors in their code.

Researchers employ the model for large-scale code analysis and studies.

Features

Code Generation: Significantly improved code generation, with coding proficiency reported to match GPT-4o.

Code Reasoning: Improved code understanding, helping developers comprehend and optimize their code.

Code Debugging: Aids developers in identifying and fixing errors in their code.

Long-Context Support: Handles inputs of up to 128K tokens.

AWQ Quantization: Uses AWQ 4-bit weight quantization to reduce memory use and improve inference efficiency.

Model Architecture: 64 layers with grouped-query attention, using 40 query heads and 8 KV heads.

Open Source: Available as an open-source model for developers to use and contribute freely.

High Performance: Exhibits high performance in processing long text and code generation tasks.
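
As a rough illustration of what the 4-bit quantization and the 40/8 head split mean in practice, the back-of-envelope sketch below estimates weight storage and the number of query heads that share each KV head. It assumes a round 32 billion parameters and counts raw weights only; quantization scales, any layers left unquantized, activations, and the KV cache are ignored, so real memory use will be higher. The helper name `weight_memory_gb` is hypothetical.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate storage for the raw weights alone, in decimal gigabytes."""
    return num_params * bits_per_weight / 8 / 1e9


# Assumption: a round 32 billion parameters, as quoted in the overview.
PARAMS = 32e9
QUERY_HEADS = 40
KV_HEADS = 8

fp16_gb = weight_memory_gb(PARAMS, 16)  # 16-bit baseline
awq_gb = weight_memory_gb(PARAMS, 4)    # 4-bit AWQ weights

# With fewer KV heads than query heads, several query heads share one KV head.
queries_per_kv_head = QUERY_HEADS // KV_HEADS

print(f"FP16 weights: ~{fp16_gb:.0f} GB")
print(f"4-bit weights: ~{awq_gb:.0f} GB")
print(f"Query heads per KV head: {queries_per_kv_head}")
```

Under these assumptions the 4-bit weights take roughly a quarter of the space of a 16-bit copy, and the KV cache holds 8 heads per layer rather than 40.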

How to Use

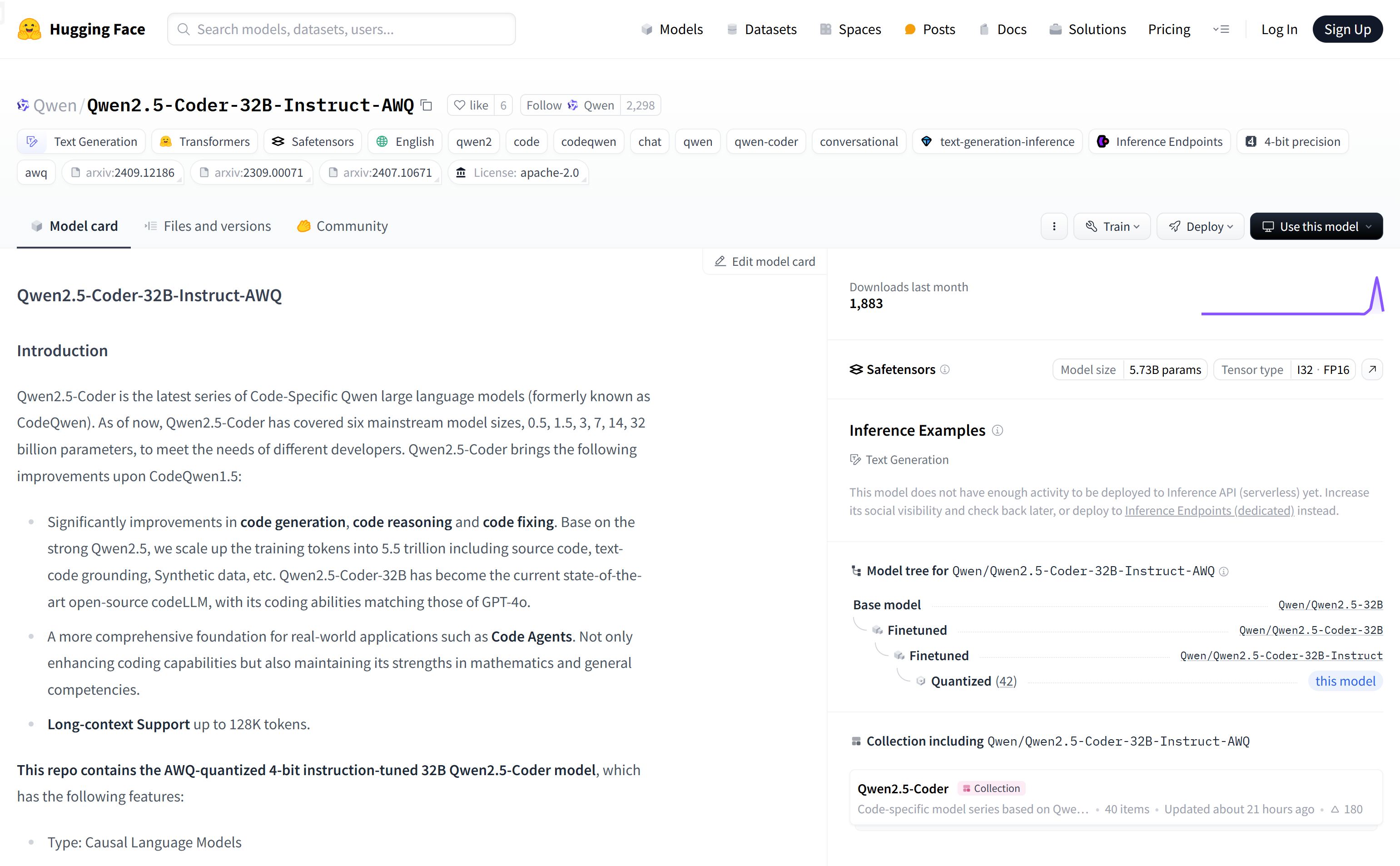

1. Visit the Hugging Face website and search for the Qwen2.5-Coder-32B-Instruct-AWQ model.

2. Import the necessary libraries and modules according to the code snippets provided on the page.

3. Load the model and tokenizer using the AutoModelForCausalLM.from_pretrained and AutoTokenizer.from_pretrained methods.

4. Prepare the input prompt, such as the requirements for writing an algorithm.

5. Process the input message using the tokenizer.apply_chat_template method to generate the model input.

6. Call the model.generate method to generate the code.

7. Use the tokenizer.batch_decode method to convert the generated token IDs into text.

8. Analyze and test the generated code to ensure it meets the expected functionality and quality.
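
The steps above can be sketched in Python roughly as follows. This is a minimal sketch, not the official snippet: it assumes the `transformers` library (plus PyTorch and an AWQ backend such as `autoawq`) is installed, that the model is published under the repository id `Qwen/Qwen2.5-Coder-32B-Instruct-AWQ`, and that a GPU with enough memory is available. The helper names `build_messages` and `generate_code`, the default system prompt, and the `max_new_tokens` value are illustrative choices.

```python
# Assumed Hugging Face repository id for the model.
MODEL_NAME = "Qwen/Qwen2.5-Coder-32B-Instruct-AWQ"

# Assumed default system prompt; replace it with whatever suits your application.
DEFAULT_SYSTEM_PROMPT = "You are Qwen, created by Alibaba Cloud. You are a helpful assistant."


def build_messages(prompt: str, system_prompt: str = DEFAULT_SYSTEM_PROMPT) -> list:
    """Step 4: wrap the request in the chat message format."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": prompt},
    ]


def generate_code(prompt: str, max_new_tokens: int = 512) -> str:
    """Steps 2-7: load the model, build the input, generate, and decode."""
    # Step 2: imported here so build_messages works without the heavy dependency.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    # Step 3: load the model and tokenizer (downloads the weights on first use).
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_NAME, torch_dtype="auto", device_map="auto"
    )
    tokenizer = AutoTokenizer.from_pretrained(MODEL_NAME)

    # Step 5: render the messages into the model's chat format.
    text = tokenizer.apply_chat_template(
        build_messages(prompt), tokenize=False, add_generation_prompt=True
    )
    model_inputs = tokenizer([text], return_tensors="pt").to(model.device)

    # Step 6: generate a completion.
    generated_ids = model.generate(**model_inputs, max_new_tokens=max_new_tokens)

    # Keep only the newly generated tokens by dropping the prompt tokens.
    new_token_ids = [
        output_ids[len(input_ids):]
        for input_ids, output_ids in zip(model_inputs.input_ids, generated_ids)
    ]

    # Step 7: convert the token IDs back into text.
    return tokenizer.batch_decode(new_token_ids, skip_special_tokens=True)[0]
```

Calling `generate_code("write a quick sort algorithm.")` would then return the model's reply as a string. As step 8 notes, the output should still be reviewed and tested before use.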
