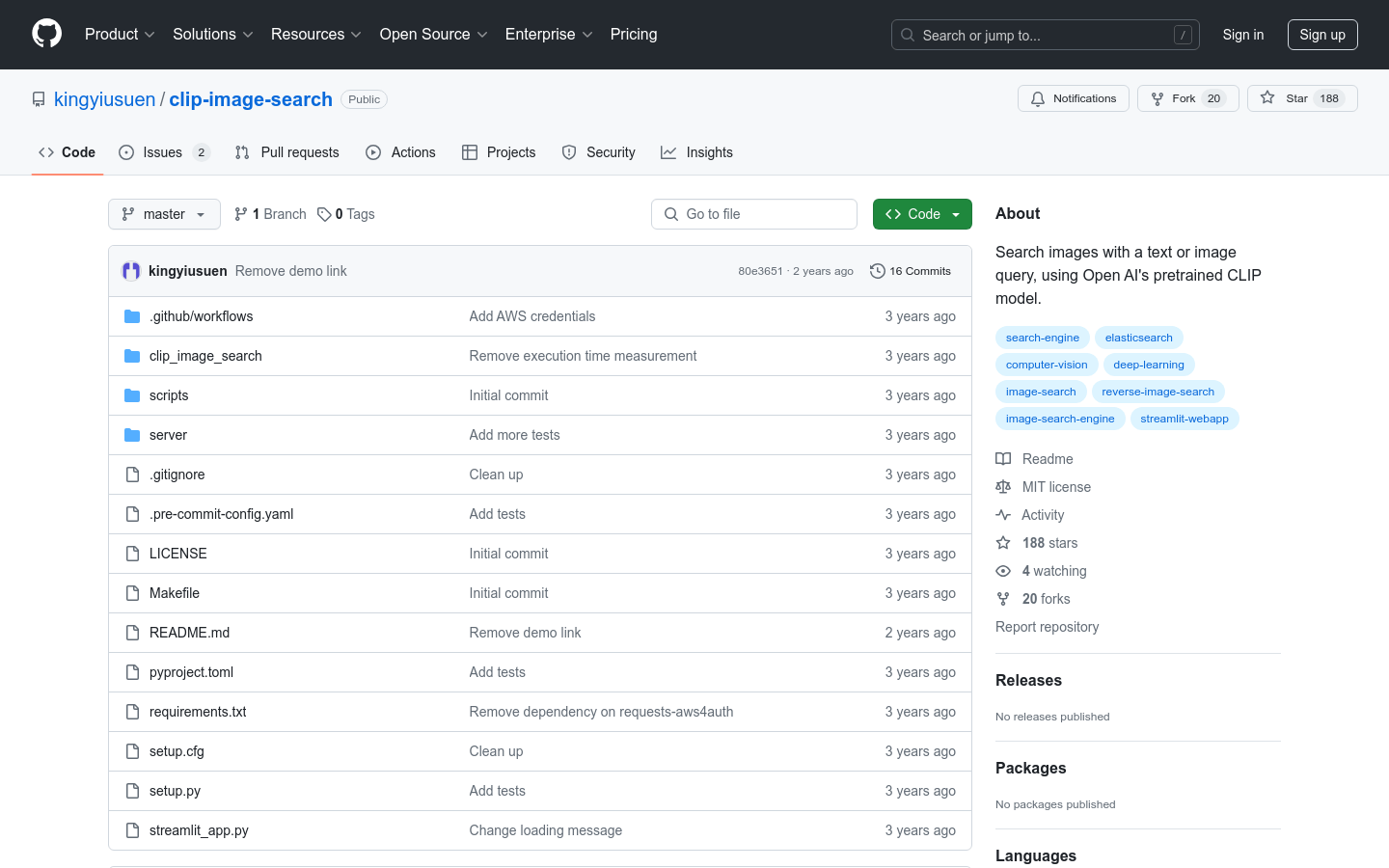

CLIP Image Search

Overview

clip-image-search is an image search tool built on OpenAI's pretrained CLIP model. It retrieves images from either a text query or an image query. CLIP is trained to map images and text into a shared embedding space, so an image and a piece of text can be compared directly with a similarity metric such as cosine similarity. The tool indexes images from the Unsplash dataset and runs k-nearest neighbor (k-NN) searches with Amazon Elasticsearch Service. The query service is deployed with AWS Lambda behind Amazon API Gateway, and the frontend is built with Streamlit.
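As a rough illustration of the k-NN step, the sketch below builds a search body in the format accepted by the Open Distro k-NN plugin used by Amazon Elasticsearch Service. The field name `feature_vector`, the returned `_source` fields, and the default `k` are assumptions for illustration, not taken from the project.

```python
import json

def build_knn_query(query_vector, k=10, field="feature_vector"):
    """Build a k-NN search body in the Open Distro k-NN plugin format.

    `field` is a hypothetical name for the knn_vector field that holds the
    CLIP image embeddings; it must match the real index mapping.
    """
    return {
        "size": k,
        "query": {
            "knn": {
                field: {
                    "vector": list(query_vector),
                    "k": k,
                }
            }
        },
        # Only return the fields needed to display results (assumed names).
        "_source": ["image_id", "url"],
    }

body = build_knn_query([0.1, 0.2, 0.3], k=3)
print(json.dumps(body))
```

In practice `query_vector` would be the CLIP embedding of the text or image query, and `k` controls how many neighbors the engine returns.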

Target Users

The target audience is developers and researchers who need to search image collections, particularly those interested in image retrieval with deep learning models. The tool gives them a straightforward way to retrieve images by text description or by example image, and its HTTP query service can be integrated into existing systems.

Use Cases

Researchers use this tool to retrieve images matching specific text descriptions for visual recognition studies.

Developers integrate this tool into their applications to provide text-based image search capabilities.

Educators use this tool to help students understand the connections between images and text.

Features

Use the CLIP model's image encoder to calculate feature vectors for images in the dataset

Index images by image ID, storing their URLs and feature vectors

Compute a feature vector for each query, using CLIP's text encoder for text queries and its image encoder for image queries

Calculate cosine similarity between the query feature vector and dataset image feature vectors

Return the k most similar images
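The project delegates the similarity search to Elasticsearch, but the underlying logic of the feature list above can be sketched as a brute-force search in plain Python. This is a reference sketch only: the index is a hypothetical in-memory dict, and the toy 2-D vectors stand in for real CLIP embeddings.

```python
import heapq
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def top_k(query_vec, index, k):
    """Return the k (image_id, url, score) tuples most similar to query_vec.

    `index` maps image_id -> (url, feature_vector), mirroring the
    "index images by image ID, storing their URLs and feature vectors" step.
    """
    scored = (
        (cosine_similarity(query_vec, vec), image_id, url)
        for image_id, (url, vec) in index.items()
    )
    best = heapq.nlargest(k, scored)  # highest similarity first
    return [(image_id, url, score) for score, image_id, url in best]

index = {
    "a": ("https://example.com/a.jpg", [1.0, 0.0]),
    "b": ("https://example.com/b.jpg", [0.0, 1.0]),
    "c": ("https://example.com/c.jpg", [0.7, 0.7]),
}
print(top_k([1.0, 0.1], index, k=2))
```

A linear scan like this is fine for small collections; a k-NN index in Elasticsearch avoids comparing the query against every stored vector as the dataset grows.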

How to Use

Install dependencies

Download the Unsplash dataset and extract metadata
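A minimal sketch of the metadata extraction step, assuming the downloaded metadata is a tab-separated file with `photo_id` and `photo_image_url` columns (as in the Unsplash Lite `photos.tsv*` files). The column names should be checked against the header of the dataset version actually used; `load_photo_metadata` is a hypothetical helper.

```python
import csv
import io

def load_photo_metadata(tsv_file):
    """Map photo_id -> image URL from an Unsplash-style TSV file.

    Assumes columns named `photo_id` and `photo_image_url`; verify these
    against the header of the downloaded dataset.
    """
    reader = csv.DictReader(tsv_file, delimiter="\t")
    return {row["photo_id"]: row["photo_image_url"] for row in reader}

# In-memory sample standing in for the real file.
sample = io.StringIO(
    "photo_id\tphoto_url\tphoto_image_url\n"
    "abc123\thttps://unsplash.com/photos/abc123\thttps://images.unsplash.com/photo-abc\n"
)
print(load_photo_metadata(sample))
```

For the real file, open it with `open(path, newline="", encoding="utf-8")` and pass the file object in place of `sample`.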

Create an index and upload image feature vectors to Elasticsearch
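The index creation and upload step might look like the sketch below: an index definition in the Open Distro k-NN plugin format and an NDJSON body for the Elasticsearch `_bulk` API. The field names, the `knn.space_type` setting (used by older Open Distro releases), and the 512-dimensional embedding size (as produced by CLIP ViT-B/32) are all assumptions to check against the deployed version and chosen model.

```python
import json

EMBEDDING_DIM = 512  # assumption: CLIP ViT-B/32 produces 512-d embeddings

def index_definition(dim=EMBEDDING_DIM):
    """Index settings and mappings in the Open Distro k-NN plugin format."""
    return {
        "settings": {
            "index": {
                "knn": True,
                # Older Open Distro releases select cosine similarity here;
                # newer releases configure the space per field instead.
                "knn.space_type": "cosinesimil",
            }
        },
        "mappings": {
            "properties": {
                "image_id": {"type": "keyword"},
                "url": {"type": "keyword"},
                "feature_vector": {"type": "knn_vector", "dimension": dim},
            }
        },
    }

def bulk_body(index_name, records):
    """Build an NDJSON _bulk payload from (image_id, url, vector) tuples."""
    lines = []
    for image_id, url, vector in records:
        # Action line: index the document under its image ID.
        lines.append(json.dumps({"index": {"_index": index_name, "_id": image_id}}))
        # Source line: the stored fields, including the feature vector.
        lines.append(json.dumps({"image_id": image_id, "url": url,
                                 "feature_vector": list(vector)}))
    return "\n".join(lines) + "\n"  # the _bulk API requires a trailing newline
```

Using the image ID as the document `_id` means re-uploading the same image overwrites its entry rather than creating a duplicate.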

Build a Docker image for AWS Lambda
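The Docker image built for AWS Lambda needs a handler entry point. The sketch below shows one possible shape for a text-query handler. `make_handler`, `encode_text`, `search`, and the `{"query", "k"}` payload are hypothetical names, not the project's actual code, and image queries are omitted for brevity.

```python
import json

def make_handler(encode_text, search):
    """Return a Lambda-style handler(event, context).

    `encode_text` (query string -> vector) and `search` (vector, k -> results)
    are hypothetical hooks standing in for the CLIP text encoder and the
    Elasticsearch k-NN call.
    """
    def handler(event, context):
        # API Gateway proxy integrations deliver the request body as a string;
        # direct invocations (e.g. local tests) may send the payload itself.
        payload = event["body"] if "body" in event else event
        if isinstance(payload, str):
            payload = json.loads(payload or "{}")
        payload = payload or {}
        query = payload.get("query")
        if not query:
            return {"statusCode": 400,
                    "body": json.dumps({"error": "missing 'query'"})}
        k = int(payload.get("k", 10))
        results = search(encode_text(query), k)
        # Response shape expected by API Gateway proxy integrations.
        return {
            "statusCode": 200,
            "headers": {"Content-Type": "application/json"},
            "body": json.dumps({"results": results}),
        }
    return handler
```

Injecting the encoder and search function keeps the request handling testable with stubs, without loading the CLIP model or reaching Elasticsearch.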

Run the Docker image as a container and test it with POST requests
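To test the running container with a POST request, the sketch below targets the invocation endpoint exposed by the Lambda Runtime Interface Emulator bundled with AWS Lambda base images. The port mapping (`docker run -p 9000:8080 <image>`) and the `{"query", "k"}` payload shape are assumptions.

```python
import json
import urllib.request

# Endpoint exposed by the Lambda Runtime Interface Emulator when the container
# is started with e.g. `docker run -p 9000:8080 <image>` (port mapping assumed).
LOCAL_INVOKE_URL = "http://localhost:9000/2015-03-31/functions/function/invocations"

def build_invoke_request(query, k=10, url=LOCAL_INVOKE_URL):
    """Build a POST request carrying a hypothetical {"query", "k"} payload."""
    data = json.dumps({"query": query, "k": k}).encode("utf-8")
    return urllib.request.Request(
        url, data=data, headers={"Content-Type": "application/json"}, method="POST"
    )

def invoke(query, k=10):
    """Send the request and decode the JSON response (container must be running)."""
    with urllib.request.urlopen(build_invoke_request(query, k), timeout=30) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

With the container running, `invoke("a dog on a beach", k=5)` returns whatever the handler inside the image produces.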

Run a Streamlit application for frontend presentation
