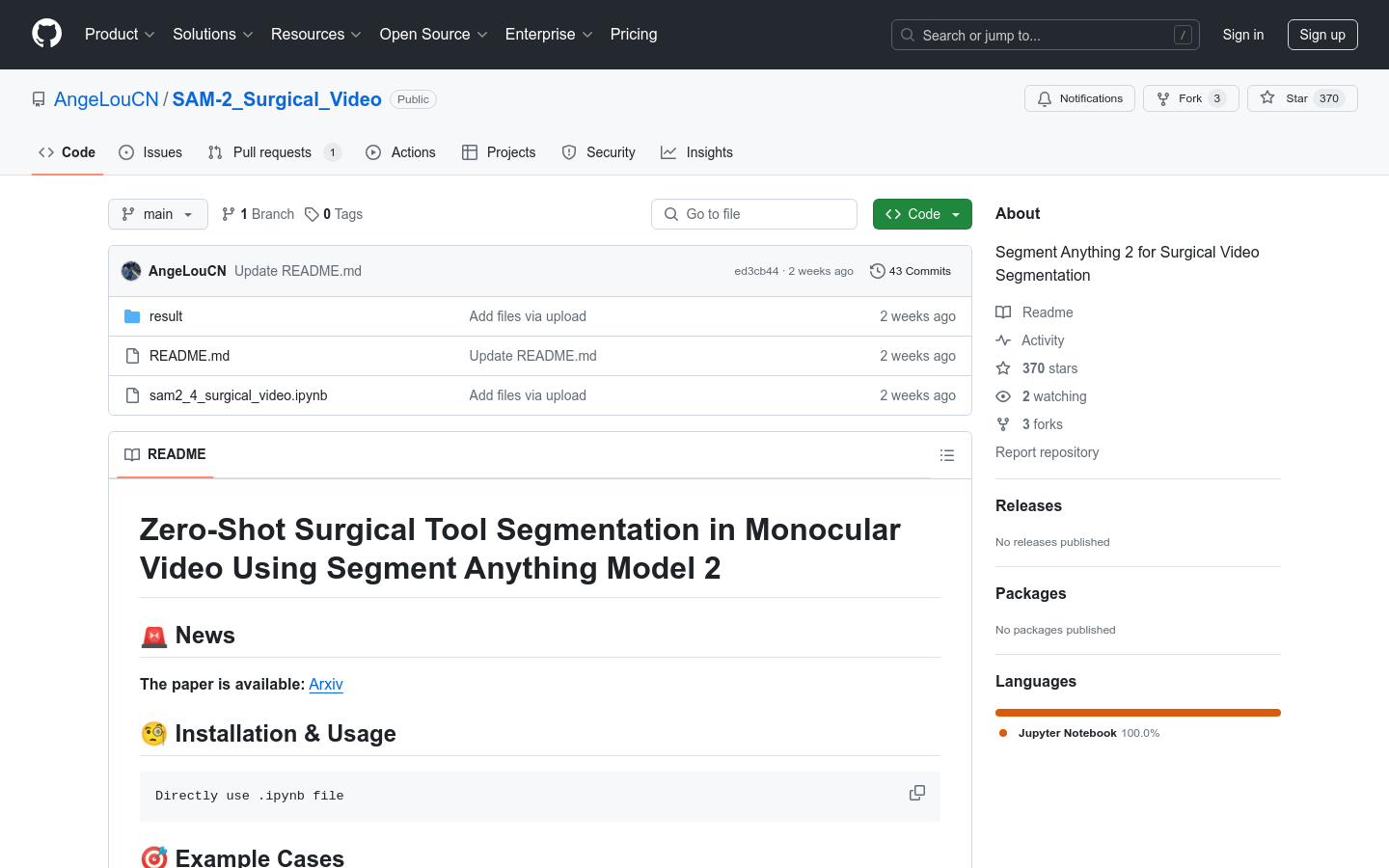

Segment Anything 2 For Surgical Video Segmentation

Overview:

Segment Anything 2 for Surgical Video Segmentation applies the Segment Anything Model 2 (SAM 2) to surgical video. It segments surgical videos automatically to identify and localize surgical tools, with the aim of making surgical video analysis faster and more accurate. The model is described as suitable for a range of surgical scenarios, including endoscopic surgery and cochlear implantation, and as precise and robust across them.

Target Users:

This product is primarily aimed at researchers and developers in the field of medical image analysis, as well as medical institutions that require automated analysis of surgical videos. It helps users quickly and accurately extract key information from surgical videos, enhancing the efficiency of surgical analysis and assisting clinical decision-making.

Use Cases

In endoscopic surgery, the model automatically identifies surgical tools to assist surgeons in planning and evaluating procedures.

During cochlear implantation, the model is used to locate surgical tools, enhancing the precision and safety of the surgery.

In surgical video teaching and research, the model automatically segments the surgical process, facilitating teaching and academic communication.

Features

Automatically identifies surgical tools present in surgical videos.

Supports a variety of surgical video datasets, including EndoNeRF, EndoVis'17, SurgToolLoc, and cochlear implantation datasets.

Offers zero-shot capability, recognizing surgical tools without the need for extensive labeled data.

Trains and runs inference quickly, making it suitable for real-time surgical video analysis.

Supports Jupyter Notebook environments, making it convenient for researchers and developers to conduct experiments and development.

Continuously updated and maintained so the model stays current and applicable.
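
To make the tool-identification feature more concrete, the sketch below shows one way per-frame segmentation masks could be turned into a record of which tools appear in which frames. It is an illustration only: the function name `tool_presence`, the `min_pixels` threshold, and the nested-dictionary mask layout are assumptions made for this example and are not taken from the project.

```python
def tool_presence(masks_by_frame, min_pixels=1):
    """Map each tool id to the sorted frame indices where its mask is non-empty.

    masks_by_frame is a hypothetical layout:
        {frame_idx: {tool_id: 2D list of 0/1 values}}
    """
    presence = {}
    for frame_idx in sorted(masks_by_frame):
        for tool_id, mask in masks_by_frame[frame_idx].items():
            # Mask area = number of pixels labeled 1.
            area = sum(sum(row) for row in mask)
            if area >= min_pixels:
                presence.setdefault(tool_id, []).append(frame_idx)
    return presence


# Tiny synthetic example: tool 1 is visible in frame 0, tool 2 in frame 1.
example_masks = {
    0: {1: [[0, 1], [0, 0]], 2: [[0, 0], [0, 0]]},
    1: {1: [[0, 0], [0, 0]], 2: [[1, 1], [0, 0]]},
}
example_presence = tool_presence(example_masks)
```

Raising `min_pixels` would ignore tiny spurious masks, at the cost of missing tools that are only barely in view.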

How to Use

1. Visit the GitHub page to learn about the model's basic information and usage requirements.

2. Clone or download the model's code repository to your local environment.

3. Install the required libraries and tools, such as Python and Jupyter Notebook.

4. Configure the model's parameters and environment following the guidance in the README document.

5. Prepare the surgical video data and preprocess it according to the model's requirements.

6. Run the model to automatically segment the surgical video and identify tools.

7. Analyze the model's output, then post-process or apply it as needed.
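
Steps 5 and 6 above can be sketched roughly as follows. This is a minimal sketch under stated assumptions, not the repository's own script. It assumes the video has already been exported as a directory of JPEG frames named by frame index (for example `00000.jpg`), which is the input layout used by the upstream SAM 2 video predictor. The helper names `sorted_frame_names` and `segment_tool` are hypothetical. The SAM 2 calls follow the upstream SAM 2 video predictor interface; whether this project exposes the same entry points, and which config and checkpoint files it expects, should be checked against its README.

```python
import os


def sorted_frame_names(frame_dir):
    """Return JPEG frame filenames ordered by their integer stem (e.g. 00012.jpg)."""
    names = [
        name for name in os.listdir(frame_dir)
        if os.path.splitext(name)[1].lower() in (".jpg", ".jpeg")
        and os.path.splitext(name)[0].isdigit()
    ]
    return sorted(names, key=lambda name: int(os.path.splitext(name)[0]))


def segment_tool(frame_dir, config_path, checkpoint_path, click_xy, device="cuda"):
    """Prompt one tool with a single positive click on frame 0 and propagate it.

    Assumes the upstream SAM 2 package is installed; config_path and
    checkpoint_path are placeholders supplied by the caller.
    """
    # Imported lazily so the helper above works without SAM 2 installed.
    import numpy as np
    import torch
    from sam2.build_sam import build_sam2_video_predictor

    predictor = build_sam2_video_predictor(config_path, checkpoint_path, device=device)
    masks_per_frame = {}
    with torch.inference_mode():
        state = predictor.init_state(video_path=frame_dir)
        predictor.add_new_points_or_box(
            inference_state=state,
            frame_idx=0,
            obj_id=1,  # arbitrary id chosen for the tracked tool
            points=np.array([click_xy], dtype=np.float32),
            labels=np.array([1], dtype=np.int32),  # 1 marks a positive click
        )
        # Propagate the prompt through the video and threshold logits at 0.
        for frame_idx, obj_ids, mask_logits in predictor.propagate_in_video(state):
            masks_per_frame[frame_idx] = (mask_logits[0] > 0.0).cpu().numpy()
    return masks_per_frame
```

Calling `sorted_frame_names` on the frame directory before running the model is a quick way to confirm that frames are complete and in order, since files without a numeric name are skipped.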
