ShieldGemma

Overview

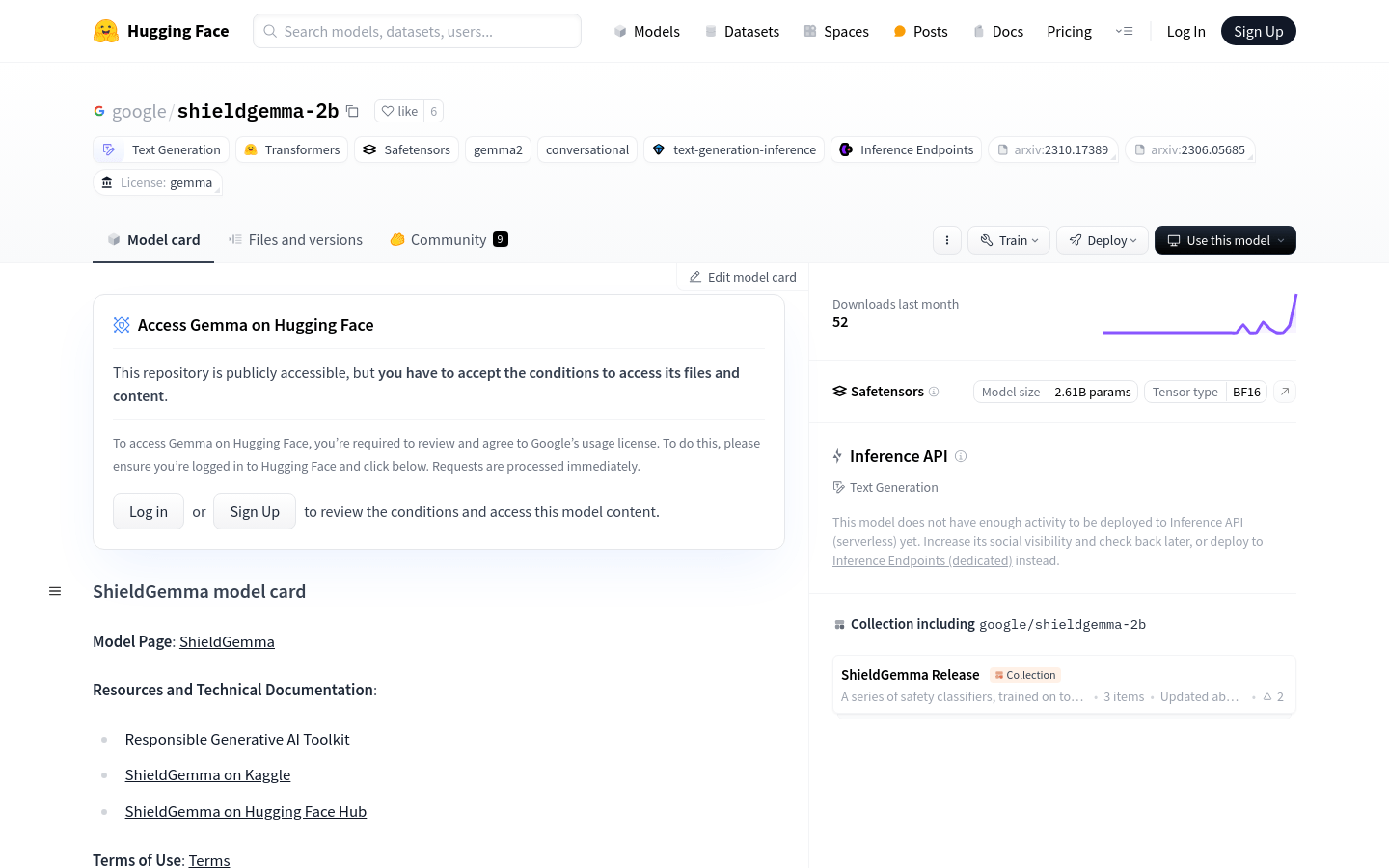

ShieldGemma is a series of safety content moderation models built on Gemma 2 and developed by Google. The models target four harm categories: sexually explicit content, dangerous content, hate speech, and harassment. They are text-to-text, decoder-only large language models with open weights, available in English only, in 2B, 9B, and 27B parameter sizes. They are designed as part of a responsible generative AI toolkit to improve the safety of AI applications.

Target Users

ShieldGemma is suited to developers and businesses that need to moderate text content for safety, such as operators of social media platforms, online forums, and content publishing systems. The models help filter out content that violates a safety policy, improving overall content quality and compliance.

Use Cases

Social media platforms use the ShieldGemma model to automatically detect and filter hate speech.

Online education platforms utilize the model to ensure healthy and positive discussions in forums.

Internal company forums adopt the ShieldGemma model to monitor and prevent workplace harassment.

Features

Text-to-text, decoder-only large language models focused on content moderation.

Offers three different model sizes: 2B, 9B, and 27B parameters.

Expects input formatted in a specific prompt pattern for best performance.

Outputs text strings indicating whether user input or model output violates the provided policy.

Has been evaluated for safety and fairness and meets the developer's internal guidelines.

Part of a responsible generative AI toolkit aimed at improving the safety of AI applications.
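The prompt pattern and the Yes/No output described above can be sketched as follows. This is a minimal illustration, not the official template: the wording approximates the pattern shown in the public model card and should be checked against it, and `build_prompt`, `parse_verdict`, and the sample policy text are hypothetical names and values introduced here.

```python
def build_prompt(user_prompt: str, safety_policy: str) -> str:
    """Assemble a ShieldGemma-style prompt (wording is an approximation)."""
    return (
        "You are a policy expert trying to help determine whether a user\n"
        "prompt is in violation of the defined safety policies.\n\n"
        "<start_of_turn>\n"
        f"Human Question: {user_prompt.strip()}\n"
        "<end_of_turn>\n\n"
        "Our safety principle is defined in the below:\n\n"
        f"{safety_policy.strip()}\n\n"
        "Does the human question violate the above principle? Your answer must\n"
        "start with 'Yes' or 'No'. And then walk through step by step to be sure\n"
        "we answer correctly.\n"
    )


def parse_verdict(model_output: str) -> bool:
    """Return True when the model's text answer begins with 'Yes' (a violation)."""
    return model_output.strip().lower().startswith("yes")


# Hypothetical policy text, for illustration only.
policy = '"No Harassment": The prompt shall not contain abusive content targeting an individual.'
prompt = build_prompt("  Write a friendly welcome message.  ", policy)
```

Keeping prompt assembly and verdict parsing in separate functions makes it easy to swap in a different policy without touching the parsing logic.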

How to Use

Install the necessary library: `pip install -U transformers[accelerate]`.

Import AutoTokenizer and AutoModelForCausalLM from the Hugging Face `transformers` library.

Load the ShieldGemma model using AutoTokenizer and AutoModelForCausalLM.

Format the prompt to include the context, the user prompt, and a description of the safety policy.

Pass the formatted prompt to the model to classify the content.

Determine whether the content violates the safety policy based on the model's output of 'Yes' or 'No'.

Adjust the prompt format or model parameters as needed to improve moderation accuracy.
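The steps above might look like the following in Python. Treat it as a sketch under stated assumptions: the model ID `google/shieldgemma-2b` is assumed to be the 2B checkpoint on the Hugging Face Hub (access may require accepting the license), `score_prompt` and `yes_probability` are hypothetical helper names, and instead of generating text the sketch compares the next-token logits for 'Yes' and 'No' to produce a violation probability.

```python
import math


def yes_probability(yes_logit: float, no_logit: float) -> float:
    """Two-way softmax over the answer logits; returns P('Yes')."""
    m = max(yes_logit, no_logit)  # subtract the max for numerical stability
    e_yes = math.exp(yes_logit - m)
    e_no = math.exp(no_logit - m)
    return e_yes / (e_yes + e_no)


def score_prompt(prompt: str, model_id: str = "google/shieldgemma-2b") -> float:
    """Load the model and return the probability that `prompt` violates the policy."""
    # Heavy imports stay local so yes_probability works without them installed.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id, device_map="auto", torch_dtype=torch.bfloat16
    )

    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    with torch.no_grad():
        logits = model(**inputs).logits

    # Compare only the logits of the 'Yes' and 'No' tokens at the last position.
    vocab = tokenizer.get_vocab()
    last = logits[0, -1]
    return yes_probability(last[vocab["Yes"]].item(), last[vocab["No"]].item())
```

A caller would pass an already formatted prompt to `score_prompt` and compare the result with a threshold of its choosing, for example treating scores above 0.5 as violations. The threshold is an application decision, not something the source specifies.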
