FunAudioLLM

Overview

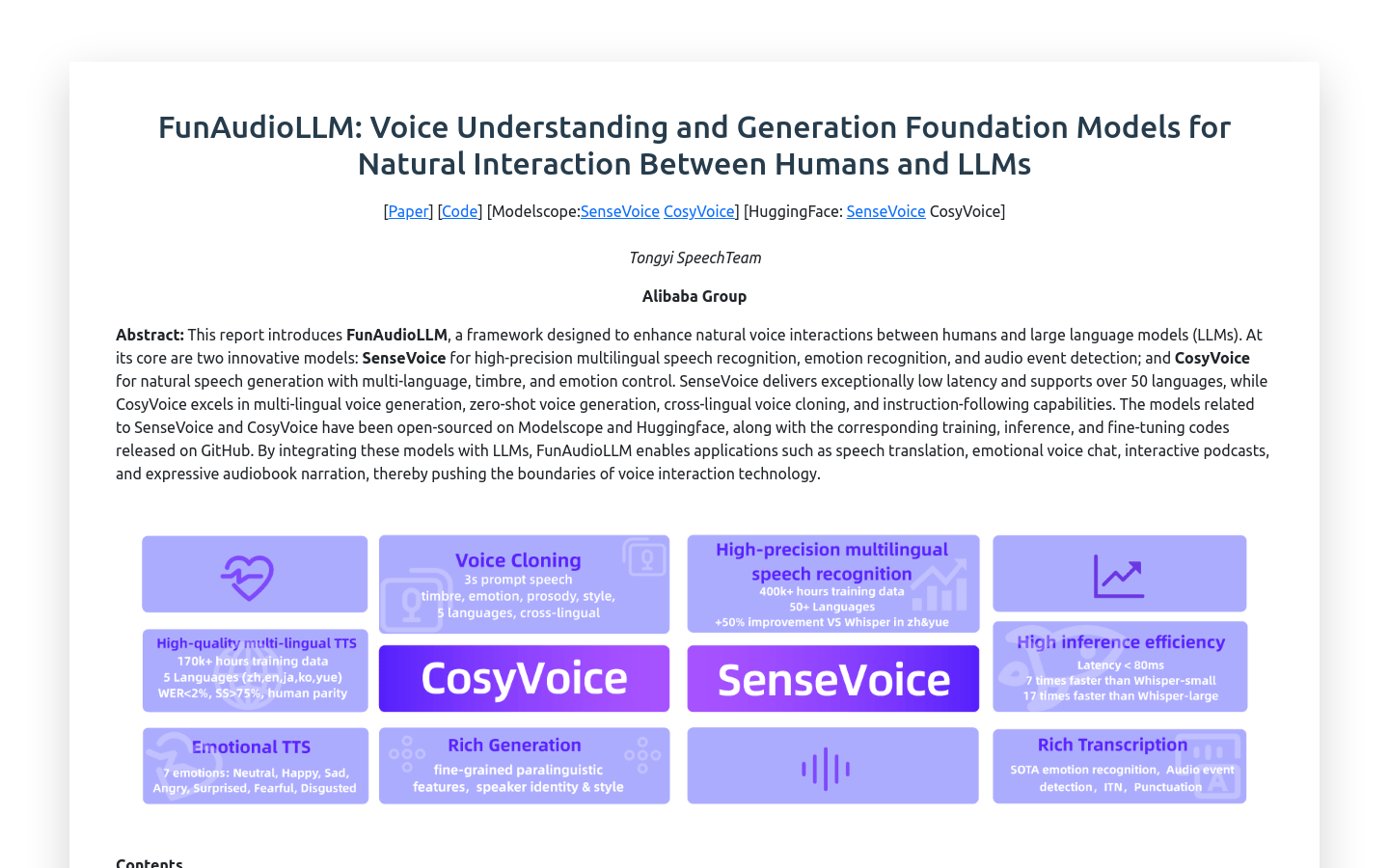

FunAudioLLM is a framework for natural voice interaction between humans and large language models (LLMs). It comprises two models: SenseVoice, which handles high-precision multilingual speech recognition, speech emotion recognition, and audio event detection; and CosyVoice, which handles natural speech generation with control over language, timbre, and emotion. SenseVoice supports more than 50 languages with extremely low latency. CosyVoice excels at multilingual speech generation, zero-shot in-context generation, cross-lingual voice cloning, and instruction following. The models are open-sourced on ModelScope and Hugging Face, and the corresponding training, inference, and fine-tuning code is released on GitHub.

Target Users

FunAudioLLM targets developers, voice technology researchers, and enterprise users who want to build applications with advanced voice interaction features, such as voice translation, emotional voice chat, interactive podcasts, and expressive audiobook narration.

Use Cases

Combine SenseVoice, an LLM, and CosyVoice to build an emotional voice chat application that responds in a warm, friendly voice.

Use FunAudioLLM to create an interactive podcast in which listeners can talk with the podcast's virtual characters in real time.

Use an LLM to analyze the emotions in a book's text, then use CosyVoice to synthesize an expressive audiobook narration that makes listening more engaging.
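The emotional voice chat use case can be sketched as a three-stage loop: speech recognition with emotion detection, an LLM reply, and expressive synthesis. This is a minimal sketch of the data flow only, not FunAudioLLM's actual API: `transcribe`, `chat`, and `synthesize` are hypothetical stubs standing in for SenseVoice, an LLM, and CosyVoice, and the emotion-to-style mapping in `REPLY_STYLE` is an assumed policy.

```python
from dataclasses import dataclass


@dataclass
class AsrResult:
    text: str
    emotion: str  # assumed SenseVoice-style emotion label, e.g. "SAD"


# Hypothetical stand-ins for real model calls. In a real application these
# would wrap SenseVoice (recognition), an LLM (reply), and CosyVoice (synthesis).
def transcribe(audio: bytes) -> AsrResult:
    return AsrResult(text="I failed my exam today.", emotion="SAD")


def chat(prompt: str) -> str:
    return "I'm sorry to hear that. Do you want to talk about it?"


def synthesize(text: str, style: str) -> bytes:
    # Placeholder "audio": encodes style and text so the flow is easy to inspect.
    return f"[{style}] {text}".encode("utf-8")


# Assumed policy: choose a speaking style for the reply from the user's emotion.
REPLY_STYLE = {
    "SAD": "gentle and comforting",
    "ANGRY": "calm and patient",
    "HAPPY": "cheerful",
}


def voice_chat_turn(audio: bytes) -> bytes:
    heard = transcribe(audio)  # speech -> text + emotion
    prompt = (
        f"The user sounds {heard.emotion.lower()}. "
        f"Reply briefly and empathetically to: {heard.text}"
    )
    reply = chat(prompt)  # text -> reply text
    style = REPLY_STYLE.get(heard.emotion, "friendly")  # fall back to a neutral tone
    return synthesize(reply, style)  # reply text -> speech


if __name__ == "__main__":
    print(voice_chat_turn(b"...").decode("utf-8"))
```

Keeping each stage behind its own function makes it straightforward to swap a stub for a real model call without changing the turn logic. The audiobook use case follows the same pattern, with the LLM labeling the emotion of each passage instead of replying to a user.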

Features

High-precision multi-lingual speech recognition: Supports speech recognition in over 50 languages with extremely low latency.

Emotion recognition: Recognizes the speaker's emotion (for example, happy, sad, or angry), so that applications can respond appropriately.

Audio event detection: Detects specific events in audio, such as music, applause, and laughter.

Natural speech generation: The CosyVoice model generates natural, fluent speech in multiple languages.

Zero-shot in-context generation: Generates speech in the voice of a short reference recording without additional training.

Cross-lingual voice cloning: Reproduces a speaker's voice in a language different from the one spoken in the reference audio.

Instruction following: Generates speech in the style specified by the user's instructions.
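SenseVoice's recognition, emotion, and audio-event outputs arrive together as one rich transcription. Assuming the raw (not post-processed) output prefixes the text with tags in the form `<|en|><|HAPPY|><|Laughter|><|woitn|>` (language, emotion, audio event, then an inverse-text-normalization flag), a small parser can split those fields out for downstream logic. The tag layout is an assumption to verify against the SenseVoice repository, and `parse_rich_transcript` and `RichTranscript` are hypothetical names, not part of any released API.

```python
import re
from dataclasses import dataclass

# Matches one "<|...|>" tag and captures its contents.
_TAG = re.compile(r"<\|([^|]+)\|>")


@dataclass
class RichTranscript:
    language: str
    emotion: str
    event: str
    text: str


def parse_rich_transcript(raw: str) -> RichTranscript:
    """Split an assumed <|lang|><|emotion|><|event|><|itn|>text string into fields."""
    tags = _TAG.findall(raw)
    if len(tags) < 3:
        raise ValueError(f"expected at least 3 tags, got {tags!r}")
    language, emotion, event = tags[:3]  # the 4th tag (ITN flag) is ignored here
    text = _TAG.sub("", raw).strip()     # remove every tag, keep only the transcript
    return RichTranscript(language, emotion, event, text)


if __name__ == "__main__":
    print(parse_rich_transcript("<|en|><|HAPPY|><|Laughter|><|woitn|>that was so funny"))
```

Parsing into a typed record rather than passing raw strings around lets the chat or podcast logic branch on `emotion` or `event` directly.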

How to Use

Visit the FunAudioLLM GitHub page to learn about the models and their terms of use.

Choose SenseVoice (speech understanding), CosyVoice (speech generation), or both, based on your needs, and obtain the corresponding open-source code.

Read the documentation to understand the model's input/output format and how to configure parameters to meet specific requirements.

Set up a training and inference environment for the model on a local machine or cloud platform.

Use the provided code to train or fine-tune the model to suit specific application scenarios.

Integrate the model into your application to develop products with voice interaction features.

Test the application to verify speech recognition accuracy and the naturalness of the generated speech.

Use test results and user feedback to tune the models and improve the user experience.
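For the testing step, recognition accuracy is commonly measured as word error rate, WER = (S + D + I) / N, where S, D, and I are the substitutions, deletions, and insertions needed to turn the recognized text into the reference transcript and N is the number of reference words. Latency is often summarized as the real-time factor (processing time divided by audio duration). The helpers below are a minimal pure-Python sketch of both metrics, assuming whitespace-tokenized text and reference transcripts you supply; they are not part of FunAudioLLM.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER via Levenshtein distance over whitespace-separated words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the previous reference prefix and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr[j] = min(
                prev[j] + 1,         # deletion (reference word missing)
                curr[j - 1] + 1,     # insertion (extra recognized word)
                prev[j - 1] + cost,  # substitution, or match when cost == 0
            )
        prev = curr
    return prev[len(hyp)] / len(ref)


def real_time_factor(processing_seconds: float, audio_seconds: float) -> float:
    """RTF below 1.0 means the audio is processed faster than real time."""
    if audio_seconds <= 0:
        raise ValueError("audio_seconds must be positive")
    return processing_seconds / audio_seconds


if __name__ == "__main__":
    print(word_error_rate("hello world", "hello word"))  # one substitution out of two words
    print(real_time_factor(0.5, 10.0))
```

For languages written without spaces between words, such as Chinese or Japanese, the same distance is usually computed over characters (character error rate); replacing `.split()` with `list(...)` in `word_error_rate` gives that variant.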
