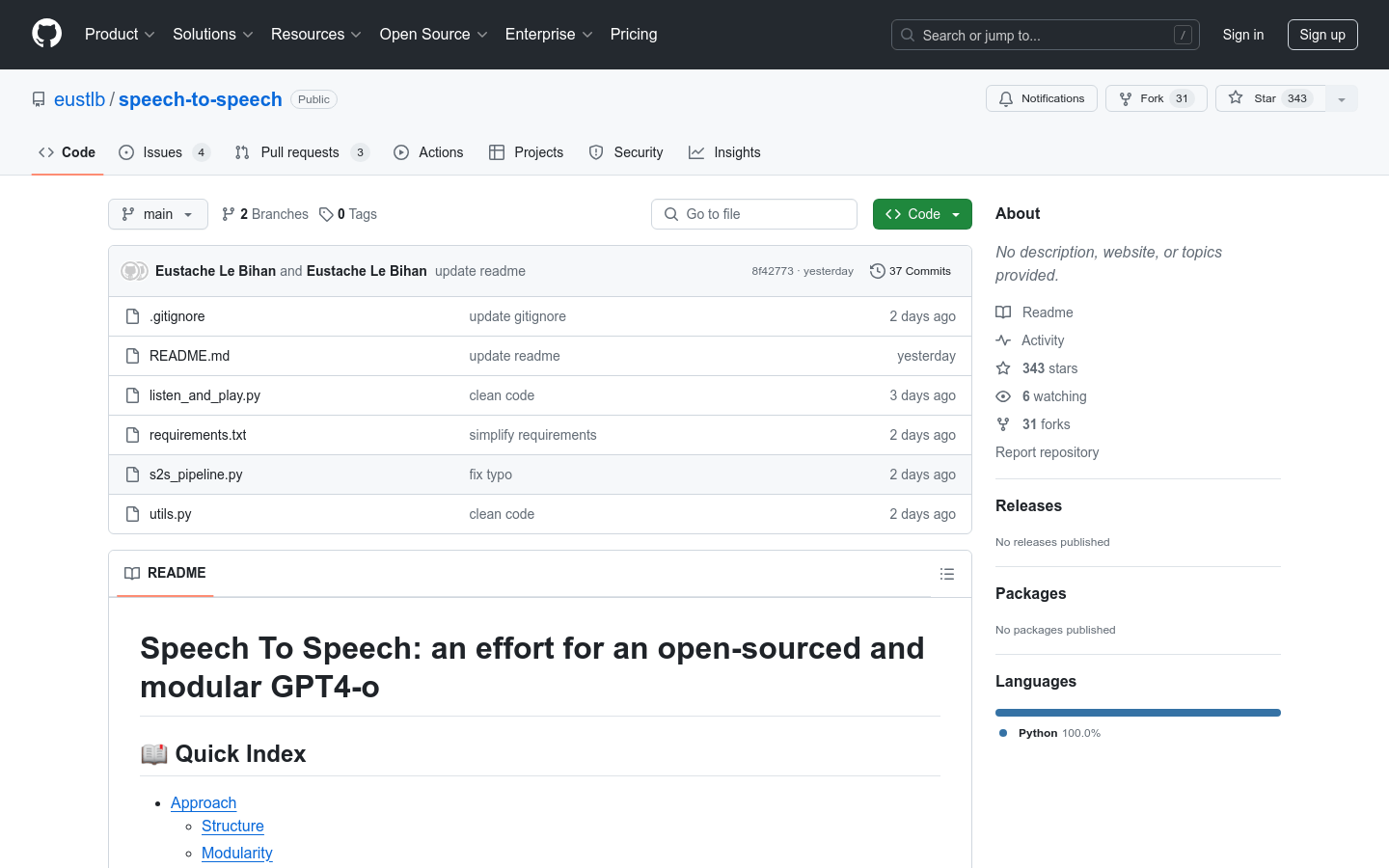

Speech To Speech

Overview

speech-to-speech is an open-source, modular effort to build a GPT-4o-style voice pipeline. It performs speech-to-speech conversion by chaining four components in sequence: voice activity detection, speech-to-text, a language model, and text-to-speech synthesis. It builds on the Transformers library and models available on the Hugging Face Hub, so each component can be swapped independently.

Target Users

The target audience is developers and researchers working on speech recognition, natural language processing, or speech synthesis. The project suits them because it is a flexible, customizable open-source tool that can be used for research or as the basis for related applications.

Use Cases

Developers can use the pipeline to build a voice assistant that supports spoken interaction.

Researchers can use the pipeline to run experiments and studies on speech recognition and synthesis.

Educational institutions can integrate it into teaching tools to enhance students' understanding of speech technology.

Features

Voice Activity Detection (VAD): Uses Silero VAD v5.

Speech to Text (STT): Utilizes the Whisper model, including a distilled version.

Language Model (LM): Any instruction-following model available on the Hugging Face Hub can be used.

Text to Speech (TTS): Employs Parler-TTS, supporting various checkpoints.

Modular Design: Each component is implemented as a class and can be re-implemented according to specific needs.

Run Modes: Supports both a server/client setup and running entirely on a local machine.
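
The modular design described above can be illustrated with a minimal sketch. It assumes each stage is a class with a `process` method, connected to its neighbours by queues. The `Handler` base class, the stub stages, and `run_pipeline` are hypothetical names used only for illustration; they are not the project's actual classes, and the stubs stand in for real models.

```python
import queue
import threading

SENTINEL = object()  # marks the end of the stream


class Handler:
    """Hypothetical base class for one pipeline stage."""

    def __init__(self, inbox, outbox):
        self.inbox = inbox
        self.outbox = outbox

    def process(self, item):
        raise NotImplementedError

    def run(self):
        # Pull items until the sentinel arrives, then pass the sentinel on.
        while True:
            item = self.inbox.get()
            if item is SENTINEL:
                self.outbox.put(SENTINEL)
                break
            result = self.process(item)
            if result is not None:  # None means "drop this item"
                self.outbox.put(result)


class StubVAD(Handler):
    def process(self, chunk):
        # Stand-in for voice activity detection: drop "silent" (blank) chunks.
        return chunk if chunk.strip() else None


class StubSTT(Handler):
    def process(self, speech):
        # Stand-in for speech-to-text.
        return speech.lower()


class StubLM(Handler):
    def process(self, text):
        # Stand-in for a language model reply.
        return f"you said: {text}"


class StubTTS(Handler):
    def process(self, text):
        # Stand-in for text-to-speech: bytes play the role of audio.
        return text.encode("utf-8")


def run_pipeline(chunks, stage_classes=(StubVAD, StubSTT, StubLM, StubTTS)):
    """Run chunks through the stages, one thread per stage."""
    queues = [queue.Queue() for _ in range(len(stage_classes) + 1)]
    stages = [cls(queues[i], queues[i + 1]) for i, cls in enumerate(stage_classes)]
    threads = [threading.Thread(target=stage.run) for stage in stages]
    for thread in threads:
        thread.start()
    for chunk in chunks:
        queues[0].put(chunk)
    queues[0].put(SENTINEL)
    outputs = []
    while True:
        item = queues[-1].get()
        if item is SENTINEL:
            break
        outputs.append(item)
    for thread in threads:
        thread.join()
    return outputs
```

Replacing one stage then means passing a different class, for example `run_pipeline(chunks, (StubVAD, StubSTT, MyLM, StubTTS))`, where `MyLM` is any subclass that overrides `process`.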

How to Use

Clone the repository to your local environment.

Install the required dependencies.

Configure model parameters and generation parameters as needed.

Choose a run method: server/client approach or local method.

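If using the server/client method, first start the pipeline on the server, then run the client on your own machine to capture audio input and play back the generated audio.

If using the local method, run both the pipeline and the client on the same machine and use the loopback address (localhost) as the host.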

Optionally, use Torch Compile to improve the performance of Whisper and Parler-TTS.

Run the pipeline from the command line, passing parameters to control the behavior of each component.
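
The difference between the two run methods above comes down to which host address the two sides use. The sketch below is a generic TCP illustration of that idea, not the project's actual protocol or code: `start_server`, `send_audio`, and `demo` are hypothetical helpers, and reversing the bytes stands in for the real processing. Here both sides use the loopback address `127.0.0.1`; in a server/client setup the server would instead bind to an externally reachable address and the client would connect to the server's IP.

```python
import socket
import threading


def serve_once(server_sock, process):
    """Accept one client, read all it sends, and reply with process(data)."""
    conn, _ = server_sock.accept()
    with conn:
        chunks = []
        while True:
            data = conn.recv(4096)
            if not data:  # client has finished sending
                break
            chunks.append(data)
        conn.sendall(process(b"".join(chunks)))


def start_server(host, process):
    """Bind to host on a free port and serve a single request in a thread."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind((host, 0))  # port 0 lets the OS pick a free port
    sock.listen(1)
    thread = threading.Thread(target=serve_once, args=(sock, process), daemon=True)
    thread.start()
    return sock, thread


def send_audio(host, port, payload):
    """Send payload to the server and return everything it sends back."""
    with socket.create_connection((host, port), timeout=5) as client:
        client.sendall(payload)
        client.shutdown(socket.SHUT_WR)  # signal end of input
        reply = []
        while True:
            data = client.recv(4096)
            if not data:
                break
            reply.append(data)
    return b"".join(reply)


def demo():
    """Local method: server and client both use the loopback address."""
    server_sock, thread = start_server("127.0.0.1", lambda audio: audio[::-1])
    port = server_sock.getsockname()[1]
    try:
        return send_audio("127.0.0.1", port, b"\x01\x02\x03")
    finally:
        thread.join(timeout=5)
        server_sock.close()
```

Only the host strings change between the two methods; the sending and receiving logic stays the same.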

Featured AI Tools

OpenVoice

OpenVoice is an open-source voice cloning technology that can accurately replicate the tone color of a reference voice and generate speech in multiple languages and accents. It offers flexible control over voice characteristics such as emotion, accent, rhythm, pauses, and intonation. It also supports zero-shot cross-lingual voice cloning, meaning that neither the language of the generated speech nor the language of the reference voice needs to be present in the training data.

AI speech recognition

2.4M

ChatTTS

ChatTTS is an open-source text-to-speech (TTS) model that converts text into speech. It is intended primarily for academic research and educational purposes and is not meant for commercial or legal use. It uses deep learning to generate natural, fluent speech, which makes it suitable for people working on speech synthesis research and development.

AI speech synthesis

1.4M