Seed-ASR

Overview

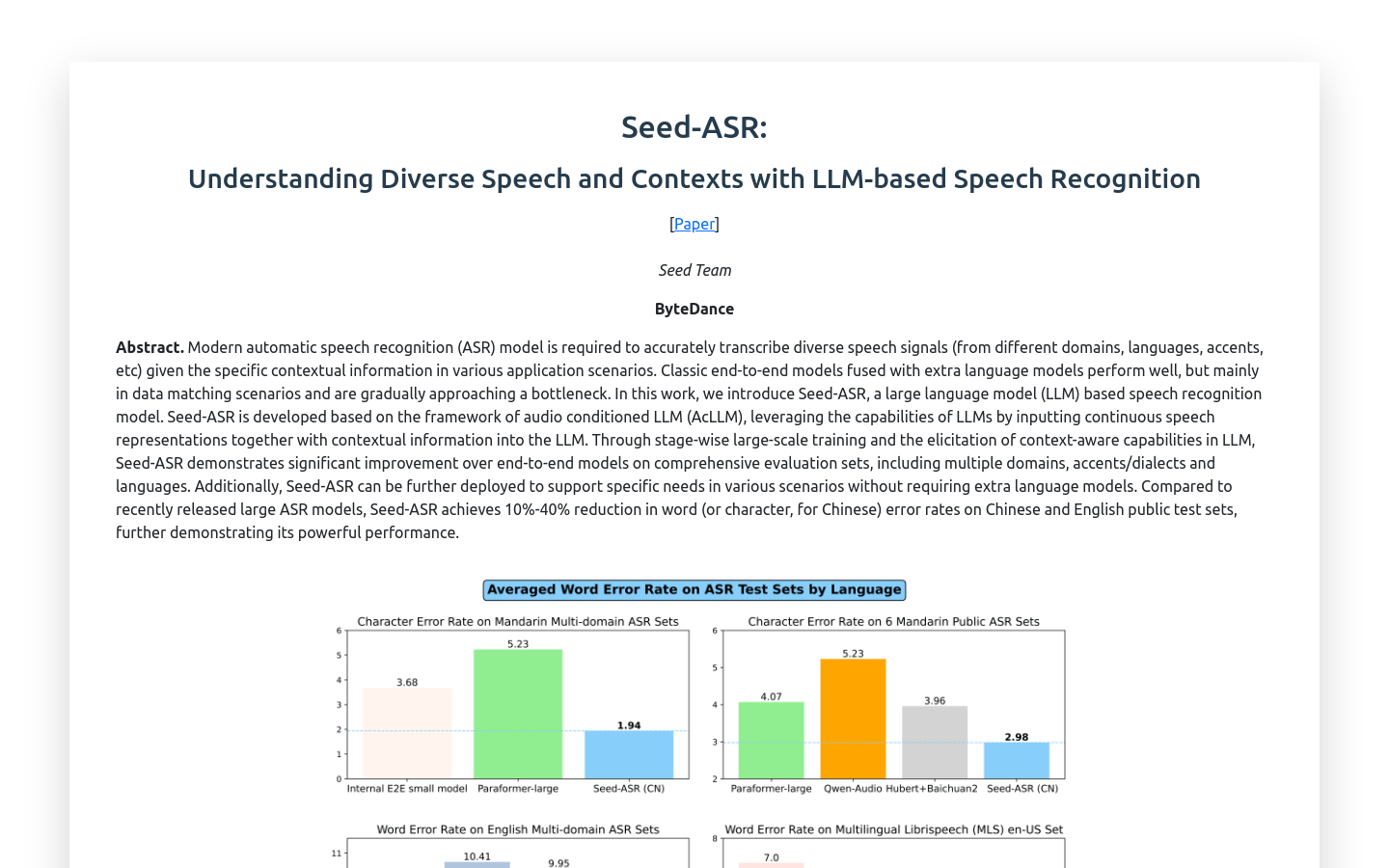

Seed-ASR is a speech recognition model developed by ByteDance and built on large language models (LLMs). It feeds continuous speech representations together with contextual information into the LLM. Through large-scale training and context-aware capabilities, it substantially improves accuracy on comprehensive evaluation sets that span multiple domains, accents and dialects, and languages. Compared with recently released large ASR models, Seed-ASR reduces word error rate by 10% to 40% on public Chinese and English test sets.
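To make the error-rate figure concrete, here is a minimal sketch of how word error rate (WER) is conventionally computed: the word-level edit distance between the reference and the recognized text, divided by the number of reference words. The function names are illustrative and are not part of Seed-ASR. Splitting on whitespace is an assumption that suits English; Chinese results are commonly scored per character instead. The page does not say whether the 10% to 40% figure is relative or absolute, so `relative_reduction` only shows the relative reading.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / reference word count."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from hypothesis
                curr[j - 1] + 1,     # insertion: extra word in hypothesis
                prev[j - 1] + cost,  # substitution, or match when cost is 0
            )
        prev = curr
    return prev[-1] / len(ref)


def relative_reduction(baseline_wer: float, new_wer: float) -> float:
    """Fraction by which new_wer is lower than baseline_wer."""
    if baseline_wer <= 0:
        raise ValueError("baseline_wer must be positive")
    return (baseline_wer - new_wer) / baseline_wer


if __name__ == "__main__":
    wer = word_error_rate("the cat sat on the mat", "the cat sit on mat")
    print(f"WER: {wer:.3f}")
    # Illustrative numbers only, not measured results.
    print(f"Relative reduction: {relative_reduction(0.10, 0.07):.0%}")
```

In the sample call, one substitution ("sat" to "sit") and one deletion (the second "the") against six reference words give a WER of 2/6. Under the relative reading, a baseline WER of 10% falling to 7% is a 30% reduction.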

Target Users

Seed-ASR is aimed at businesses and individuals who need high-accuracy speech recognition, such as speech-to-text service providers, multilingual content creators, and application developers who need speech recognition in complex environments. It is particularly suited to scenarios that involve multiple languages and dialects, or where accurate recognition depends on context specific to the setting.

Use Cases

Businesses use Seed-ASR for real-time transcription of meeting recordings, improving the efficiency and accuracy of meeting minutes.

Content creators utilize Seed-ASR to convert spoken content from videos or podcasts into text for easier distribution across multiple platforms.

Educational institutions adopt Seed-ASR for transcribing classroom recordings, facilitating student review and teacher assessment.

Features

Context Awareness: Improves recognition accuracy by drawing on conversational history, agent names, and agent descriptions.

Multifield Adaptability: Provides accurate speech recognition services in various fields such as business, education, and entertainment.

Multilingual Support: Capable of recognizing speech in multiple languages, including Chinese and English.

Dialect Recognition: Able to recognize various Chinese dialects, including Wu, Cantonese, and Sichuanese.

Error Self-Correction: User modifications to subtitles can serve as recognition cues, avoiding repeated errors in subsequent videos.

Background Noise Robustness: Maintains high recognition accuracy even in noisy environments.

How to Use

Step 1: Visit the official Seed-ASR website or download the relevant app.

Step 2: Register and log into your account, then choose the appropriate service plan as needed.

Step 3: Upload the audio files you want to transcribe or conduct live speech recognition directly.

Step 4: Set recognition parameters, such as selecting the language and dialect.

Step 5: Initiate the recognition process and wait for Seed-ASR to process the audio data.

Step 6: Check the recognition results and edit or correct them as necessary.

Step 7: Export or utilize the transcribed text data for further analysis or record-keeping.
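Step 7 leaves the export format open. As one possibility, the sketch below writes a transcript as a SubRip (SRT) subtitle file. It assumes the recognition results are available as timed segments of the form `(start_seconds, end_seconds, text)`; that structure and the function names are hypothetical, since this page does not describe Seed-ASR's actual output format.

```python
from typing import Iterable, Tuple

# Hypothetical segment shape: (start time in seconds, end time in seconds, text).
Segment = Tuple[float, float, str]


def format_timestamp(seconds: float) -> str:
    """Render seconds as an SRT timestamp, HH:MM:SS,mmm."""
    total_ms = int(round(seconds * 1000))
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def segments_to_srt(segments: Iterable[Segment]) -> str:
    """Build SRT text: a numbered cue, a time range, then the cue text."""
    blocks = []
    for index, (start, end, text) in enumerate(segments, start=1):
        time_range = f"{format_timestamp(start)} --> {format_timestamp(end)}"
        blocks.append(f"{index}\n{time_range}\n{text.strip()}\n")
    # A blank line separates consecutive cues.
    return "\n".join(blocks)


if __name__ == "__main__":
    # Made-up sample segments, for illustration only.
    sample = [
        (0.0, 2.4, "Welcome to the weekly meeting."),
        (2.4, 5.0, "Let's review last week's action items."),
    ]
    with open("transcript.srt", "w", encoding="utf-8") as handle:
        handle.write(segments_to_srt(sample))
```

Plain-text export would drop the timestamps and join the segment texts; SRT is shown here because it keeps the timing needed for the subtitle use cases mentioned above.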

Featured AI Tools

OpenVoice

OpenVoice is an open-source voice cloning technology that can accurately replicate the tone color of a reference speaker and generate speech in various languages and accents. It offers flexible control over voice characteristics such as emotion and accent, and can adjust rhythm, pauses, and intonation. It achieves zero-shot cross-lingual voice cloning, meaning that neither the language of the generated speech nor that of the reference voice needs to be present in the training data.

Azure AI Studio Speech Services

Azure AI Studio is a suite of artificial intelligence services offered by Microsoft Azure, encompassing speech services. These services may include functions such as speech recognition, text-to-speech, and speech translation, enabling developers to incorporate voice-related intelligence into their applications.