PixelProse

Overview

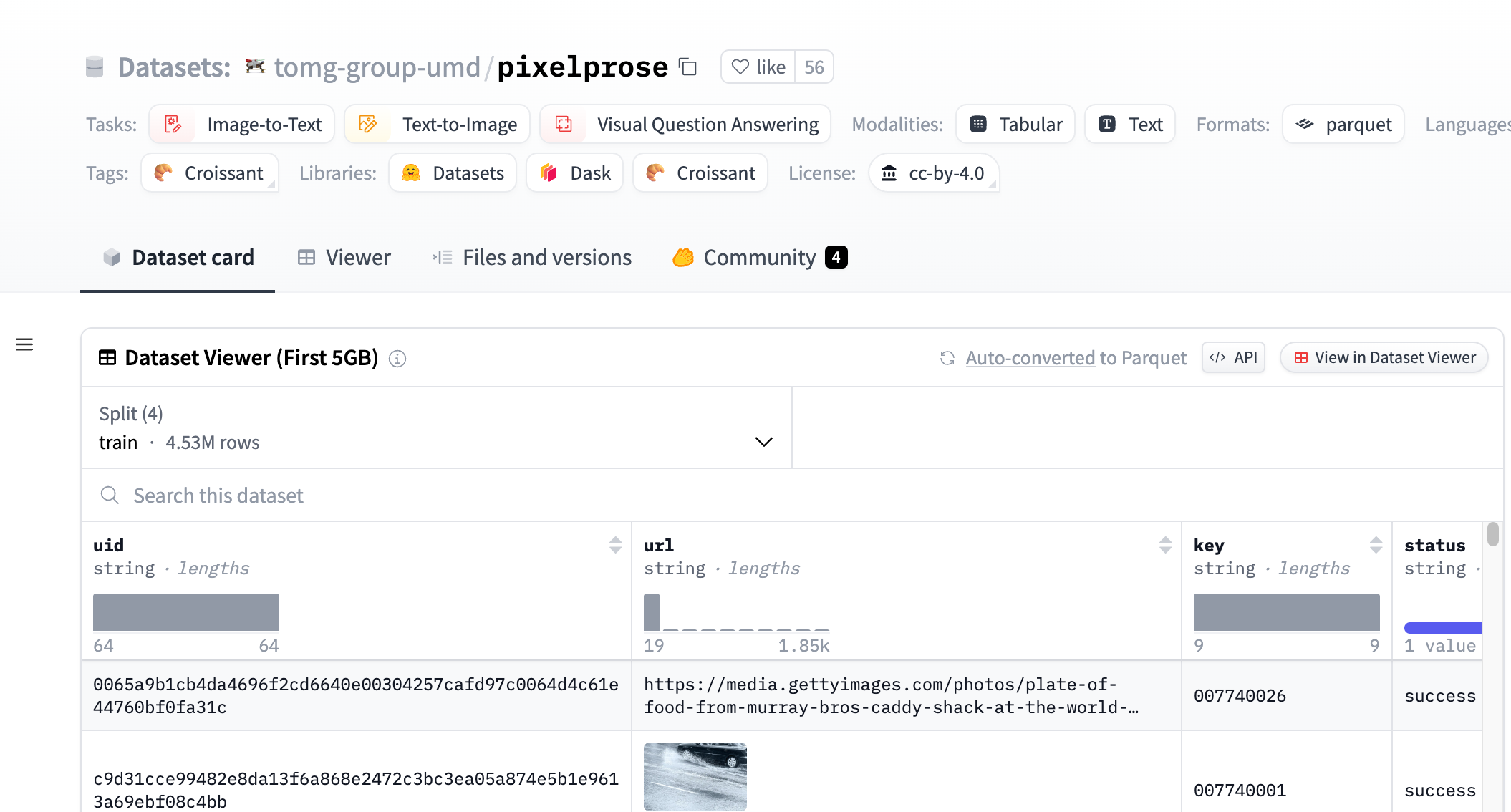

PixelProse, created by the tomg-group-umd, is a large-scale dataset containing over 16 million detailed image descriptions generated with the vision-language model Gemini 1.0 Pro Vision. The dataset supports the development and improvement of image-to-text technologies and can be used for tasks such as image captioning and visual question answering.

Target Users

This dataset is aimed at researchers and developers in the field of machine learning and artificial intelligence, particularly those specializing in image recognition, image captioning, and visual question answering systems. The scale and diversity of this dataset make it an ideal resource for training and testing these systems.

Use Cases

Researchers use the PixelProse dataset to train an image captioning model to automatically generate descriptions for pictures on social media.

Developers utilize this dataset to develop a visual question answering application capable of answering user questions about image content.

Educational institutions use PixelProse as a teaching resource to help students understand the fundamentals of image recognition and natural language processing.

Features

Provides over 16M image-text pairs.

Supports multiple tasks, such as image-to-text and text-to-image.

Covers multiple modalities, including tabular data and text.

Data is stored in parquet format, which common data-processing and machine learning tools can read directly.

Contains detailed image descriptions suitable for training complex vision-language models.

Dataset is divided into three parts: CommonPool, CC12M, and RedCaps.

Provides EXIF information and SHA256 hash values for images, which helps verify data integrity.
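The SHA256 values mentioned above can be used to check that a downloaded image has not changed since the dataset was built. The following is a minimal sketch using only the Python standard library. It assumes the recorded hash is a hex string computed over the raw image bytes; the function names are hypothetical and not part of the dataset's tooling.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA256 digest of raw bytes as a lowercase hex string."""
    return hashlib.sha256(data).hexdigest()


def matches_recorded_hash(data: bytes, recorded_hex: str) -> bool:
    """Compare downloaded bytes against the hash stored with a dataset row.

    Assumption: the recorded value is a hex digest of the raw image bytes.
    """
    # Normalize case and surrounding whitespace so formatting differences
    # in the stored value do not cause false mismatches.
    return sha256_hex(data) == recorded_hex.strip().lower()
```

An image whose bytes no longer match the recorded hash has likely been replaced or re-encoded at its source URL, so it may no longer correspond to its description.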

How to Use

Step 1: Visit the Hugging Face website and search for the PixelProse dataset.

Step 2: Choose an appropriate download method, such as Git LFS, the Hugging Face API, or a direct download of the parquet files.

Step 3: Use the URLs in the parquet files to download the corresponding images.

Step 4: Load the dataset and preprocess it according to research or development needs.

Step 5: Train or test a vision-language model using the dataset.

Step 6: Evaluate model performance and adjust model parameters as needed.

Step 7: Apply the trained model to real-world problems or further research.
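Step 3 can be sketched as a small download loop. This is an illustrative sketch, not the dataset authors' tooling: it assumes each row is a mapping with a `url` key (a hypothetical column name; check the actual parquet schema), and the helper names `fetch_url` and `download_images` are made up for this example. Failed downloads are skipped because linked images can disappear over time.

```python
import hashlib
import urllib.request
from pathlib import Path
from typing import Callable, Iterable, Mapping


def fetch_url(url: str, timeout: float = 10.0) -> bytes:
    """Download raw bytes from a URL using only the standard library."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read()


def download_images(
    rows: Iterable[Mapping[str, str]],
    out_dir: Path,
    fetch: Callable[[str], bytes] = fetch_url,
) -> list[Path]:
    """Save the image for each row and return the paths that were written.

    Assumption: every row carries a "url" key (hypothetical column name).
    """
    out_dir.mkdir(parents=True, exist_ok=True)
    saved = []
    for row in rows:
        try:
            data = fetch(row["url"])
        except (OSError, ValueError):
            # Dead link, timeout, or malformed URL: skip this row.
            continue
        # Name each file by the hash of its content so identical
        # images downloaded twice end up in a single file.
        path = out_dir / f"{hashlib.sha256(data).hexdigest()}.img"
        path.write_bytes(data)
        saved.append(path)
    return saved
```

The `rows` argument can be any iterable of mappings, for example the records obtained from `pandas.read_parquet(path).to_dict("records")` if pandas and a parquet engine are installed. Passing a custom `fetch` function makes it possible to add retries or rate limiting without changing the loop.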
