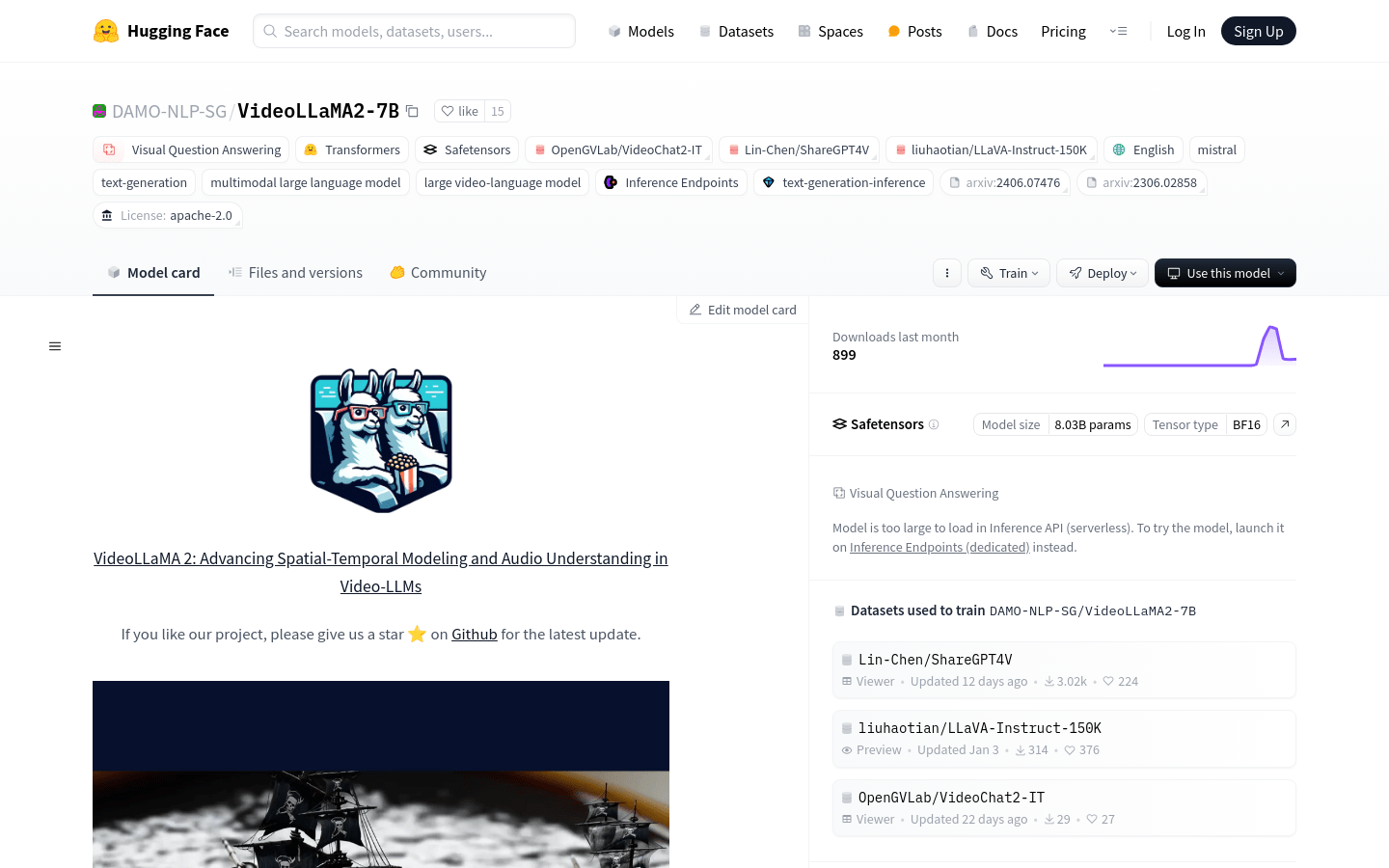

VideoLLaMA2-7B

Overview

Developed by the DAMO-NLP-SG team, VideoLLaMA2-7B is a multimodal large language model for video understanding: it takes video as input and produces natural-language output. It performs strongly on video question answering and video captioning, handling complex video content and generating accurate, natural descriptions. Its design emphasizes spatio-temporal modeling and audio understanding, which supports automated analysis and processing of video content.

Target Users

VideoLLaMA2-7B is primarily designed for researchers and developers who need to analyze and understand video content in depth, for example in video recommendation systems, intelligent surveillance, and autonomous driving. It helps users extract useful information from videos and make decisions faster.

Use Cases

Automatically generate engaging captions for user-uploaded videos on social media platforms.

In the education sector, provide interactive question-answering features for teaching videos, enhancing the learning experience.

In security surveillance, quickly pinpoint key events through video question answering, improving response times.

Features

Video Question Answering: The model can understand video content and answer related questions.

Video Captioning: Automatically generates descriptive captions for videos.

Spatio-temporal Modeling: Improves the model's understanding of how objects move and how events unfold over the course of a video.

Audio Understanding: Enhances the model's ability to parse audio information in videos.

Multimodal Interaction: Provides a richer interactive experience by combining visual and linguistic information.

Model Inference: Supports efficient model inference on dedicated inference endpoints.
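Spatio-temporal modeling matters partly because video multiplies the number of visual tokens a language model has to read: every sampled frame contributes a full grid of patch tokens. The sketch below only does that bookkeeping, counting tokens before and after a strided downsampling over time and space. The 8-frame clip, the 24×24 patch grid, and the stride of 2 in every dimension are illustrative assumptions, not VideoLLaMA2-7B's documented configuration, and `VideoTokenGrid` and `downsample` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class VideoTokenGrid:
    """Hypothetical shape of the visual-token grid for one clip."""
    frames: int  # T: number of sampled frames
    height: int  # H: patch rows per frame
    width: int   # W: patch columns per frame

    def num_tokens(self) -> int:
        # Every (frame, row, column) cell becomes one token for the language model.
        return self.frames * self.height * self.width


def downsample(grid: VideoTokenGrid, t_stride: int, s_stride: int) -> VideoTokenGrid:
    """Grid size after a strided spatio-temporal downsampling step.

    Ceiling division means a leftover partial window at an edge still yields
    one token, as it would if the input were padded to a multiple of the stride.
    The strides are illustrative assumptions, not the model's published settings.
    """
    def ceil_div(n: int, d: int) -> int:
        return -(-n // d)

    return VideoTokenGrid(
        frames=ceil_div(grid.frames, t_stride),
        height=ceil_div(grid.height, s_stride),
        width=ceil_div(grid.width, s_stride),
    )


if __name__ == "__main__":
    # Assumed example: 8 frames, each encoded as a 24x24 patch grid.
    raw = VideoTokenGrid(frames=8, height=24, width=24)
    pooled = downsample(raw, t_stride=2, s_stride=2)
    print(raw.num_tokens(), "->", pooled.num_tokens())
```

Under these assumed numbers, halving each of the three dimensions cuts the token count by a factor of 8, which is the kind of saving that makes longer clips affordable for the language model.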

How to Use

Step 1: Visit the VideoLLaMA2-7B model page on Hugging Face.

Step 2: Download or clone the model's code repository and set up the environment needed for training and inference.

Step 3: Following the provided example code, load the pre-trained model and configure it.

Step 4: Prepare the video data and apply any necessary preprocessing, such as frame extraction and resizing.

Step 5: Use the model for video question answering or captioning, retrieve the results, and evaluate them.

Step 6: Adjust model parameters as needed to optimize performance.

Step 7: Integrate the model into real-world applications to achieve automated video content analysis.
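Step 4 is the most mechanical part of this workflow. The following is a minimal pure-Python sketch of its index and size arithmetic; it does not decode any video. It assumes uniform sampling of a fixed number of frames (8 in the example) and a pad-to-square resize with a 336-pixel target. Both values, and the helper names `sample_frame_indices` and `fit_and_pad`, are hypothetical, so check the model's own processor for the frame count and resolution it actually expects.

```python
from typing import List, Tuple


def sample_frame_indices(total_frames: int, num_frames: int) -> List[int]:
    """Pick `num_frames` frame indices spread evenly across a clip.

    Takes the midpoint of each of `num_frames` equal-length segments, so the
    very first and last frames are not over-weighted. If the clip has no more
    than `num_frames` frames, every frame is returned once.
    """
    if total_frames <= 0 or num_frames <= 0:
        raise ValueError("total_frames and num_frames must be positive")
    if total_frames <= num_frames:
        return list(range(total_frames))
    segment = total_frames / num_frames
    return [int(segment * i + segment / 2) for i in range(num_frames)]


def fit_and_pad(width: int, height: int, target: int) -> Tuple[int, int, int, int]:
    """Assumed pad-to-square policy: scale a frame to fit inside a
    target x target square while keeping its aspect ratio.

    Returns (new_w, new_h, pad_x, pad_y), where pad_x and pad_y are the total
    horizontal and vertical padding needed to reach the square.
    Integer arithmetic with round-half-up keeps results deterministic.
    """
    if width <= 0 or height <= 0 or target <= 0:
        raise ValueError("dimensions must be positive")
    longest = max(width, height)
    new_w = max(1, (width * target + longest // 2) // longest)
    new_h = max(1, (height * target + longest // 2) // longest)
    return new_w, new_h, target - new_w, target - new_h


if __name__ == "__main__":
    # Hypothetical clip: 300 frames of 1920x1080 video, 8 frames kept.
    print(sample_frame_indices(300, 8))
    print(fit_and_pad(1920, 1080, 336))
```

In a real pipeline the returned indices would be handed to a video decoder (for example OpenCV or decord) and the sizes to an image-resize call; that wiring is left out so the arithmetic stays self-contained and testable.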
