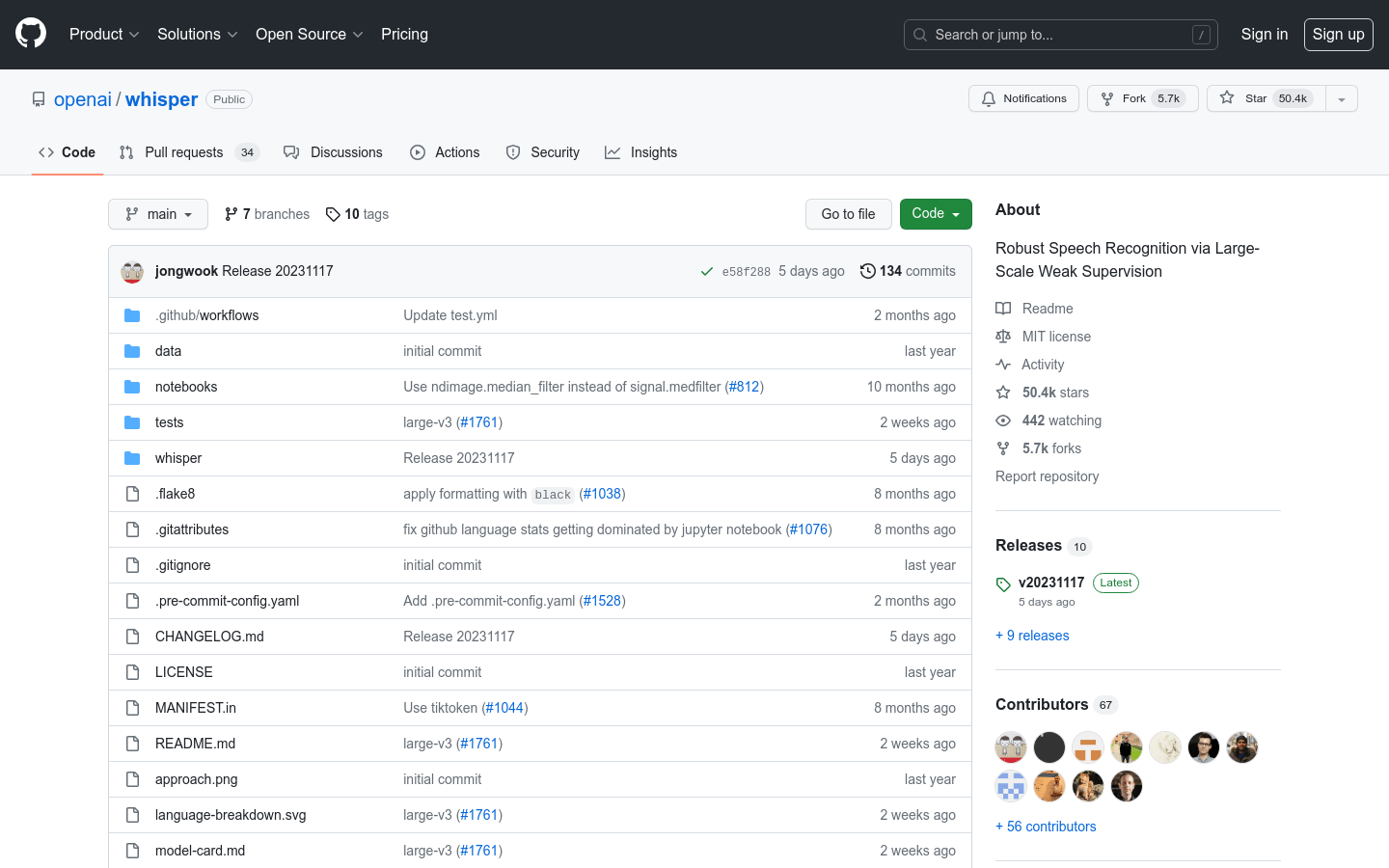

Whisper

Overview:

Whisper is a general-purpose speech recognition model. Trained on a large and diverse audio dataset, it is a multitask model that can perform multilingual speech recognition, speech translation, and language identification.

Target Users:

Suitable for applications that need speech recognition, such as voice assistants and audio transcription.

Features

Multilingual Speech Recognition

Speech Translation

Language Identification
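The three features above can be exercised with the open-source `openai-whisper` Python package. The sketch below is a minimal illustration, assuming the package and ffmpeg are installed and that `audio.mp3` is a placeholder for a local file. The helper names `top_language` and `run_whisper_tasks` are hypothetical. It defaults to the multilingual `small` checkpoint because, according to the project README, the `turbo` checkpoint is not trained for translation.

```python
from typing import Dict


def top_language(probs: Dict[str, float]) -> str:
    """Return the language code with the highest detection probability."""
    return max(probs, key=probs.get)


def run_whisper_tasks(path: str, model_name: str = "small") -> Dict[str, str]:
    """Transcribe, translate to English, and identify the language of one file.

    Assumes the `openai-whisper` package and ffmpeg are installed.
    """
    import whisper  # imported lazily so top_language works without the package

    model = whisper.load_model(model_name)

    # Multilingual speech recognition: transcript in the spoken language.
    transcript = model.transcribe(path)["text"]

    # Speech translation: English text from non-English speech.
    translation = model.transcribe(path, task="translate")["text"]

    # Language identification on the first 30 seconds of audio.
    audio = whisper.pad_or_trim(whisper.load_audio(path))
    mel = whisper.log_mel_spectrogram(audio, n_mels=model.dims.n_mels).to(model.device)
    _, probs = model.detect_language(mel)

    return {
        "transcript": transcript,
        "translation": translation,
        "language": top_language(probs),
    }


if __name__ == "__main__":
    import os

    if os.path.exists("audio.mp3"):  # placeholder file name
        print(run_whisper_tasks("audio.mp3"))
```

Language identification here only looks at the first 30 seconds (`pad_or_trim`), mirroring the README's own example; `transcribe` still processes the whole file.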

Traffic Sources

| Source | Share of Traffic |
|---|---|
| Direct Visits | 51.61% |
| External Links | 33.46% |
|  | 0.04% |
| Organic Search | 12.58% |
| Social Media | 2.19% |
| Display Ads | 0.11% |

Latest Traffic Situation

| Metric | Value |
|---|---|
| Monthly Visits | 4.92m |
| Average Visit Duration | 393.01 |
| Pages Per Visit | 6.11 |
| Bounce Rate | 36.20% |

Geographic Traffic Distribution

| Country | Share of Traffic |
|---|---|
| United States | 19.34% |
| China | 13.25% |
| India | 9.32% |
| Russia | 4.28% |
| Germany | 3.63% |

Similar Open Source Products

AsrTools

AsrTools is an AI-powered speech-to-text tool that calls the interfaces of major ASR services to provide efficient speech recognition without requiring a GPU or complex configuration. It supports batch processing and multithreading, so audio files can be converted quickly into SRT subtitles or TXT files. Its user interface, built with PyQt5 and qfluentwidgets, is attractive and easy to navigate. Key advantages include stable integration with major service interfaces, no complex setup, and flexible output formats. AsrTools suits users who need to turn speech into text quickly, especially in video production, audio editing, and subtitle generation. It currently lets users access major ASR services for free, significantly reducing costs and improving workflow efficiency for individuals and small teams.

AI speech to text
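AsrTools' own export code is not reproduced here. As an illustration of the SRT and TXT outputs it produces, the sketch below renders a list of `(start_seconds, end_seconds, text)` segments, the kind of result an ASR service returns, into both formats. The segment tuple layout and the function names are hypothetical.

```python
from typing import List, Tuple

Segment = Tuple[float, float, str]  # (start seconds, end seconds, text)


def srt_timestamp(seconds: float) -> str:
    """Format seconds as an SRT timestamp: HH:MM:SS,mmm."""
    total_ms = int(round(seconds * 1000))
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def segments_to_srt(segments: List[Segment]) -> str:
    """Render segments as numbered SRT cues separated by blank lines."""
    cues = []
    for index, (start, end, text) in enumerate(segments, start=1):
        cues.append(f"{index}\n{srt_timestamp(start)} --> {srt_timestamp(end)}\n{text.strip()}\n")
    return "\n".join(cues)


def segments_to_txt(segments: List[Segment]) -> str:
    """Plain-text output: one line per segment, timing discarded."""
    return "\n".join(text.strip() for _, _, text in segments)


if __name__ == "__main__":
    demo = [(0.0, 2.5, "Hello."), (2.5, 5.04, "World.")]
    print(segments_to_srt(demo))
    print(segments_to_txt(demo))
```

SRT cues are 1-indexed and use a comma before the milliseconds, which is why the timestamp is built by hand rather than with `datetime` formatting.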

Fresh Picks

Whisper Large V3 Turbo

Whisper large-v3-turbo is an advanced automatic speech recognition (ASR) and speech translation model released by OpenAI. It is trained on over 5 million hours of labeled data and generalizes to many datasets and domains in a zero-shot setting. It is a fine-tuned version of a pruned Whisper large-v3: the number of decoder layers is reduced from 32 to 4, which makes it much faster at the cost of a slight drop in quality.

AI speech recognition
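The speed gain from removing decoder layers follows from how autoregressive decoding works: the encoder processes each 30-second window once, while the decoder runs once for every generated token. The toy count below makes that concrete. It assumes every layer costs the same and ignores caching and sequence-length effects, and the 100-token transcript length is an arbitrary assumption; only the layer counts (32 encoder layers, and 32 versus 4 decoder layers) reflect the actual models.

```python
def layer_evaluations(encoder_layers: int, decoder_layers: int, output_tokens: int) -> int:
    """Toy cost: the encoder runs once, the decoder runs once per generated token."""
    return encoder_layers + output_tokens * decoder_layers


TOKENS = 100  # assumed transcript length for one 30 s window

large_v3 = layer_evaluations(encoder_layers=32, decoder_layers=32, output_tokens=TOKENS)
turbo = layer_evaluations(encoder_layers=32, decoder_layers=4, output_tokens=TOKENS)

print(large_v3, turbo, round(large_v3 / turbo, 2))  # -> 3232 432 7.48
```

Actual speedups depend on hardware, batch size, and implementation, so treat this only as intuition for why the decoder is where the savings come from.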

OmniSenseVoice

OmniSenseVoice is an optimized speech recognition model based on SenseVoice, designed for rapid inference and accurate timestamps, providing a smarter and faster way to transcribe audio.

AI speech recognition

CrisperWhisper

CrisperWhisper is an advanced variant of OpenAI's Whisper model designed for fast, accurate, verbatim speech recognition with precise word-level timestamps. Unlike the original Whisper model, CrisperWhisper aims to transcribe every spoken word, including filler words, pauses, stutters, and false starts. It ranks first on the OpenASR Leaderboard for verbatim datasets such as TED and AMI, and its paper was accepted at INTERSPEECH 2024.

AI speech recognition

Fresh Picks

Seed-ASR

Seed-ASR is a speech recognition model from ByteDance built on large language models (LLMs). It feeds continuous speech representations together with contextual information into the LLM; backed by large-scale training and context awareness, it substantially improves performance on comprehensive evaluation sets spanning multiple domains, accents/dialects, and languages. Compared with recently released large ASR models, Seed-ASR reduces error rates (word error rate, or character error rate for Chinese) by 10% to 40% on public Chinese and English test sets.

AI speech recognition

Whisper Diarization

whisper-diarization is an open-source project that combines Whisper's automatic speech recognition (ASR) with Voice Activity Detection (VAD) and speaker embeddings. It first extracts the vocals from the audio to improve speaker-embedding accuracy, then generates a transcription with Whisper and corrects and aligns its timestamps with WhisperX to reduce diarization errors caused by time offsets. Next, MarbleNet performs VAD and segmentation to exclude silence, and TitaNet extracts speaker embeddings to identify the speaker of each segment. Finally, these results are matched against the WhisperX timestamps to determine who spoke each word, and a punctuation model realigns the output to compensate for minor timing offsets.

AI speech recognition
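The final step, deciding which speaker said each word, amounts to matching word timestamps against speaker turns. A minimal sketch, assuming words and speaker turns are already available as plain tuples; this is not the project's actual code, and the `Word`/`Turn` types, the midpoint rule, and the nearest-turn fallback are hypothetical choices (the project itself realigns with a punctuation model instead).

```python
from typing import List, NamedTuple, Optional, Tuple


class Word(NamedTuple):
    text: str
    start: float  # seconds
    end: float


class Turn(NamedTuple):
    speaker: str
    start: float  # seconds
    end: float


def assign_speakers(words: List[Word], turns: List[Turn]) -> List[Tuple[str, Optional[str]]]:
    """Label each word with the speaker whose turn contains the word's midpoint.

    If the midpoint falls in a gap between turns (for example, silence that VAD
    removed), fall back to the turn whose nearest edge is closest.
    """
    labeled: List[Tuple[str, Optional[str]]] = []
    for word in words:
        mid = (word.start + word.end) / 2
        speaker: Optional[str] = None
        for turn in turns:
            if turn.start <= mid <= turn.end:
                speaker = turn.speaker
                break
        if speaker is None and turns:
            nearest = min(turns, key=lambda t: min(abs(mid - t.start), abs(mid - t.end)))
            speaker = nearest.speaker
        labeled.append((word.text, speaker))
    return labeled


if __name__ == "__main__":
    words = [Word("hi", 0.0, 0.4), Word("there", 0.5, 0.9), Word("ok", 1.05, 1.15), Word("hello", 1.6, 2.0)]
    turns = [Turn("SPEAKER_A", 0.0, 1.0), Turn("SPEAKER_B", 1.5, 3.0)]
    print(assign_speakers(words, turns))
```

Using the midpoint rather than the start time makes the assignment less sensitive to small timestamp offsets at turn boundaries.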

SenseVoiceSmall

SenseVoiceSmall is a speech foundation model with several speech understanding capabilities: automatic speech recognition (ASR), spoken language identification (LID), speech emotion recognition (SER), and audio event detection (AED). Trained on more than 400,000 hours of data, it supports more than 50 languages, and its recognition performance surpasses that of Whisper. As a small model with a non-autoregressive end-to-end framework, it has extremely low inference latency: it processes 10 seconds of audio in only 70 milliseconds, 15 times faster than Whisper-Large. SenseVoice also provides convenient fine-tuning scripts and strategies and supports service deployment pipelines that handle concurrent requests, with clients available in Python, C++, HTML, Java, and C#.

AI speech recognition
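The latency figures above can be expressed as a real-time factor (RTF: processing time divided by audio duration; below 1 means faster than real time). A quick check using only the numbers quoted above; the Whisper-Large time is inferred from the stated 15x ratio, not measured.

```python
AUDIO_SECONDS = 10.0
SENSEVOICE_SMALL_SECONDS = 0.070  # quoted: 70 ms per 10 s of audio
SPEEDUP_VS_WHISPER_LARGE = 15     # quoted: 15x faster than Whisper-Large


def real_time_factor(processing_seconds: float, audio_seconds: float) -> float:
    """Processing time divided by audio duration."""
    return processing_seconds / audio_seconds


# Inferred from the quoted ratio, roughly 1.05 s for the same 10 s clip.
whisper_large_seconds = SENSEVOICE_SMALL_SECONDS * SPEEDUP_VS_WHISPER_LARGE

print(f"SenseVoiceSmall RTF: {real_time_factor(SENSEVOICE_SMALL_SECONDS, AUDIO_SECONDS):.3f}")  # 0.007
print(f"Implied Whisper-Large RTF: {real_time_factor(whisper_large_seconds, AUDIO_SECONDS):.3f}")  # 0.105
```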

Emilia

Emilia is an open-source, multilingual, in-the-wild speech dataset designed for large-scale speech generation research. It contains over 101,000 hours of high-quality speech in six languages with corresponding text transcriptions, covering a variety of speaking styles and content types such as stand-up comedy, interviews, debates, sports commentary, and audiobooks.

AI speech recognition

SenseVoice

SenseVoice is a speech foundation model with multiple speech understanding capabilities, including Automatic Speech Recognition (ASR), Language Identification (LID), Speech Emotion Recognition (SER), and Audio Event Detection (AED). It focuses on high-accuracy multilingual speech recognition, speech emotion recognition, and audio event detection, supports over 50 languages, and exceeds the recognition performance of Whisper. Its SenseVoice-Small model uses a non-autoregressive end-to-end framework, which yields extremely low inference latency and makes it well suited to real-time speech processing.

AI speech recognition
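To make the autoregressive/non-autoregressive distinction concrete: a non-autoregressive decoder decides a label for every audio frame in one parallel pass instead of generating tokens one at a time. The sketch below shows greedy CTC decoding, a common non-autoregressive scheme; the vocabulary and frame scores are made up, and it illustrates the general idea rather than SenseVoice's actual decoder.

```python
from typing import List, Sequence

BLANK = 0  # index of the CTC blank symbol in the vocabulary (assumed for this sketch)


def ctc_greedy_decode(frame_scores: Sequence[Sequence[float]], vocab: List[str]) -> str:
    """Greedy CTC decoding: best label per frame, collapse repeats, drop blanks.

    Each frame's argmax depends only on that frame's scores, so every frame can
    be decided in one parallel pass; no output token waits on an earlier one.
    """
    best = [max(range(len(scores)), key=lambda i: scores[i]) for scores in frame_scores]
    output = []
    previous = None
    for label in best:
        if label != previous and label != BLANK:
            output.append(vocab[label])
        previous = label
    return "".join(output)


VOCAB = ["<blank>", "h", "i"]
SCORES = [
    [0.1, 0.8, 0.1],    # "h"
    [0.2, 0.7, 0.1],    # "h" again, collapsed into the previous frame
    [0.9, 0.05, 0.05],  # blank
    [0.1, 0.1, 0.8],    # "i"
]

if __name__ == "__main__":
    print(ctc_greedy_decode(SCORES, VOCAB))  # -> hi
```

The blank symbol is what lets CTC emit a genuinely repeated character: "h", blank, "h" decodes to "hh", whereas two adjacent "h" frames collapse to one.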

Alternatives

English Picks

Realtime API

The Realtime API, launched by OpenAI, is a low-latency voice interaction API that lets developers build fast speech-to-speech experiences into their applications. It supports natural speech-to-speech conversation and handles interruptions, similar to ChatGPT's Advanced Voice Mode. It runs over a WebSocket connection and supports function calling, so voice assistants can respond to user requests, trigger actions, or pull in new context. With this API, developers no longer need to chain multiple models to build a voice experience; a single API handles the natural conversational interaction.

AI speech recognition
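Over the WebSocket connection, audio and control messages travel as JSON events. A minimal, network-free sketch of building such events, assuming the `input_audio_buffer.append`, `input_audio_buffer.commit`, and `response.create` event types and the default 24 kHz, 16-bit mono little-endian PCM input format described in OpenAI's Realtime API reference at launch; check the current reference before relying on these names. The helper functions are hypothetical.

```python
import base64
import json
import math
import struct
from typing import List

SAMPLE_RATE = 24_000  # assumed default for the "pcm16" input audio format


def pcm16_bytes(samples: List[float]) -> bytes:
    """Convert floats in [-1.0, 1.0] to 16-bit little-endian PCM (clipping out-of-range values)."""
    clipped = (max(-1.0, min(1.0, s)) for s in samples)
    ints = [int(round(s * 32767)) for s in clipped]
    return struct.pack(f"<{len(ints)}h", *ints)


def append_audio_event(samples: List[float]) -> str:
    """JSON text for an input_audio_buffer.append event; audio is base64-encoded PCM."""
    audio_b64 = base64.b64encode(pcm16_bytes(samples)).decode("ascii")
    return json.dumps({"type": "input_audio_buffer.append", "audio": audio_b64})


def commit_and_respond_events() -> List[str]:
    """Commit buffered audio, then request a response.

    Assumed to be needed only when server-side voice activity detection is off;
    with it on, the server commits and responds on its own.
    """
    return [
        json.dumps({"type": "input_audio_buffer.commit"}),
        json.dumps({"type": "response.create"}),
    ]


if __name__ == "__main__":
    # 100 ms of a 440 Hz tone as stand-in microphone input.
    tone = [0.2 * math.sin(2 * math.pi * 440 * n / SAMPLE_RATE) for n in range(SAMPLE_RATE // 10)]
    event = append_audio_event(tone)
    print(json.loads(event)["type"], len(pcm16_bytes(tone)), "bytes of PCM")
```

In a real client, each string would be sent as one WebSocket text message, with audio streamed in small chunks rather than one large event.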

Fresh Picks

Deepgram Voice Agent API

The Deepgram Voice Agent API is a unified voice-to-voice API that enables natural-sounding conversations between humans and machines. This API is backed by industry-leading speech recognition and synthesis models that allow for natural and real-time listening, thinking, and speaking. Deepgram is committed to advancing a voice-first AI future through its agent API, integrating cutting-edge generative AI technology to create business solutions with smooth, human-like speech agents.

AI speech recognition

Chinese Picks

Xincheng Lingo Voice Model

The Xincheng Lingo Voice Model is an advanced artificial intelligence voice model, focusing on providing efficient and accurate voice recognition and processing services. It understands and processes natural language, making human-computer interaction smoother and more natural. Built on the powerful AI technology of Xihu Xincheng, this model aims to deliver high-quality voice interaction experiences across various scenarios.

AI speech recognition

Featured AI Tools

OpenVoice

OpenVoice is an open-source voice cloning technology that accurately replicates the voice of a reference speaker and can generate speech in multiple languages and accents. It offers flexible control over voice style, such as emotion and accent, as well as rhythm, pauses, and intonation. It also achieves zero-shot cross-lingual voice cloning: neither the language of the generated speech nor the language of the reference speech needs to be present in the training data.

AI speech recognition

2.4M

Azure AI Studio Speech Services

Azure AI Studio is a suite of artificial intelligence services from Microsoft Azure that includes speech services. These provide functions such as speech recognition, text-to-speech, and speech translation, enabling developers to add voice capabilities to their applications.

AI speech recognition

271.0K