Thunder Compute

Overview

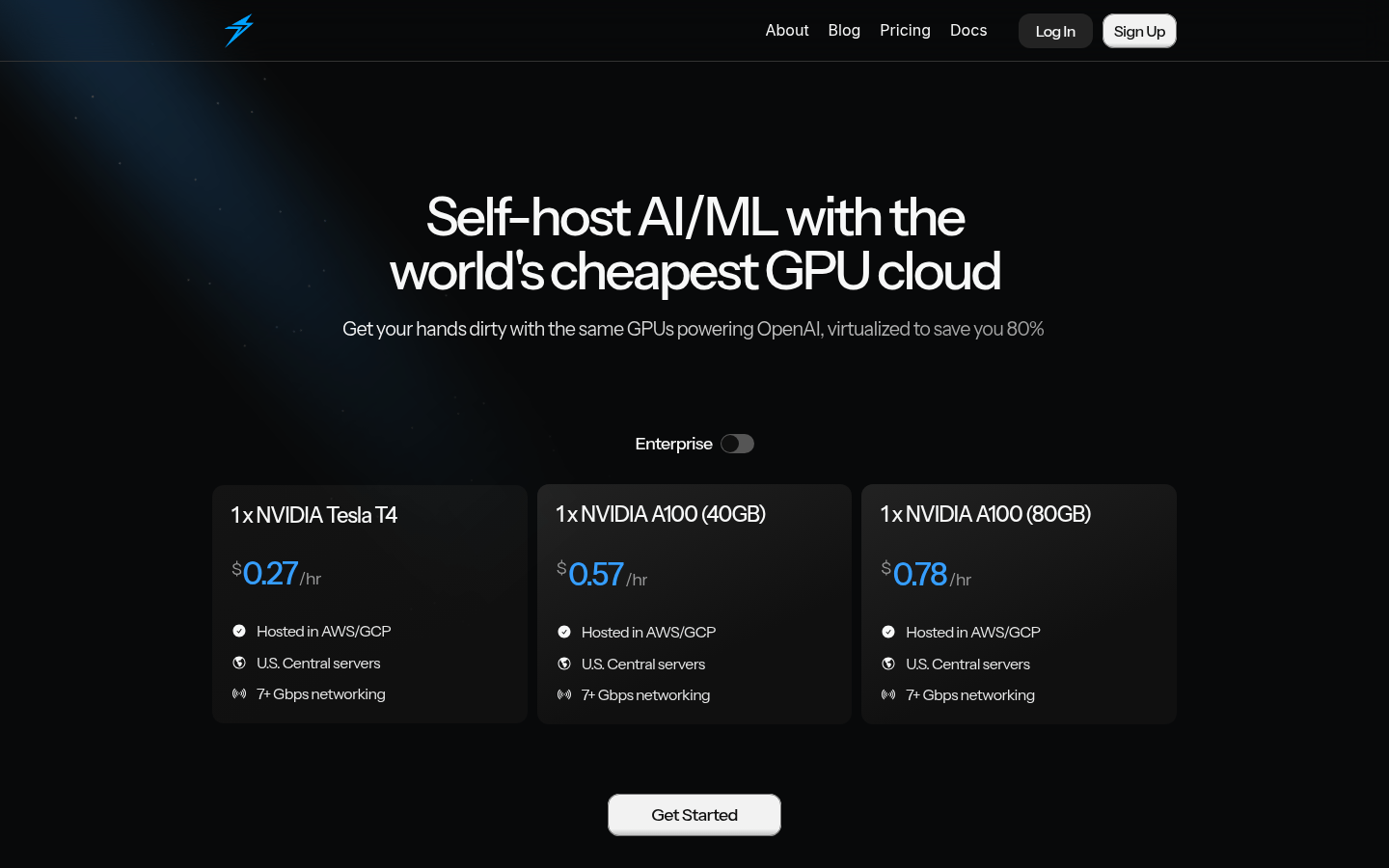

Thunder Compute is a GPU cloud platform focused on AI/ML development. It uses virtualization to give users access to high-performance GPUs at low cost. Its main selling point is price: it claims savings of up to 80% compared with traditional cloud providers. The platform supports mainstream GPU models such as the NVIDIA Tesla T4 and A100 and provides 7+ Gbps network connectivity for fast data transfer. Thunder Compute aims to lower hardware costs for AI developers and enterprises, speed up model training and deployment, and make AI technology more widely accessible.

Target Users

The product is aimed primarily at AI developers, data scientists, machine learning engineers, and enterprises that need high-performance computing resources. It suits teams that want to cut hardware costs, set up AI development environments quickly, and speed up model training and deployment. Its low price and flexible deployment options make it especially attractive to startups and research teams with limited budgets.

Use Cases

User @userdotrandom said that creating an instance and then installing and running the Ollama template on Thunder Compute was very simple.

Dr. Jung Hoon Son stated that transcribing 41 hours of video using an A100 GPU cost only $6.

KronosAI CEO Yujie Huang said that setting up environments for engineers was simpler on Thunder Compute than on AWS and saved significant costs.

Features

Offers a range of high-performance GPU models to suit different AI/ML development needs.

Uses virtualization to reduce costs, saving up to 80% compared with traditional cloud services.

Supports rapid creation of GPU instances and one-click deployment of commonly used tools such as Ollama and ComfyUI.

Provides a simple user interface and command-line tools for creating, managing, and monitoring instances.

7+ Gbps network connectivity provides fast, stable data transfer.

Instance templates simplify setting up AI/ML development environments.

Supports hosting on mainstream cloud platforms such as AWS and GCP, offering flexible deployment options.

Provides detailed documentation and community support to help users get started quickly and resolve problems.
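The "up to 80%" figure is a ratio of hourly prices, so it is easy to sanity-check against your own workload. The sketch below compares two hourly GPU rates and estimates the cost of a run. The `Rate` class, the function names, and the example prices are all hypothetical; they are not Thunder Compute's published prices, and real bills may include other charges (storage, for instance).

```python
from dataclasses import dataclass


@dataclass
class Rate:
    """A hypothetical hourly price for one GPU instance."""
    name: str
    usd_per_hour: float


def run_cost(rate: Rate, hours: float) -> float:
    """Cost of keeping one instance running for `hours`, billed per hour of uptime."""
    return rate.usd_per_hour * hours


def savings_fraction(cheaper: Rate, reference: Rate) -> float:
    """Fraction saved by using `cheaper` instead of `reference` for the same number of hours."""
    if reference.usd_per_hour <= 0:
        raise ValueError("reference rate must be positive")
    return 1.0 - cheaper.usd_per_hour / reference.usd_per_hour
```

With a hypothetical reference rate of $4.00/hour and a cheaper rate of $0.80/hour, `savings_fraction` gives 0.8 (an 80% saving), and a 10-hour run costs $8.00 instead of $40.00. Because both runs use the same hours, the saving depends only on the ratio of the two rates.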

How to Use

Visit the Thunder Compute website, register, and log in.

Select the appropriate GPU model and configuration, and create a GPU instance.

Connect to the instance via the command-line tools or the console.

Use instance templates to quickly install and configure necessary AI tools (such as Ollama).

Begin running model training or AI development tasks.

Monitor the instance's running status via the console or command-line tools.

Adjust the instance configuration or scale up resources as needed.

After completing tasks, release the instance so you are not billed for idle time.
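Once an instance is running the Ollama template, tasks can be sent to the model from Python over Ollama's local REST API. This is a minimal sketch using only the standard library. It assumes Ollama is listening on its default port 11434 on the instance and that the model you name (the placeholder `llama3.2` below) has already been pulled, for example with `ollama pull llama3.2`. The helper names `build_request` and `generate` are illustrative and are not part of Thunder Compute or Ollama.

```python
import json
import urllib.request

# Assumption: Ollama's default local endpoint; adjust host/port if your setup differs.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str, url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the local Ollama server and return the generated text."""
    req = build_request(model, prompt)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With "stream": False, Ollama returns one JSON object whose text is in "response".
    return body["response"]
```

For example, `generate("llama3.2", "Summarize this log file in one sentence.")` returns the model's reply as a string. Setting `"stream": False` asks Ollama for a single JSON object instead of a stream of partial responses, which keeps the parsing simple at the cost of waiting for the full answer.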
