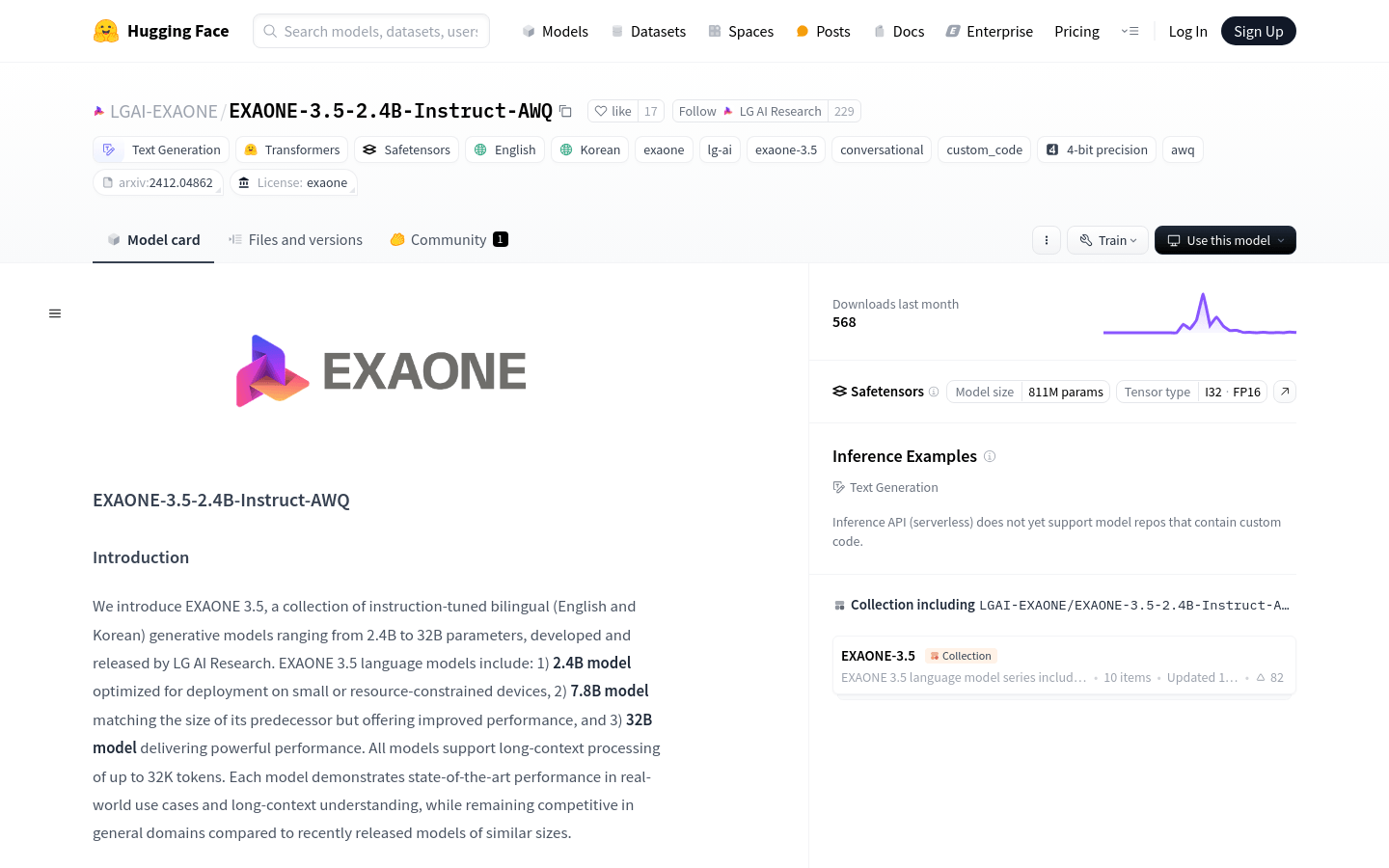

EXAONE 3.5 2.4B Instruct AWQ

Overview

EXAONE 3.5 is a series of bilingual (English and Korean) instruction-tuned generative models developed by LG AI Research, available in 2.4B, 7.8B, and 32B parameter sizes. The models support long-context processing of up to 32K tokens. LG AI Research reports state-of-the-art performance on real-world use cases and long-context understanding, with results that remain competitive in general domains against recently released models of similar size. EXAONE-3.5-2.4B-Instruct-AWQ is the 2.4B model of the series. It is optimized for deployment on small or resource-constrained devices and is quantized with AWQ to 4-bit group-wise weights (W4A16g128: 4-bit weights, 16-bit activations, groups of 128 weights).
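The storage savings from W4A16g128 can be estimated with simple arithmetic. The sketch below is a rough estimate under stated assumptions, not a measurement of the released checkpoint: it assumes all 2.4B parameters (the nominal count from the model name) are quantized, and that each group of 128 weights carries one 16-bit scale and one 4-bit zero point. Real checkpoints differ, for example because embedding and output layers are often kept in 16-bit, and runtime memory for activations (the 16-bit "A16" part) and the KV cache comes on top. The function names are hypothetical.

```python
"""Back-of-the-envelope weight-storage estimate for W4A16g128 (assumptions inline)."""


def fp16_weight_bytes(n_params: float) -> float:
    """Unquantized baseline: 2 bytes (16 bits) per weight."""
    return n_params * 2


def grouped_int4_weight_bytes(n_params: float, w_bits: int = 4, group_size: int = 128,
                              scale_bits: int = 16, zero_bits: int = 4) -> float:
    """Assumes every weight is quantized and each group stores one scale and one zero point."""
    n_groups = n_params / group_size
    total_bits = n_params * w_bits + n_groups * (scale_bits + zero_bits)
    return total_bits / 8


n = 2.4e9  # nominal parameter count taken from the model name
print(f"FP16       : {fp16_weight_bytes(n) / 1e9:.2f} GB")
print(f"W4 g128    : {grouped_int4_weight_bytes(n) / 1e9:.2f} GB")
print(f"bits/weight: {grouped_int4_weight_bytes(n) * 8 / n:.3f}")
```

Under these assumptions the per-group overhead is 20 bits per 128 weights, about 4.16 bits per weight on average, so weight storage falls to roughly a quarter of FP16 (about 1.25 GB versus 4.8 GB).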

Target Users

The target audience includes developers and researchers who need to deploy high-performance language models on resource-constrained devices, as well as NLP application developers who work with long texts or need both English and Korean support. Because of its compact, quantized footprint and long-context ability, EXAONE-3.5-2.4B-Instruct-AWQ is particularly suited to deployment on mobile devices and in edge computing environments.

Use Cases

Generating dialogue responses in English and Korean.

Providing language model services on resource-constrained mobile devices.

Serving as a tool for long-text processing and analysis in research and business intelligence.

Features

Supports long-context processing of up to 32K tokens.

Optimized 2.4B model suitable for deployment on resource-constrained devices.

The 7.8B model matches the size of its predecessor while improving performance.

The 32B model delivers the highest performance in the series.

Supports both English and Korean languages.

AWQ quantization technology achieves 4-bit grouped weight quantization.

Supports deployment frameworks such as TensorRT-LLM and vLLM.
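To make "4-bit grouped weight quantization" concrete: each group of 128 consecutive weights shares one scale and one zero point, and every weight is stored as a 4-bit integer from 0 to 15. The sketch below uses plain round-to-nearest (RTN) group quantization, which is a swapped-in, simpler technique and not AWQ itself. AWQ additionally uses activation statistics from calibration data to choose per-channel scaling factors that protect the most salient weights before this kind of group-wise rounding. The function names are hypothetical.

```python
"""Toy round-to-nearest group quantization (NOT the full AWQ algorithm)."""
from typing import List, Tuple

Group = Tuple[List[int], float, int]  # (4-bit codes, scale, zero point)


def quantize_groups(weights: List[float], bits: int = 4, group_size: int = 128) -> List[Group]:
    """Asymmetric RTN quantization with one (scale, zero point) pair per group."""
    qmax = (1 << bits) - 1  # 15 for 4-bit codes
    groups: List[Group] = []
    for start in range(0, len(weights), group_size):
        g = weights[start:start + group_size]
        # Widen the range to include 0.0 so that zero is exactly representable.
        lo, hi = min(min(g), 0.0), max(max(g), 0.0)
        scale = (hi - lo) / qmax if hi > lo else 1.0  # all-zero group: any scale works
        zero = min(max(round(-lo / scale), 0), qmax)
        codes = [min(max(round(w / scale) + zero, 0), qmax) for w in g]
        groups.append((codes, scale, zero))
    return groups


def dequantize_groups(groups: List[Group]) -> List[float]:
    """Map codes back to approximate floats: w ~ (q - zero) * scale."""
    out: List[float] = []
    for codes, scale, zero in groups:
        out.extend((q - zero) * scale for q in codes)
    return out


if __name__ == "__main__":
    import random

    random.seed(0)
    w = [random.uniform(-1.0, 1.0) for _ in range(256)]  # two groups of 128
    groups = quantize_groups(w)
    w_hat = dequantize_groups(groups)
    err = max(abs(a - b) for a, b in zip(w, w_hat))
    print(f"groups={len(groups)}, max abs error={err:.4f}")
```

Each weight's reconstruction error is at most half of its group's scale, which is why small groups (128 here) keep 4-bit error manageable at the cost of storing more scales and zero points.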

How to Use

1. Install the necessary libraries such as transformers and autoawq.

2. Load the model and tokenizer using AutoModelForCausalLM and AutoTokenizer; the model ships custom modeling code, so pass trust_remote_code=True when loading it.

3. Prepare the input prompts, which can be in English or Korean.

4. Use the tokenizer.apply_chat_template method to apply the chat template to the messages and convert them into input IDs.

5. Call the model.generate method to generate text.

6. Use the tokenizer.decode method to decode the generated IDs back into text.

7. Adjust generation parameters such as max_new_tokens and do_sample as needed to control the length and diversity of the generated text.
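The steps above can be sketched as a short Python script using Hugging Face transformers. This is a minimal sketch, not the official example: the repository ID `LGAI-EXAONE/EXAONE-3.5-2.4B-Instruct-AWQ`, the system prompt, and the sampling values are assumptions, and the helper names `build_messages` and `generation_kwargs` are hypothetical. Loading with `device_map="auto"` also needs the `accelerate` package, and AWQ inference generally expects a CUDA GPU.

```python
"""Minimal chat-generation sketch for EXAONE-3.5-2.4B-Instruct-AWQ (assumptions noted inline)."""
from typing import Dict, List

# Assumed Hugging Face repository ID; verify it before use.
MODEL_ID = "LGAI-EXAONE/EXAONE-3.5-2.4B-Instruct-AWQ"


def build_messages(prompt: str, system: str = "You are a helpful assistant.") -> List[Dict[str, str]]:
    """Step 3: wrap an English or Korean prompt in role/content chat messages."""
    return [
        {"role": "system", "content": system},  # illustrative system prompt
        {"role": "user", "content": prompt},
    ]


def generation_kwargs(max_new_tokens: int = 128, do_sample: bool = False) -> Dict[str, object]:
    """Step 7: settings that control output length and diversity."""
    kwargs: Dict[str, object] = {"max_new_tokens": max_new_tokens, "do_sample": do_sample}
    if do_sample:
        kwargs.update(temperature=0.7, top_p=0.9)  # illustrative values, not tuned
    return kwargs


def main(prompt: str = "스위스 알프스에 대해 간단히 설명해 주세요.",  # "Please briefly describe the Swiss Alps."
         max_new_tokens: int = 128, do_sample: bool = False) -> str:
    # Step 1: needs `pip install torch transformers autoawq accelerate`.
    # Imports are deferred so the helpers above work without these packages.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    # Step 2: load tokenizer and model; EXAONE 3.5 ships custom modeling code.
    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID,
        torch_dtype=torch.float16,
        trust_remote_code=True,
        device_map="auto",
    )

    # Step 4: apply the chat template and tokenize in one call (plain tensor of IDs).
    input_ids = tokenizer.apply_chat_template(
        build_messages(prompt),
        add_generation_prompt=True,
        return_tensors="pt",
        return_dict=False,
    ).to(model.device)

    # Step 5: generate with the step-7 settings.
    output = model.generate(
        input_ids,
        eos_token_id=tokenizer.eos_token_id,
        **generation_kwargs(max_new_tokens, do_sample),
    )

    # Step 6: decode only the newly generated tokens, not the echoed prompt.
    reply = tokenizer.decode(output[0][input_ids.shape[-1]:], skip_special_tokens=True)
    print(reply)
    return reply
```

Calling `main()` downloads the checkpoint on first use and prints the reply; for example, `main("Explain AWQ in one sentence.", max_new_tokens=256, do_sample=True)` switches to sampled, longer output. Keeping the step-7 settings in one helper makes it easy to compare greedy and sampled decoding without touching the loading code.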
