Pixtral Large Instruct 2411

Overview

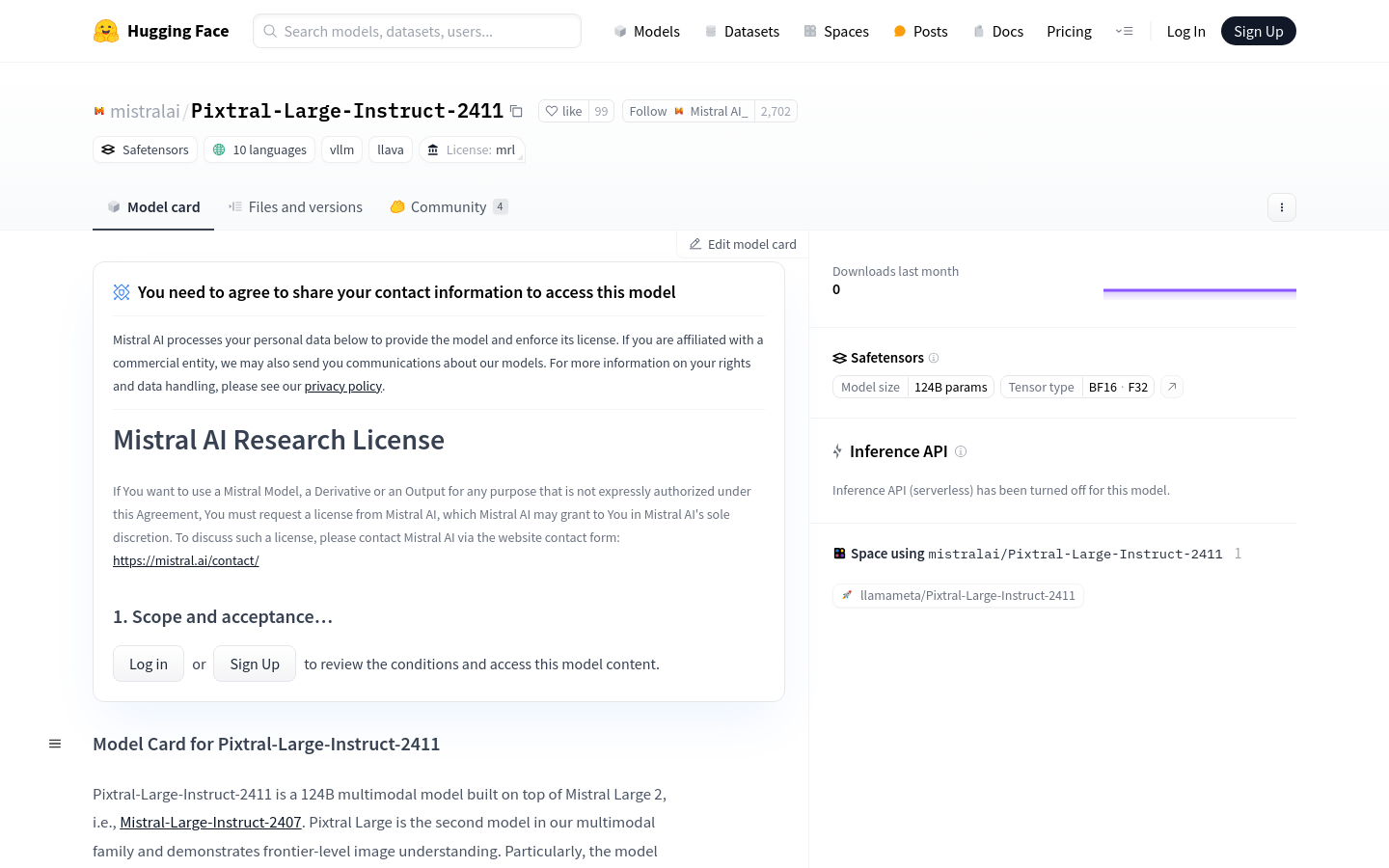

Pixtral-Large-Instruct-2411, developed by Mistral AI, is a 124-billion-parameter multimodal model built on Mistral Large 2. It offers state-of-the-art image understanding, interpreting documents, charts, and natural images, while retaining the leading text comprehension of Mistral Large 2. The model reports strong results on benchmarks such as MathVista, DocVQA, and VQAv2, which makes it a powerful tool for research and business applications.

Target Users

The target audience includes researchers, developers, and enterprises that need a high-performance model for understanding and processing large volumes of image and text data. With its multimodal capabilities, Pixtral-Large-Instruct-2411 is well suited to professional users engaged in complex data analysis and pattern recognition.

Use Cases

On the DocVQA dataset, Pixtral-Large-Instruct-2411 reads document images and answers questions about their content.

On MathVista, the model solves mathematical problems posed in visual contexts, demonstrating its mathematical reasoning.

On the VQAv2 dataset, the model identifies visual elements in images and answers related questions.

Features

State-of-the-art multimodal performance: Achieved leading results on various image understanding datasets.

Extension of Mistral Large 2: Adds image comprehension without sacrificing text performance.

123B-parameter multimodal decoder and 1B-parameter vision encoder: Provides robust image and text processing capabilities.

128K context window: Capable of accommodating at least 30 high-resolution images.

System prompt handling: Improved support for system prompts, which helps the model produce its best results.

Standardized instruction templates (V7): Offers standardized templates to guide the model's responses.

Research-use only: The model and its derivatives are intended for research purposes only.

How to Use

1. Install the vLLM library: Ensure you have vLLM >= v0.6.4.post1 and mistral_common >= 1.5.0 installed.

2. Start the server: Use the vLLM serve command to launch the Pixtral-Large-Instruct-2411 model service.

3. Configure system prompts: Load and configure the SYSTEM_PROMPT.txt file as needed to guide the model's behavior.

4. Build requests: Construct request data containing system prompts and user messages, including text and image URLs.

5. Send requests: Use HTTP POST requests to send the data to the server and receive the model's response.

6. Process responses: Parse the response returned by the model to extract useful information.

7. Offline usage: If needed, you can also run the model locally without a server using the vLLM library.
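Steps 3 through 6 can be sketched in Python roughly as follows. This is a minimal illustration, not the official client: it assumes the server started in step 2 exposes vLLM's OpenAI-compatible chat route at `http://localhost:8000/v1/chat/completions`, that the model is registered as `mistralai/Pixtral-Large-Instruct-2411`, and that images are passed as `image_url` content parts. The helper names (`load_system_prompt`, `build_payload`, `send_request`, `extract_text`) are hypothetical and exist only for this example.

```python
import json
import urllib.request

# Assumptions (not stated in the text above): the server address, the model
# identifier, and the OpenAI-compatible chat route served by `vllm serve`.
SERVER_URL = "http://localhost:8000/v1/chat/completions"
MODEL_NAME = "mistralai/Pixtral-Large-Instruct-2411"


def load_system_prompt(path):
    """Step 3: read the system prompt text, e.g. from SYSTEM_PROMPT.txt."""
    with open(path, "r", encoding="utf-8") as f:
        return f.read().strip()


def build_payload(system_prompt, question, image_urls, max_tokens=256):
    """Step 4: assemble a request with a system message and a user message."""
    # The user message mixes one text part with zero or more image parts.
    user_content = [{"type": "text", "text": question}]
    for url in image_urls:
        user_content.append({"type": "image_url", "image_url": {"url": url}})
    return {
        "model": MODEL_NAME,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_content},
        ],
        "max_tokens": max_tokens,
    }


def send_request(payload, url=SERVER_URL, timeout=120):
    """Step 5: POST the payload as JSON and return the decoded response."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def extract_text(response):
    """Step 6: pull the assistant's reply out of a chat-completion response."""
    return response["choices"][0]["message"]["content"]
```

With a server running, a call such as `extract_text(send_request(build_payload(load_system_prompt("SYSTEM_PROMPT.txt"), "What does this chart show?", ["https://example.com/chart.png"])))` would return the model's answer as a string; the image URL here is a placeholder.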
