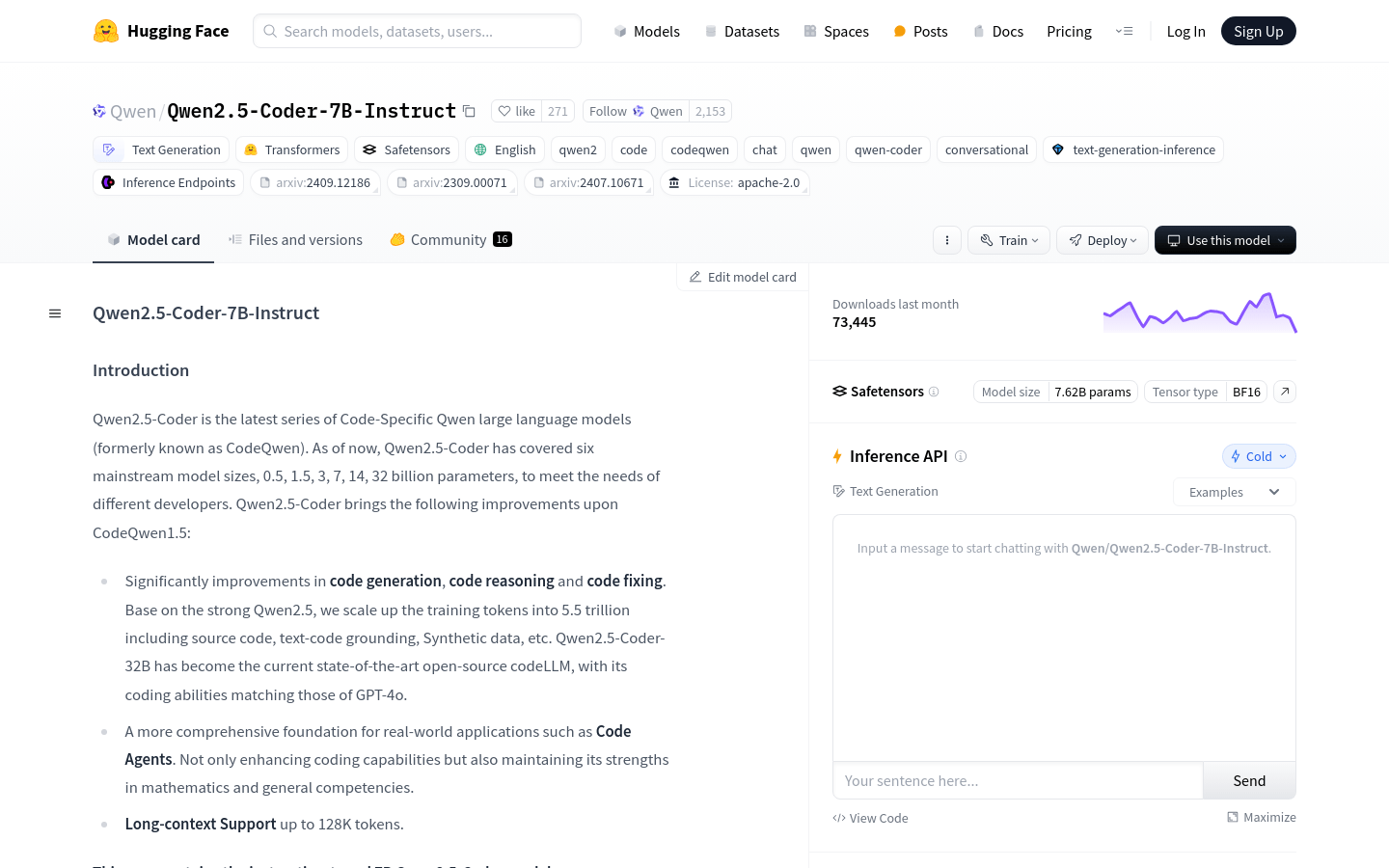

Qwen2.5 Coder 7B Instruct

Overview

Qwen2.5-Coder-7B-Instruct is a large language model designed specifically for code. It is part of the Qwen2.5-Coder series, which comes in six mainstream sizes (0.5, 1.5, 3, 7, 14, and 32 billion parameters) to meet the diverse needs of developers. Compared with its predecessor, CodeQwen1.5, the series shows significant improvements in code generation, code reasoning, and code fixing. It was trained on 5.5 trillion tokens of source code, code-related text, and synthetic data. The largest model in the series, Qwen2.5-Coder-32B, is reported to match the coding capabilities of GPT-4o, making it one of the most capable open-source code LLMs at the time of its release. Qwen2.5-Coder-7B-Instruct itself supports context lengths of up to 128K tokens, providing a solid foundation for practical applications such as code agents.

Target Users

This model is aimed at developers and programmers, especially those who work with large amounts of code and complex projects. Its code generation, reasoning, and debugging capabilities can improve both development efficiency and code quality.

Use Cases

A developer uses Qwen2.5-Coder-7B-Instruct to generate code for a quicksort algorithm.

A software engineer utilizes the model to fix bugs in an existing codebase.

A data scientist employs the model to generate code for data processing and analysis.

Features

Code Generation: Significantly enhances code generation capabilities and supports multiple programming languages.

Code Reasoning: Boosts understanding of code logic to assist developers in reasoning through their code.

Code Debugging: Helps detect and fix errors in code.

Long Context Support: Capable of handling long contexts of up to 128K tokens, ideal for large codebases.

Transformer Architecture: Built on the Transformer architecture with RoPE, SwiGLU, RMSNorm, and attention QKV bias.

Parameter Count: Contains 7.61B parameters, with 6.53B being non-embedding parameters.

Layers and Attention Heads: Has 28 layers, with 28 attention heads for queries (Q) and 4 for keys and values (KV), i.e. grouped-query attention.

Suitable for Practical Applications: Improves coding capabilities while retaining strong mathematical and general-purpose abilities.
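The architecture figures above lend themselves to some quick arithmetic. The following is a rough sketch, not an official calculation: it derives the embedding parameter count and the query-to-KV head ratio from the numbers listed, and estimates the key/value cache size per token. The head dimension of 128 and the 2 bytes per cached value (a 16-bit cache) are assumptions that do not appear in the text above, and the helper names are hypothetical.

```python
# Figures taken from the feature list above.
TOTAL_PARAMS_B = 7.61          # total parameters, in billions
NON_EMBEDDING_PARAMS_B = 6.53  # non-embedding parameters, in billions
NUM_LAYERS = 28
NUM_Q_HEADS = 28
NUM_KV_HEADS = 4

# Assumptions for illustration only (not stated in the text above).
ASSUMED_HEAD_DIM = 128         # dimension of each attention head
ASSUMED_BYTES_PER_VALUE = 2    # 16-bit key/value cache entries


def embedding_params_b() -> float:
    """Parameters held in embeddings, in billions (total minus non-embedding)."""
    return round(TOTAL_PARAMS_B - NON_EMBEDDING_PARAMS_B, 2)


def q_heads_per_kv_head() -> int:
    """How many query heads share one key/value head."""
    return NUM_Q_HEADS // NUM_KV_HEADS


def kv_cache_bytes_per_token(num_kv_heads: int = NUM_KV_HEADS) -> int:
    """Estimated KV cache size for a single token across all layers.

    The leading factor of 2 accounts for storing both keys and values.
    """
    return (
        2
        * NUM_LAYERS
        * num_kv_heads
        * ASSUMED_HEAD_DIM
        * ASSUMED_BYTES_PER_VALUE
    )


def kv_cache_saving_factor() -> float:
    """Cache size with one KV head per query head, relative to 4 KV heads."""
    return kv_cache_bytes_per_token(NUM_Q_HEADS) / kv_cache_bytes_per_token()
```

Under these assumptions, sharing each KV head across several query heads shrinks the per-token cache by the same factor as the head ratio, which is part of what makes long contexts practical in memory terms.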

How to Use

1. Visit the Hugging Face platform and locate the Qwen2.5-Coder-7B-Instruct model.

2. Import AutoModelForCausalLM and AutoTokenizer from the transformers library, following the code snippets provided on the model page.

3. Load the model and tokenizer using the model name.

4. Prepare an input prompt, such as a request to write code for a specific function.

5. Use the tokenizer to convert the prompt into the format the model expects: apply the chat template, then tokenize the result.

6. Pass the processed input to the model and set generation parameters, such as the maximum number of new tokens.

7. After the model generates a response, decode the generated tokens using the tokenizer to obtain the final result.

8. Adjust generation parameters as needed to optimize code generation outcomes.
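The steps above can be sketched roughly as follows, closely following the usage pattern shown on the model's Hugging Face page. This is a minimal sketch under several assumptions: the `transformers`, `torch`, and `accelerate` packages are installed, there is enough GPU or CPU memory to hold the weights, and the model ID is `Qwen/Qwen2.5-Coder-7B-Instruct`. The helper names `build_messages` and `generate_code` and the default system prompt are illustrative choices, not part of any library.

```python
MODEL_NAME = "Qwen/Qwen2.5-Coder-7B-Instruct"  # assumed Hugging Face model ID

# Illustrative default; any system prompt (or none) can be used instead.
DEFAULT_SYSTEM_PROMPT = "You are Qwen, created by Alibaba Cloud. You are a helpful assistant."


def build_messages(prompt, system_prompt=DEFAULT_SYSTEM_PROMPT):
    """Step 4: wrap the user's request in the chat message format."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": prompt},
    ]


def generate_code(prompt, max_new_tokens=512, **generate_kwargs):
    """Steps 2-8: load the model, run generation, and decode the reply.

    Extra keyword arguments (for example do_sample, temperature, top_p)
    are passed through to model.generate for tuning (step 8).
    """
    # Step 2: imported lazily so the helpers above work without transformers.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    # Step 3: load the tokenizer and model by name.
    # device_map="auto" relies on the accelerate package.
    tokenizer = AutoTokenizer.from_pretrained(MODEL_NAME)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_NAME, torch_dtype="auto", device_map="auto"
    )

    # Step 5: apply the chat template, then tokenize.
    text = tokenizer.apply_chat_template(
        build_messages(prompt), tokenize=False, add_generation_prompt=True
    )
    model_inputs = tokenizer([text], return_tensors="pt").to(model.device)

    # Step 6: generate with a cap on the number of new tokens.
    generated_ids = model.generate(
        **model_inputs, max_new_tokens=max_new_tokens, **generate_kwargs
    )

    # Step 7: drop the prompt tokens and decode only the newly generated ones.
    new_tokens = generated_ids[0][model_inputs.input_ids.shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `generate_code("Write a quicksort algorithm in Python.")` downloads the weights on first use and returns the model's reply as a string. In a long-running program it would make sense to load the model once and reuse it rather than reloading on every call, as this sketch does for brevity.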
