DualGS

Overview

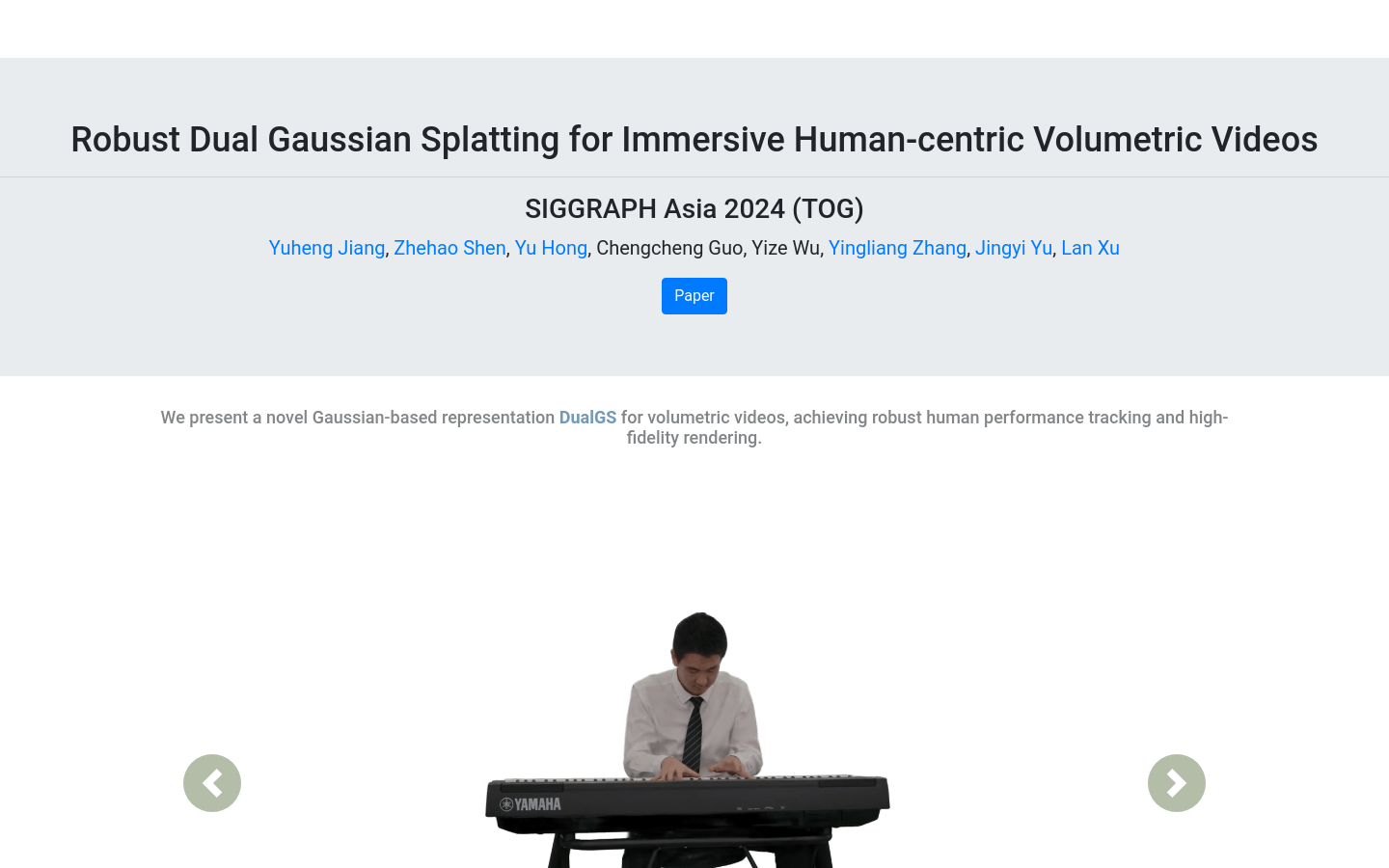

Robust Dual Gaussian Splatting (DualGS) is a Gaussian-based volumetric video representation for capturing complex human performances. It optimizes two sets of Gaussians, joint Gaussians and skin Gaussians, to achieve robust tracking and high-fidelity rendering. Presented at SIGGRAPH Asia 2024, it supports real-time rendering on low-end mobile devices and VR headsets, which allows interactive viewing. DualGS uses a mixed compression strategy that reaches compression ratios of up to 120x, reducing the cost of storing and transmitting volumetric video.
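To give a feel for what a ratio of up to 120x means for storage and streaming, the arithmetic can be sketched as below. The function names and the example frame size and frame rate are hypothetical values chosen for illustration; they are not measurements from DualGS, and 120 is the best-case ratio quoted above rather than a guaranteed figure.

```python
def compressed_size_mb(raw_size_mb, ratio=120.0):
    """Size after compression at the given ratio (raw size divided by ratio)."""
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    return raw_size_mb / ratio


def required_bandwidth_mbps(size_mb_per_frame, fps):
    """Megabits per second needed to stream frames of the given size (1 byte = 8 bits)."""
    return size_mb_per_frame * 8 * fps


# Hypothetical inputs for illustration only, not DualGS measurements.
raw_frame_mb = 42.0  # assumed uncompressed size of one volumetric frame
fps = 30.0           # assumed playback rate

small = compressed_size_mb(raw_frame_mb)
print(f"per frame: {raw_frame_mb} MB -> {small:.2f} MB")
print(f"stream: {required_bandwidth_mbps(small, fps):.0f} Mbit/s")
```

Since 120x is an upper bound, a lower `ratio` argument gives a more conservative estimate.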

Target Users

DualGS is aimed at 3D graphic designers, VR/AR developers, and video producers who need a volumetric video representation that delivers immersive experiences and renders in real time. Its high compression ratio and real-time rendering make it particularly suitable for scenarios that demand high-quality visuals on resource-constrained devices.

Use Cases

Real-time rendering of concert performers in VR headsets

Showcasing high-fidelity sports motion capture videos on mobile devices

Providing realistic human motion previews for special effects in film production

Features

Enables real-time rendering on low-end mobile devices and VR headsets

Uses a dual Gaussian representation to capture complex human performances

Achieves up to 120 times compression through a mixed compression strategy

Optimizes joint and skin Gaussians for robust tracking

Employs a coarse-to-fine strategy for overall motion prediction and fine-grained optimization

Supports multi-view inputs to capture challenging human performances
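The idea of a small set of joint Gaussians carrying the overall motion while a dense set of skin Gaussians carries appearance can be illustrated with a toy sketch. This is not the DualGS algorithm: the inverse-distance blending over the nearest joints, the translation-only motion, and the names `blend_weights` and `move_skin` are all assumptions made for illustration. It covers only a coarse motion step; a fine-grained optimization stage would refine the result afterwards.

```python
import math


def blend_weights(point, joints, k=2, eps=1e-8):
    """Inverse-distance weights over the k nearest joints.

    Returns a list of (joint_index, weight) pairs whose weights sum to 1.
    """
    nearest = sorted((math.dist(point, j), i) for i, j in enumerate(joints))[:k]
    inv = [(i, 1.0 / (d + eps)) for d, i in nearest]
    total = sum(w for _, w in inv)
    return [(i, w / total) for i, w in inv]


def move_skin(skin, joints_rest, joints_now, k=2):
    """Coarse step: shift each skin point by the blended displacement of its nearest joints."""
    moved = []
    for p in skin:
        offset = [0.0, 0.0, 0.0]
        # Weights are computed in the rest pose so they stay fixed over time.
        for i, w in blend_weights(p, joints_rest, k):
            for axis in range(3):
                offset[axis] += w * (joints_now[i][axis] - joints_rest[i][axis])
        moved.append(tuple(p[a] + offset[a] for a in range(3)))
    return moved


# Hypothetical toy data: two joints move up by 1 unit; the skin point follows.
joints_rest = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
joints_now = [(0.0, 1.0, 0.0), (1.0, 1.0, 0.0)]
skin = [(0.5, 0.0, 0.0)]
print(move_skin(skin, joints_rest, joints_now))
```

In this toy setup only the joint positions change from frame to frame, which hints at why a compact motion representation can help with both tracking and compression.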

How to Use

Visit the official DualGS website for more information

Download and install the necessary software or plugins to support DualGS technology

Import or create volumetric video content and render it using DualGS technology

Adjust rendering settings to accommodate different devices and performance requirements

Utilize DualGS's compression features to optimize the storage and transmission of video content

Experience immersive volumetric video content on VR headsets or mobile devices
