Loopy Model

Overview

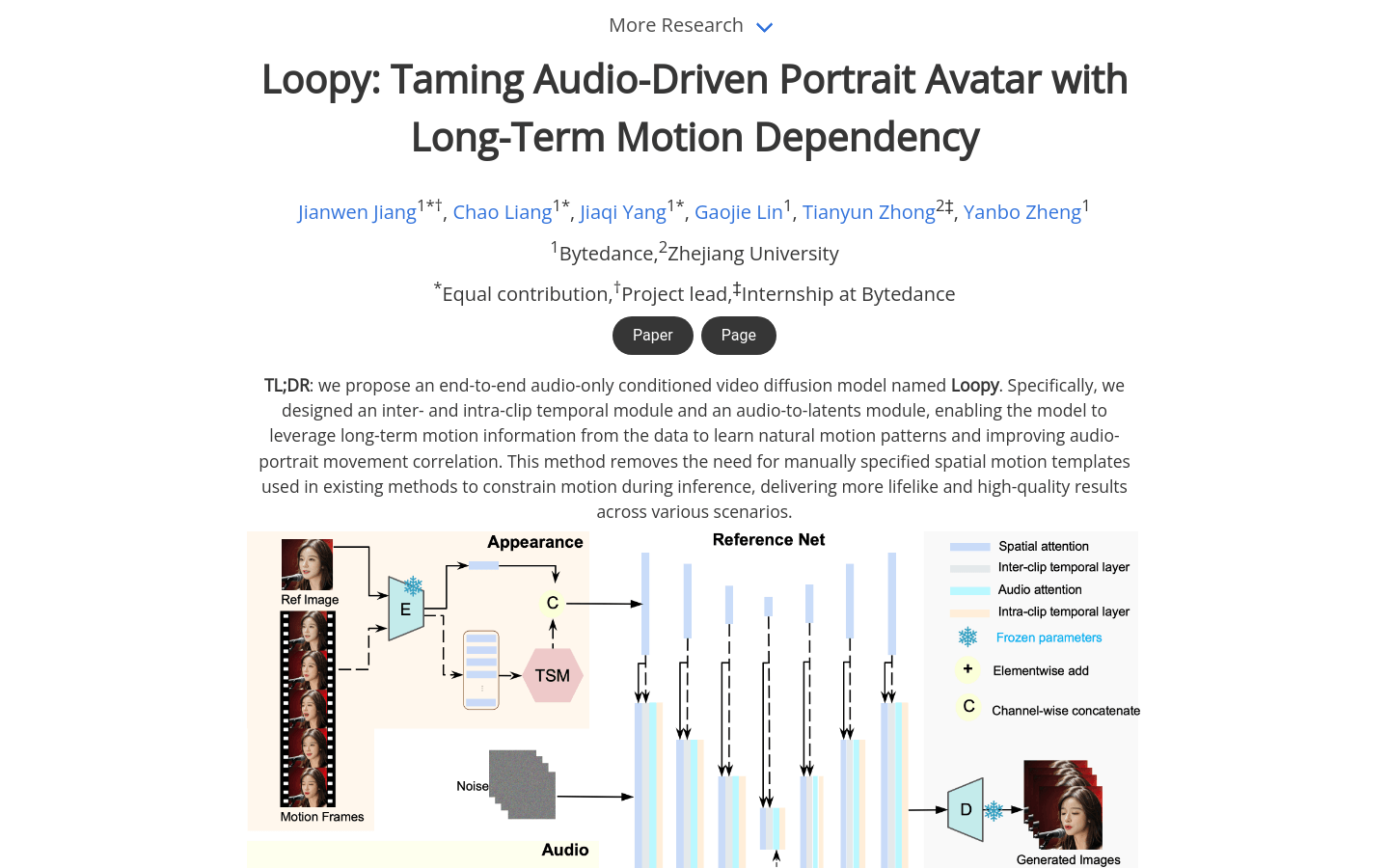

Loopy is an end-to-end audio-driven video diffusion model. It combines temporal modules designed for cross-clip and intra-clip interactions with an audio-to-latent representation module. Together, these let the model exploit long-term motion information in the data to learn natural movement patterns and strengthen the correlation between audio and portrait motion. As a result, Loopy does not need the manually specified spatial motion templates that existing methods rely on, and it produces more realistic, higher-quality results across a range of scenarios.
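The overview names cross-clip and intra-clip temporal modules without showing how they differ. The following is a toy sketch of that general idea, not Loopy's implementation. It assumes both modules are plain temporal self-attention over per-frame feature vectors: in the cross-clip (inter-clip) variant, frames of the current clip also attend to motion frames carried over from the previous clip, while the intra-clip variant attends only within the current clip. Learned projections, multiple heads, audio conditioning, and real latent shapes are omitted, and every function name here is hypothetical.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    # Subtract the max for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(queries: List[Vector], keys_values: List[Vector]) -> List[Vector]:
    # Scaled dot-product attention where keys and values are the same vectors
    # (a simplification: real modules apply learned Q/K/V projections).
    d = len(queries[0])
    out = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys_values]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, keys_values)) for j in range(d)])
    return out


def add(a: Vector, b: Vector) -> Vector:
    return [x + y for x, y in zip(a, b)]


def inter_clip_attention(motion_frames: List[Vector], current_frames: List[Vector]) -> List[Vector]:
    # Hypothetical cross-clip layer: current frames see previous-clip motion frames plus themselves.
    return attend(current_frames, motion_frames + current_frames)


def intra_clip_attention(current_frames: List[Vector]) -> List[Vector]:
    # Hypothetical intra-clip layer: current frames see only the current clip.
    return attend(current_frames, current_frames)


def temporal_block(motion_frames: List[Vector], current_frames: List[Vector]) -> List[Vector]:
    # Apply the cross-clip layer, then the intra-clip layer, each with a residual connection.
    h = [add(x, a) for x, a in zip(current_frames, inter_clip_attention(motion_frames, current_frames))]
    return [add(x, a) for x, a in zip(h, intra_clip_attention(h))]


if __name__ == "__main__":
    motion = [[1.0, 0.0], [0.9, 0.1]]               # made-up features from the previous clip
    current = [[0.0, 1.0], [0.1, 0.9], [0.2, 0.8]]  # made-up features for the current clip
    out = temporal_block(motion, current)
    print(len(out), len(out[0]))
```

Running the cross-clip layer before the intra-clip layer is one plausible ordering: it lets motion from the previous clip influence the current frames before they are smoothed against each other, which is the kind of long-range continuity the overview describes.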

Target Users

Loopy is designed for developers and researchers who need to animate portrait images from audio, making it well suited to creating realistic avatars for virtual reality, augmented reality, or video conferencing applications.

Use Cases

Use Loopy to generate realistic avatars synchronized with voice during video conferencing.

Leverage Loopy to create dynamically responsive facial expressions for characters in virtual reality games.

Use Loopy to generate personalized dynamic portraits for social media platforms.

Features

Supports a variety of visual and audio styles, generating vivid motion details from audio alone.

Produces different, motion-adaptive results for the same reference image depending on the audio input.

Supports non-verbal actions such as sighing, emotion-driven eyebrow and eye movements, and natural head motion.

Supports fast-paced, soothing, or realistic singing performances.

Accepts side-profile portrait images as input.

Demonstrates significant advantages over recent methods in generating realistic dynamics.

How to Use

Visit the official Loopy website or GitHub page.

Read the documentation to understand how the model works and its usage requirements.

Download the necessary code and datasets.

Set up the environment according to the guidelines, including installing required libraries and dependencies.

Test using provided audio files and reference images.

Adjust parameters to fine-tune the generated portrait animation.

Integrate Loopy into your own projects or applications.
