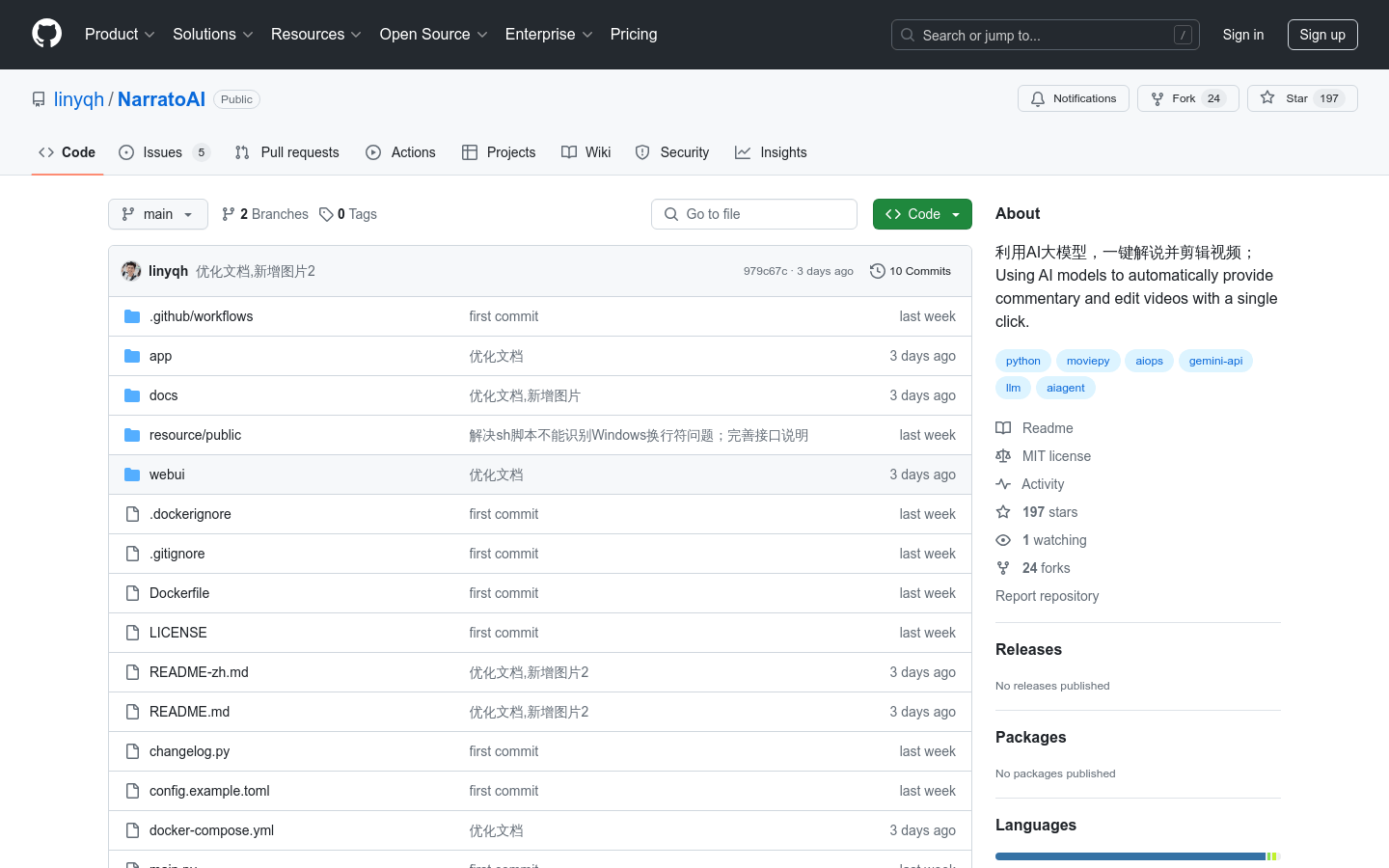

NarratoAI

Overview

NarratoAI is a tool that uses large AI models to provide one-click narration and video editing. It offers an end-to-end workflow covering script writing, automatic video editing, voiceover, and subtitle generation, all powered by LLMs to make content creation more efficient.

Target Users

NarratoAI is designed for creators and editors who need to produce video content quickly, particularly those who want to use AI to speed up their video production.

Use Cases

Video creators using NarratoAI to quickly generate narration videos.

Educational institutions utilizing the tool for creating instructional videos.

Businesses employing NarratoAI to produce product introduction videos.

Features

Script Writing: Automatically generates video narration scripts.

Automatic Video Editing: Cuts videos automatically based on the script.

Voiceover: Provides text-to-speech functionality.

Subtitle Generation: Automatically creates subtitles for videos.

Model Selection: Currently supports the Gemini model, with more models planned for the future.

Video Parameter Configuration: Allows users to configure basic video settings.

Video Generation: One-click generation of final videos.
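The script-driven editing and subtitle features can be illustrated with a minimal sketch. This is not NarratoAI's implementation: the `Segment` structure and the `build_edit` and `fmt_srt_time` helpers are hypothetical, and the sketch assumes each script segment pairs one time range in the source video with one narration line. It turns such a script into a cut list (source ranges to keep, in order) and SRT subtitles timed on the edited output.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """Hypothetical script segment: a source-video clip plus the line narrated over it."""
    start: float  # clip start in the source video, in seconds
    end: float    # clip end in the source video, in seconds
    narration: str


def fmt_srt_time(seconds: float) -> str:
    """Format seconds as an SRT timestamp (HH:MM:SS,mmm)."""
    ms = round(seconds * 1000)
    h, ms = divmod(ms, 3_600_000)
    m, ms = divmod(ms, 60_000)
    s, ms = divmod(ms, 1000)
    return f"{h:02d}:{m:02d}:{s:02d},{ms:03d}"


def build_edit(segments: list[Segment]) -> tuple[list[tuple[float, float]], str]:
    """Return (cut_list, srt_text).

    The cut list holds the source ranges to keep, in script order. Subtitles are
    timed on the output timeline, where the kept clips play back to back.
    """
    cuts: list[tuple[float, float]] = []
    entries: list[str] = []
    t = 0.0  # current position on the output timeline
    for i, seg in enumerate(segments, start=1):
        if seg.end <= seg.start:
            raise ValueError(f"segment {i} has a non-positive duration")
        duration = seg.end - seg.start
        cuts.append((seg.start, seg.end))
        entries.append(
            f"{i}\n{fmt_srt_time(t)} --> {fmt_srt_time(t + duration)}\n{seg.narration}\n"
        )
        t += duration
    # SRT separates entries with a blank line
    return cuts, "\n".join(entries)


if __name__ == "__main__":
    script = [
        Segment(12.0, 15.5, "Our hero arrives in town."),
        Segment(40.0, 43.25, "But trouble is waiting."),
    ]
    cut_list, srt = build_edit(script)
    print(cut_list)  # [(12.0, 15.5), (40.0, 43.25)]
    print(srt)
```

Subtitles are timed against the output timeline rather than the source timeline because the kept clips are concatenated; the second line starts at 00:00:03,500 even though its footage comes from 40 seconds into the source. Actually cutting and joining the clips (for example with FFmpeg) is left out of the sketch.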

How to Use

1. Sign up for a Google AI Studio account and obtain your API key.

2. Make sure your network environment can reach Google services.

3. Clone the project repository and deploy it with Docker.

4. Access the web interface and select a video for narration.

5. Save the script and begin video editing.

6. Review the video and regenerate or manually edit if necessary.

7. Configure video parameters and initiate video generation.
