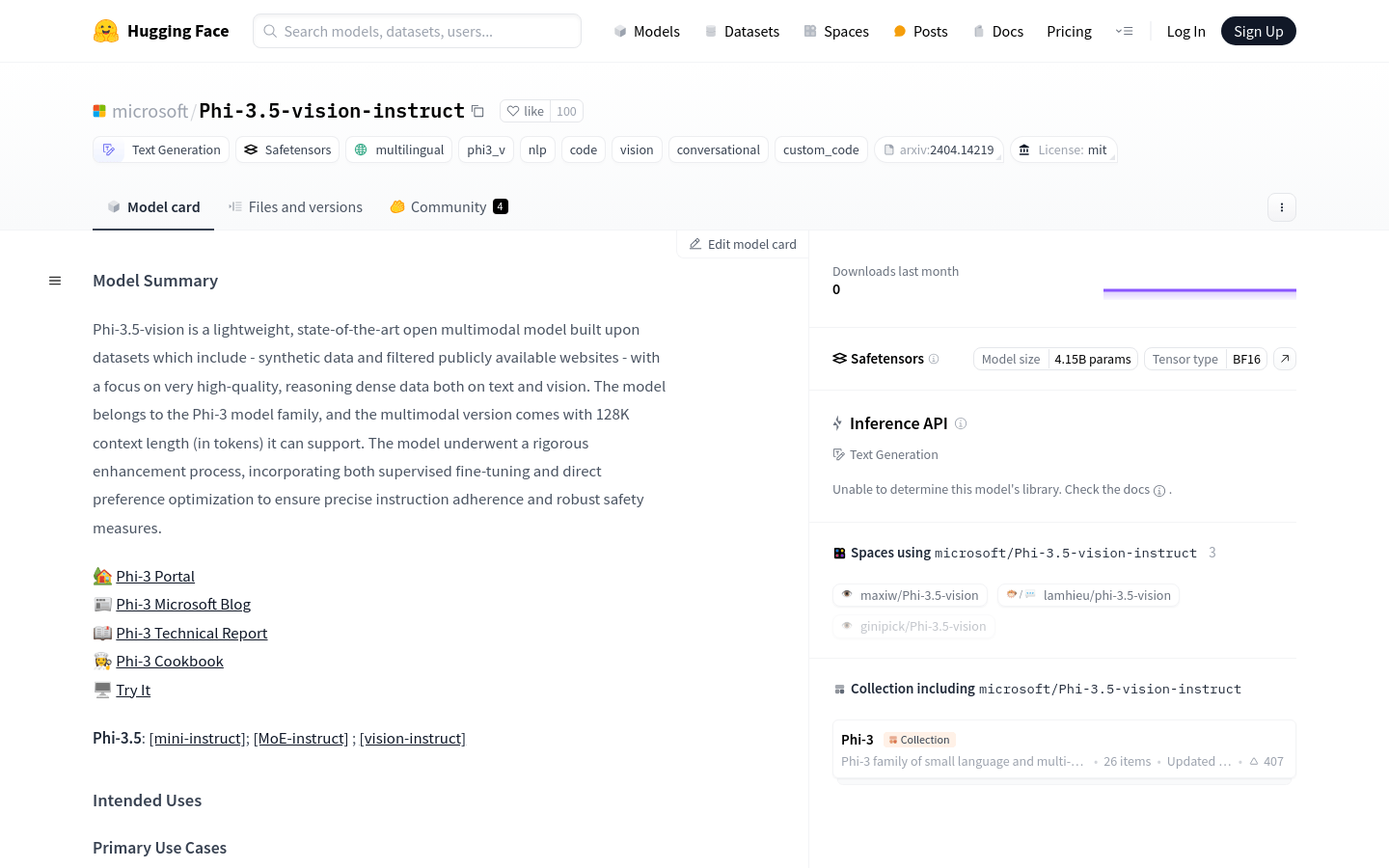

Phi 3.5 Vision

Overview

Phi-3.5-vision is a lightweight multimodal model developed by Microsoft. It is trained on datasets that include synthetic data and curated publicly available websites, with a focus on high-quality, reasoning-dense data for both text and vision. The model belongs to the Phi-3 family and went through a rigorous enhancement process that combines supervised fine-tuning with direct preference optimization to improve instruction following and safety.

Target Users

The model is aimed at researchers and developers building AI systems and applications that take both visual and text input. It is particularly suited to environments with limited memory or compute, to latency-sensitive scenarios, and to tasks that require image comprehension.

Use Cases

Office automation: summarizing multi-page documents.

Education: analyzing teaching slides and extracting their key points.

Content creation: comparing sets of images and telling a story across them.

Features

Supports multi-frame (multi-image) understanding and reasoning, which suits office scenarios such as multi-page documents.

Shows performance improvements on single-image benchmarks such as MMMU and MMBench.

Offers multilingual support, but is primarily designed for English environments.

Suitable for memory/computation-limited environments and latency-sensitive scenarios.

Supports image understanding, optical character recognition, and comprehension of charts and tables.

Designed to accelerate research on language and multimodal models, and to serve as a building block for generative AI features.

How to Use

1. Obtain the Phi-3.5-vision-instruct model checkpoint.

2. Start from the sample inference code provided with the model.

3. Prepare image data and load it into the model.

4. Construct prompts based on your needs, such as requesting the model to summarize an image.

5. Generate outputs using the model, such as text summaries or image comparison results.

6. Adjust model parameters as necessary to optimize performance and output quality.

7. Integrate the model into larger AI applications or systems.
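
The steps above can be sketched in Python with the Hugging Face transformers library. This is a minimal sketch under stated assumptions, not the official sample code: it assumes the checkpoint is published under the model ID microsoft/Phi-3.5-vision-instruct, that a CUDA GPU is available, and that the model's custom code is trusted (trust_remote_code=True). The function names build_messages and describe_images are hypothetical helpers introduced here for illustration.

```python
from typing import Dict, List

# Assumption: the checkpoint is hosted on Hugging Face under this model ID.
MODEL_ID = "microsoft/Phi-3.5-vision-instruct"


def build_messages(num_images: int, question: str) -> List[Dict[str, str]]:
    """Build a single-turn chat message with one placeholder per image.

    The model expects numbered placeholders such as <|image_1|> in the
    prompt, in the same order as the images passed to the processor.
    """
    placeholders = "".join(f"<|image_{i}|>\n" for i in range(1, num_images + 1))
    return [{"role": "user", "content": placeholders + question}]


def describe_images(image_paths: List[str], question: str,
                    device: str = "cuda", max_new_tokens: int = 500) -> str:
    """Load the model, run one prompt over the images, return the reply text."""
    # Heavy imports stay local so build_messages works without them installed.
    from PIL import Image
    from transformers import AutoModelForCausalLM, AutoProcessor

    # Step 1: obtain the checkpoint (downloaded on first use).
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID,
        device_map=device,
        trust_remote_code=True,
        torch_dtype="auto",
        _attn_implementation="eager",  # "flash_attention_2" on supported GPUs
    )
    # num_crops=4 is a multi-image setting; single images may use more crops.
    processor = AutoProcessor.from_pretrained(
        MODEL_ID, trust_remote_code=True, num_crops=4
    )

    # Step 3: prepare the image data.
    images = [Image.open(path).convert("RGB") for path in image_paths]

    # Step 4: construct the prompt from the chat template.
    prompt = processor.tokenizer.apply_chat_template(
        build_messages(len(images), question),
        tokenize=False,
        add_generation_prompt=True,
    )
    inputs = processor(prompt, images, return_tensors="pt").to(device)

    # Steps 5 and 6: generate; max_new_tokens and do_sample are tunable.
    output_ids = model.generate(
        **inputs,
        eos_token_id=processor.tokenizer.eos_token_id,
        max_new_tokens=max_new_tokens,
        do_sample=False,
    )
    # Drop the prompt tokens so only the newly generated text is decoded.
    new_tokens = output_ids[:, inputs["input_ids"].shape[1]:]
    return processor.batch_decode(
        new_tokens, skip_special_tokens=True, clean_up_tokenization_spaces=False
    )[0]
```

A call might look like describe_images(["slide1.png", "slide2.png"], "Summarize the deck of slides."), where the file names are placeholders for your own images. Greedy decoding (do_sample=False) is used here to keep outputs repeatable; sampling settings are one of the parameters to adjust in step 6.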
