Bailing TTS

Overview:

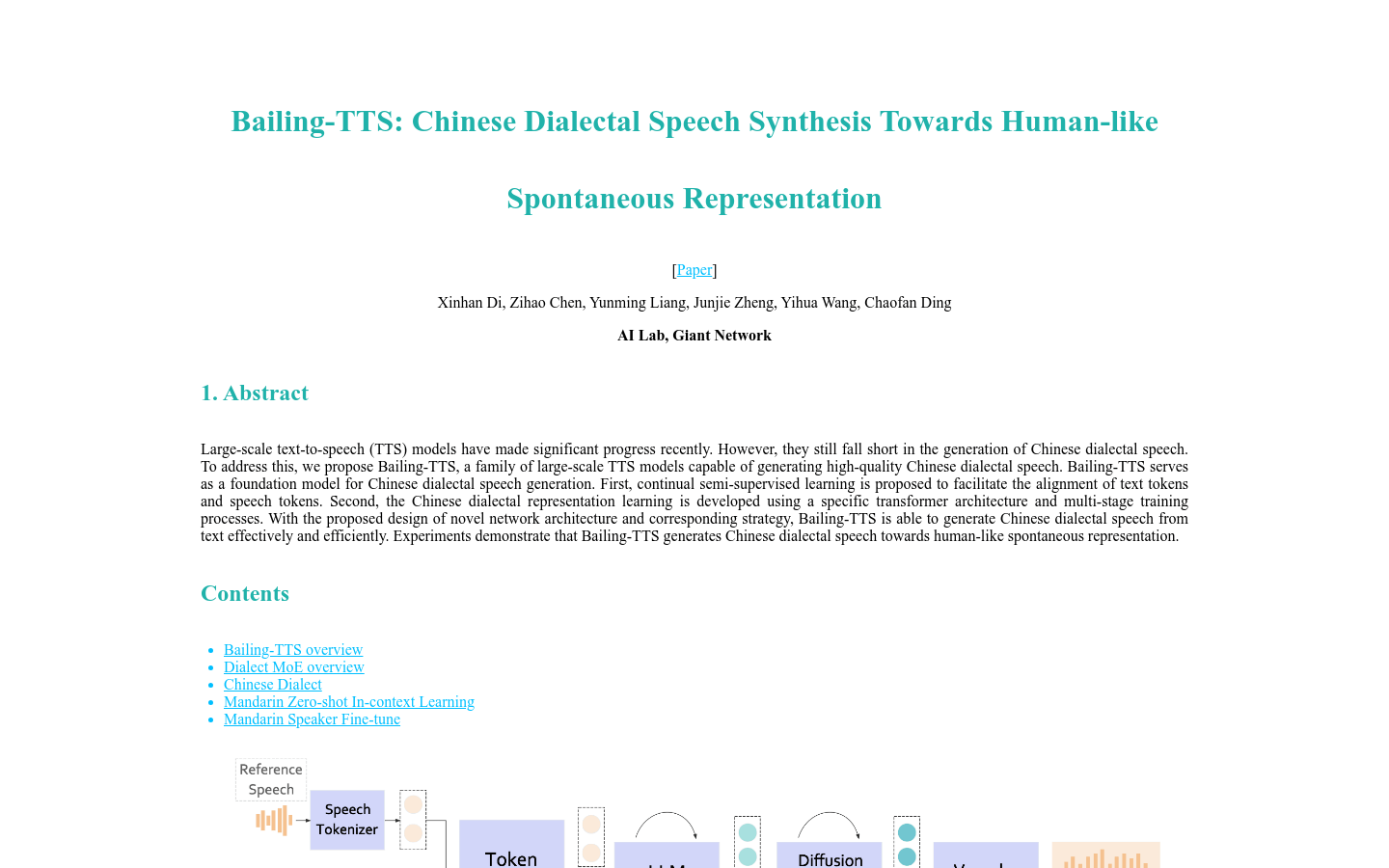

Bailing-TTS is a family of large-scale text-to-speech (TTS) models developed by Giant Network's AI Lab for generating high-quality Chinese dialect speech. It uses continual semi-supervised learning to align text tokens with speech tokens, and it learns Chinese dialect representations with a specific Transformer architecture and a multi-stage training process. Bailing-TTS has been shown to produce speech that closely resembles natural human expression, which makes it a notable option for dialect speech synthesis.

Target Users:

Bailing-TTS is aimed primarily at developers and enterprises that need high-quality Chinese dialect speech synthesis, for example in speech applications, smart assistants, and educational software. It is particularly suited to voice interactions where a natural, authentic dialect improves the user experience.

Features

Continual semi-supervised learning for aligning text tokens and speech tokens.

Utilizes a specific Transformer architecture for Chinese dialect representation learning.

Multi-stage training process to improve dialect speech synthesis quality.

Generates dialect speech that closely matches natural human expression.

Supports multiple Chinese dialects, such as the Henan dialect.

Supports zero-shot in-context learning for Mandarin.

Supports speaker fine-tuning for Mandarin voices.

How to Use

1. Visit the Bailing-TTS model webpage.

2. Select your preferred dialect or Mandarin option.

3. Input or upload the text for speech synthesis.

4. Adjust voice parameters as needed, such as speech rate and pitch.

5. Click the generate button, and the model will produce the speech.

6. Download or play the generated audio file directly.

7. Refine the settings based on the generated audio and regenerate to improve the result.
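The choices made in steps 2 to 4 can be captured in a small settings object before anything is submitted. The sketch below is illustrative only: this page does not describe a programmatic interface for Bailing-TTS, so the class `SynthesisRequest`, its field names, the option list, and the value ranges are all assumptions made for the example and do not reflect the product's actual parameters.

```python
from dataclasses import dataclass, asdict

# Hypothetical option names; this page only mentions Mandarin and the Henan dialect.
SUPPORTED_VOICES = {"mandarin", "henan"}


@dataclass
class SynthesisRequest:
    """Illustrative container for the choices made in steps 2 to 4."""
    text: str                  # step 3: the text to synthesize
    voice: str = "mandarin"    # step 2: dialect or Mandarin
    speech_rate: float = 1.0   # step 4: assumed multiplier, 1.0 = default speed
    pitch: float = 0.0         # step 4: assumed semitone offset, 0.0 = unchanged

    def validate(self) -> None:
        # The ranges below are assumptions, not documented limits.
        if not self.text.strip():
            raise ValueError("text must not be empty")
        if self.voice not in SUPPORTED_VOICES:
            raise ValueError(f"unsupported voice: {self.voice!r}")
        if not 0.5 <= self.speech_rate <= 2.0:
            raise ValueError("speech_rate must be between 0.5 and 2.0")
        if not -12.0 <= self.pitch <= 12.0:
            raise ValueError("pitch must be between -12 and 12 semitones")

    def to_payload(self) -> dict:
        """Validate the settings and return them as a plain dictionary."""
        self.validate()
        return asdict(self)


request = SynthesisRequest(text="你好，世界", voice="henan", speech_rate=0.9)
payload = request.to_payload()
```

Validating the settings up front means a bad value, such as empty text or an unknown dialect, is caught before the generate step rather than after it.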

Featured AI Tools

GPT SoVITS

GPT-SoVITS-WebUI is a zero-shot voice conversion and text-to-speech WebUI. It offers zero-shot TTS, few-shot TTS, cross-language support, and a WebUI toolkit. It supports English, Japanese, and Chinese, and bundles tools for vocal and accompaniment separation, automatic training set splitting, Chinese ASR, and text annotation to help beginners build training datasets and GPT/SoVITS models. Zero-shot TTS works from a 5-second voice sample, and the model can be fine-tuned with as little as 1 minute of training data to improve voice similarity and naturalness. Its documentation covers environment setup, supported Python and PyTorch versions, quick and manual installation, pre-trained models, dataset formats, a to-do list, and acknowledgments.

AI Speech Synthesis

5.8M

Clone Voice

Clone-Voice is a web-based voice cloning tool. Given a sample of any human voice, it can synthesize speech from text in that voice or convert another recording into that voice. It supports 16 languages, including Chinese, English, Japanese, Korean, French, German, and Italian, and can record audio directly from your microphone. Its advantages are simplicity and ease of use, no requirement for an NVIDIA GPU, multi-language support, and flexible voice recording. The product is currently free to use.

AI Speech Synthesis

3.6M