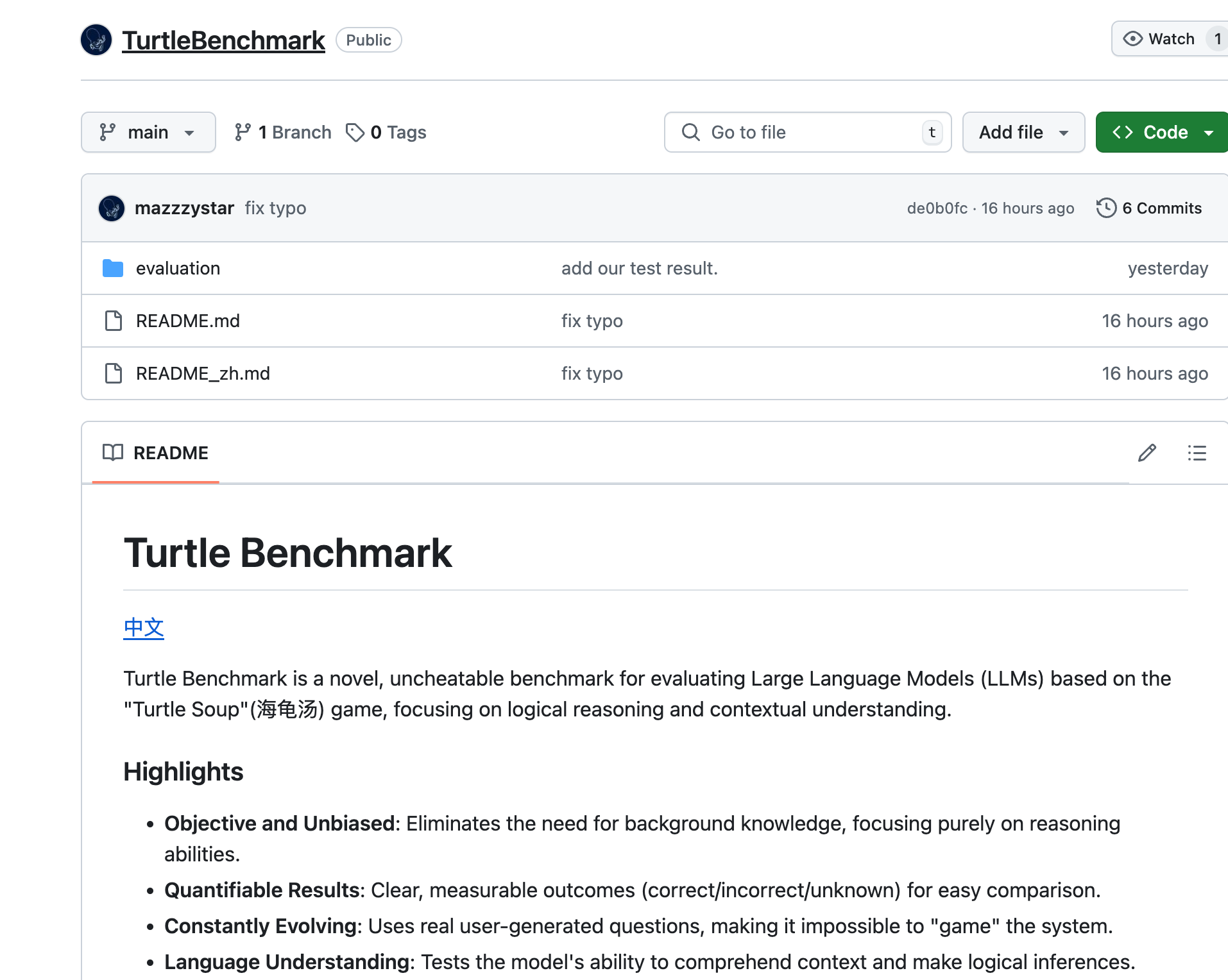

Turtle Benchmark

Overview

Turtle Benchmark is a new, cheat-proof benchmark based on the 'Turtle Soup' game. It assesses the logical reasoning and context comprehension of large language models (LLMs). Because it requires no background knowledge, it yields objective, unbiased, and quantifiable results. Because its questions are real user-generated ones, models cannot easily 'game' it.

Target Users

Turtle Benchmark is designed for researchers and developers who need to evaluate and compare the performance of large language models. It is particularly suited to professionals focused on a model's logical reasoning and context comprehension, and it helps them gain a more accurate picture of a model's performance in Chinese contexts.

Use Cases

Researchers use Turtle Benchmark to assess the performance of different large language models on specific logical reasoning tasks.

Developers utilize Turtle Benchmark to test if their language models can accurately understand and respond to user queries.

Educational institutions use Turtle Benchmark as a teaching tool to help students understand how large language models operate and how their performance is evaluated.

Features

Clear and unbiased objectives: Focuses on reasoning capabilities without requiring background knowledge.

Quantifiable results: Offers clear, measurable outcomes (correct/incorrect/unknown) for easy comparison.

Continual evolution: Utilizes real user-generated questions to prevent system manipulation.

Language understanding: Tests the model’s ability to comprehend context and perform logical inference.

Ease of use: Evaluations can be conducted through simple command line operations.

Rich data: Comprises 32 unique 'Turtle Soup' stories and 1,537 manually labeled annotations.

Results interpretation: Scatter plots compare models' overall accuracy and average per-story accuracy under 2-shot evaluation.
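The two reported metrics can differ when stories have different numbers of annotated questions. The sketch below illustrates one plausible way to compute them. It assumes that overall accuracy is the share of all questions answered correctly and that average story accuracy is the unweighted mean of per-story accuracies; the `score` function, the record layout, and the toy data are hypothetical and are not taken from the project's code.

```python
from collections import defaultdict


def score(records):
    """Return (overall_accuracy, average_story_accuracy).

    records: iterable of (story_id, gold_label, predicted_label) tuples,
    where labels are "correct", "incorrect", or "unknown".
    This layout is an assumption made for illustration.
    """
    per_story = defaultdict(lambda: [0, 0])  # story_id -> [hits, total]
    for story_id, gold, predicted in records:
        per_story[story_id][0] += int(gold == predicted)
        per_story[story_id][1] += 1
    if not per_story:
        raise ValueError("no records to score")

    hits = sum(h for h, _ in per_story.values())
    total = sum(t for _, t in per_story.values())
    overall = hits / total  # every question weighted equally
    # Every story weighted equally, regardless of its question count.
    story_avg = sum(h / t for h, t in per_story.values()) / len(per_story)
    return overall, story_avg


# Hypothetical toy data: two stories with 4 and 2 annotated questions.
records = [
    ("story_a", "correct", "correct"),
    ("story_a", "incorrect", "incorrect"),
    ("story_a", "unknown", "incorrect"),
    ("story_a", "correct", "correct"),
    ("story_b", "incorrect", "correct"),
    ("story_b", "unknown", "unknown"),
]
overall, story_avg = score(records)
print(f"overall={overall:.3f} story_avg={story_avg:.3f}")
```

In this toy data the model answers 4 of 6 questions correctly overall, while the per-story accuracies are 0.75 and 0.5, so the two metrics diverge.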

How to Use

1. Navigate to the Turtle Benchmark project directory.

2. Rename the .env.example file to .env and add your API key.

3. Execute the command `python evaluate.py` to conduct a 2-shot learning evaluation.

4. For zero-shot evaluation, run the command `python evaluate.py --shot 0`.

5. Review the evaluation results, including overall accuracy and average accuracy across stories.

6. Use scatter plots to analyze performance differences among various models.
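For scripted runs, steps 3 and 4 above can be wrapped in a small helper. This is a minimal sketch, not part of the project: the function names and the `project_dir` parameter are hypothetical, and only the two invocations documented above (`python evaluate.py` and `python evaluate.py --shot 0`) are used. It assumes the `.env` file from step 2 is already in place.

```python
import subprocess


def evaluation_command(shot=2):
    """Build the argument list for one of the two documented invocations."""
    if shot == 2:
        return ["python", "evaluate.py"]  # 2-shot is the documented default
    if shot == 0:
        return ["python", "evaluate.py", "--shot", "0"]
    # Other values are not documented above, so this sketch refuses them.
    raise ValueError("only the 2-shot and zero-shot invocations are documented")


def run_evaluation(shot=2, project_dir="."):
    """Run the evaluation from the project directory (hypothetical wrapper)."""
    return subprocess.run(evaluation_command(shot), cwd=project_dir, check=True)


if __name__ == "__main__":
    # Print the commands instead of running them, so this is safe to try
    # outside the project directory.
    print(" ".join(evaluation_command()))
    print(" ".join(evaluation_command(0)))
```

Calling `run_evaluation(0, project_dir=...)` with the path to the project would then perform the zero-shot run. `check=True` makes a failed run raise an exception rather than pass silently.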
