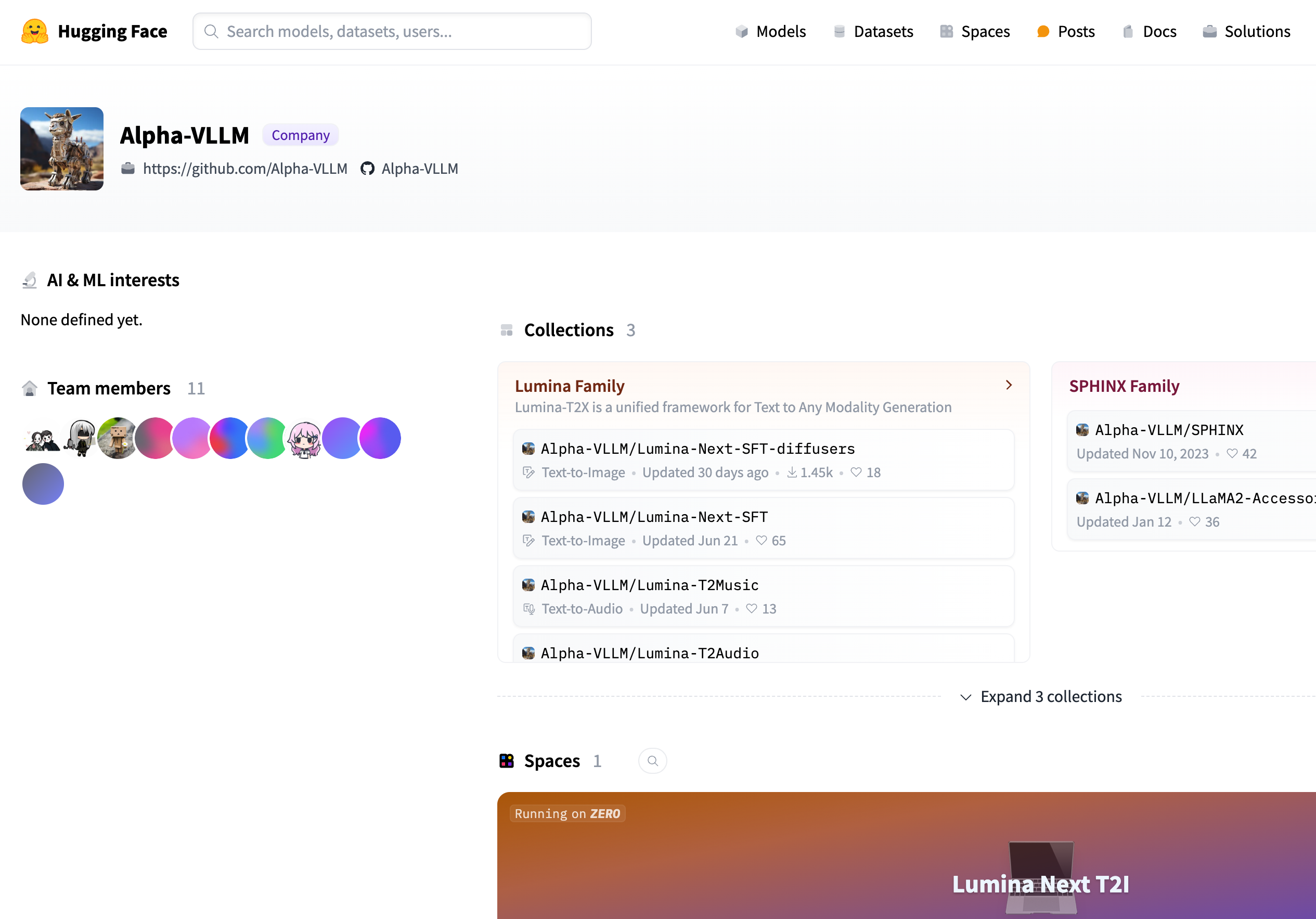

Alpha-VLLM

Overview

Alpha-VLLM offers a range of open-source models for multimodal content generation, including text-to-image, text-to-audio, and text-to-text. The models are built on deep learning and can be applied to content creation, data augmentation, and automated design.

Target Users

Alpha-VLLM models are aimed at developers, data scientists, and creative-industry professionals who want to speed up content creation, automate design work, and generate personalized content.

Use Cases

Generate images that match text descriptions using the Lumina-Next-SFT model.

Compose music synchronized with lyrics using the Lumina-T2Music model.

Automatically generate articles or stories with the mGPT-7B series models.

Features

Text-to-image generation: Models in the Lumina family convert text descriptions into images.

Text-to-audio generation: The Lumina-T2Music model transforms text into audio content.

Text-to-text generation: The mGPT-7B series models facilitate the generation and editing of text content.

Multimodal framework: Provides a unified framework for generating content across different modalities.

Model updates: Models are updated regularly to keep pace with advances in the field.

Community support: As an open-source project, it has an active community of users and contributors.

How to Use

Step 1: Visit the Alpha-VLLM GitHub page to explore available models and documentation.

Step 2: Choose a model that fits your needs, such as text-to-image or text-to-audio.

Step 3: Set up your development environment as per the model documentation and install the necessary dependencies.

Step 4: Download the selected model and load it on a local machine or cloud server.

Step 5: Write code to input text and receive the output generated by the model.

Step 6: Test and tweak the model parameters to optimize the quality of the generated content.

Step 7: Integrate the model into your applications or workflows for automated content generation.
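
Steps 5 and 6 can be sketched roughly as follows. This is a minimal illustration, not the Alpha-VLLM API: `placeholder_generate`, `GenerationParams`, and the parameter names `steps` and `guidance_scale` are hypothetical stand-ins, and each real model's documentation defines its own loading code and parameters. The sketch only shows the shape of the workflow: wrap the model call in a function, then run it once per parameter combination so the outputs can be compared.

```python
from dataclasses import dataclass
from itertools import product
from typing import Callable, Iterable, List, Tuple


@dataclass(frozen=True)
class GenerationParams:
    # Hypothetical parameter names for illustration only;
    # a real model documents its own settings.
    steps: int
    guidance_scale: float


def placeholder_generate(prompt: str, params: GenerationParams) -> str:
    """Stand-in for a real model call (Step 5).

    It returns a descriptive string instead of an image or audio clip,
    so the sketch runs without any model installed.
    """
    return (
        f"<output for {prompt!r} "
        f"steps={params.steps} guidance={params.guidance_scale}>"
    )


def sweep(
    prompt: str,
    generate: Callable[[str, GenerationParams], str],
    steps_options: Iterable[int],
    guidance_options: Iterable[float],
) -> List[Tuple[GenerationParams, str]]:
    """Run the generator once per parameter combination (Step 6)."""
    results = []
    for steps, guidance in product(steps_options, guidance_options):
        params = GenerationParams(steps=steps, guidance_scale=guidance)
        results.append((params, generate(prompt, params)))
    return results


if __name__ == "__main__":
    runs = sweep("a lighthouse at dusk", placeholder_generate, [20, 50], [4.0, 7.5])
    for params, output in runs:
        print(params, output)
```

To use this with a real model, replace `placeholder_generate` with a function that calls the model you loaded in Step 4 and keep the same signature, so the sweep code does not need to change.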
