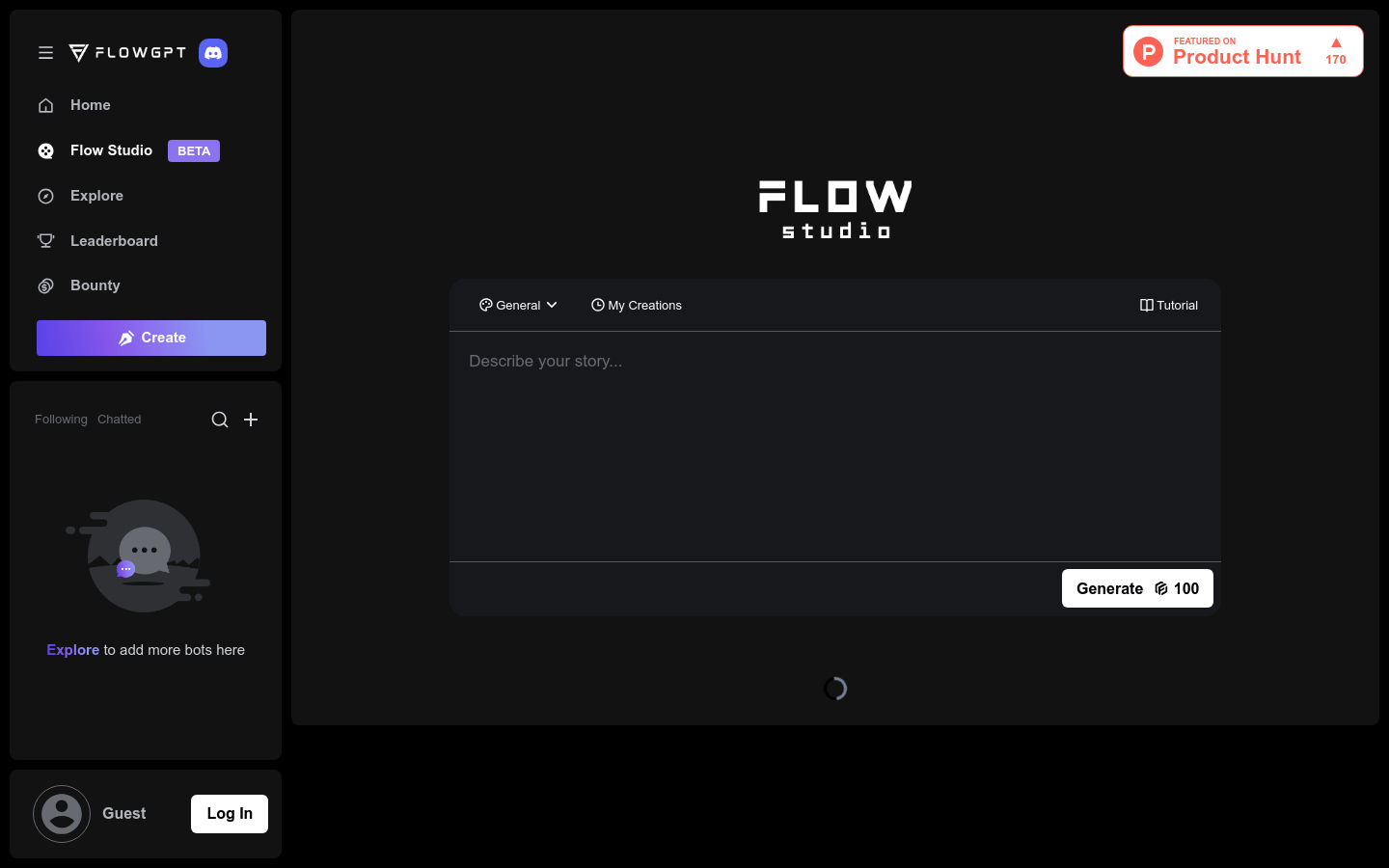

Flow Studio

Overview

Flow Studio is an AI video generation platform for producing high-quality, personalized video content. According to the platform, its AI algorithms can generate 3-minute videos in a short time, with results it claims are better than those of Luma, Pika, and Sora. Users create videos by selecting from different templates, characters, and scenes. Its stated main advantages are fast generation, realistic output, and ease of use.

Target Users

Flow Studio is aimed at users who need to produce video content quickly, such as video makers, advertisers, and social media operators. Because generation is AI-driven, high-quality videos can be produced in a short time, which improves work efficiency and reduces production costs.

Use Cases

Advertisers use Flow Studio to generate advertising videos, increasing ad appeal.

Social media operators utilize Flow Studio to create short videos, increasing user interaction.

Video makers use Flow Studio to quickly generate video drafts, improving production efficiency.

Features

Supports a range of video templates and character options to meet different user needs.

Uses AI to generate high-quality video content quickly.

Lets users customize personalized videos through simple operations.

Provides a preview function so users can view the generated result in real time.

Supports video export for different scenarios.

Offers a friendly, easy-to-use interface suitable for all types of users.

Provides tutorials and guidance to help users get started quickly.

How to Use

1. Visit the Flow Studio website.

2. Select a video template or character.

3. Customize video content and settings as needed.

4. Start the AI generation of the video.

5. Preview the generated video.

6. Export the video for your intended scenario.
