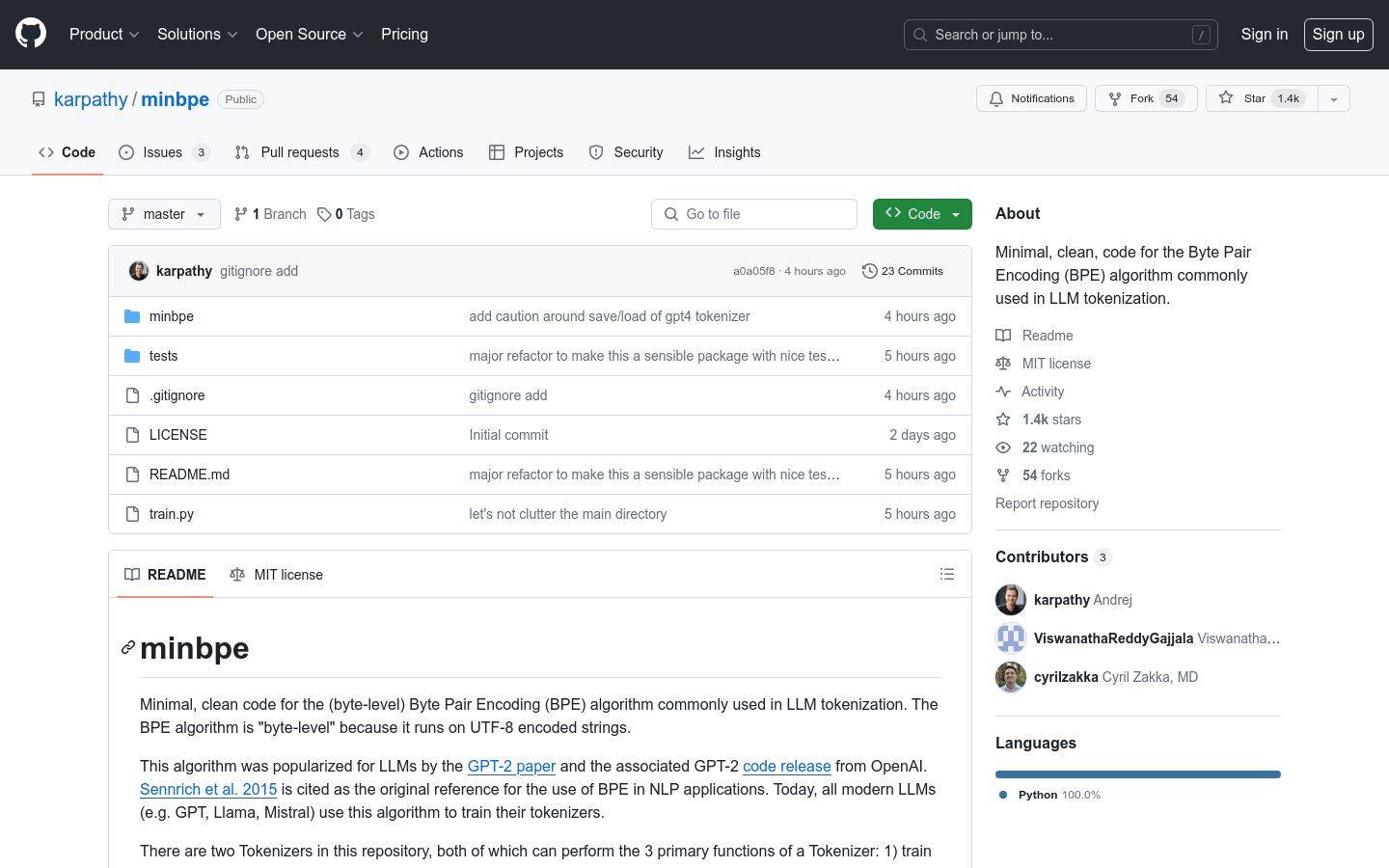

minbpe

Overview:

The minbpe project provides clean, educational implementations of the byte pair encoding (BPE) algorithm commonly used for tokenization in LLMs. It includes two Tokenizers that cover the main BPE functionality: training, encoding, and decoding. The code is concise and easy to read. The project has attracted considerable attention in the LLM and NLP community.

Target Users:

Developers building Transformer-based language models

Developers who need a BPE tokenizer for GPT-style models (BERT, by contrast, uses WordPiece rather than BPE)

Use Cases

BPE encoding of text using minbpe

Implementing a custom BPE tokenizer using minbpe

Training a tokenizer with minbpe to prepare text for language model training

Features

Implementation of BPE algorithm training

Implementation of BPE encoding for text

Implementation of decoding of BPE-encoded text

Saving and loading of trained tokenizers
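
The features listed above can be illustrated with a minimal, self-contained sketch of byte-level BPE. This is not minbpe's own code: the function names (`train`, `encode`, `decode`, `save`, `load`) and the JSON file format are assumptions made for illustration, and the sketch skips refinements such as regex pre-splitting and special tokens.

```python
import json
from collections import Counter


def pair_counts(ids):
    """Count how often each adjacent pair of token ids occurs."""
    return Counter(zip(ids, ids[1:]))


def merge(ids, pair, new_id):
    """Replace each non-overlapping occurrence of `pair` with `new_id`."""
    out, i = [], 0
    while i < len(ids):
        if i < len(ids) - 1 and (ids[i], ids[i + 1]) == pair:
            out.append(new_id)
            i += 2
        else:
            out.append(ids[i])
            i += 1
    return out


def train(text, vocab_size):
    """Learn up to `vocab_size - 256` merges on the UTF-8 bytes of `text`."""
    ids = list(text.encode("utf-8"))
    merges = {}  # (id, id) -> new id, stored in the order learned
    for new_id in range(256, vocab_size):
        counts = pair_counts(ids)
        if not counts:
            break  # fewer than two tokens left, nothing to merge
        pair = counts.most_common(1)[0][0]  # most frequent adjacent pair
        ids = merge(ids, pair, new_id)
        merges[pair] = new_id
    return merges


def encode(text, merges):
    """Apply the learned merges to new text, earliest-learned merge first."""
    ids = list(text.encode("utf-8"))
    while len(ids) >= 2:
        pairs = set(zip(ids, ids[1:]))
        pair = min(pairs, key=lambda p: merges.get(p, float("inf")))
        if pair not in merges:
            break  # no remaining pair was seen during training
        ids = merge(ids, pair, merges[pair])
    return ids


def decode(ids, merges):
    """Rebuild the byte string for each token id and decode it as UTF-8."""
    vocab = {i: bytes([i]) for i in range(256)}
    for (a, b), new_id in merges.items():  # insertion order = learned order
        vocab[new_id] = vocab[a] + vocab[b]
    return b"".join(vocab[i] for i in ids).decode("utf-8", errors="replace")


def save(merges, path):
    """Write the merges to a JSON file (format is an assumption of this sketch)."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump([[a, b, new_id] for (a, b), new_id in merges.items()], f)


def load(path):
    """Read merges back from a file written by `save`."""
    with open(path, encoding="utf-8") as f:
        return {(a, b): new_id for a, b, new_id in json.load(f)}


if __name__ == "__main__":
    text = "aaabdaaabac"
    merges = train(text, 256 + 3)  # 256 byte tokens plus 3 merges
    ids = encode(text, merges)
    print(ids)                  # [258, 100, 258, 97, 99]
    print(decode(ids, merges))  # aaabdaaabac
```

Starting from the 256 raw byte values keeps the base vocabulary fixed and guarantees that any input string can be encoded, even if none of the learned merges apply to it.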
