Generative Rendering: 2D Mesh

Overview:

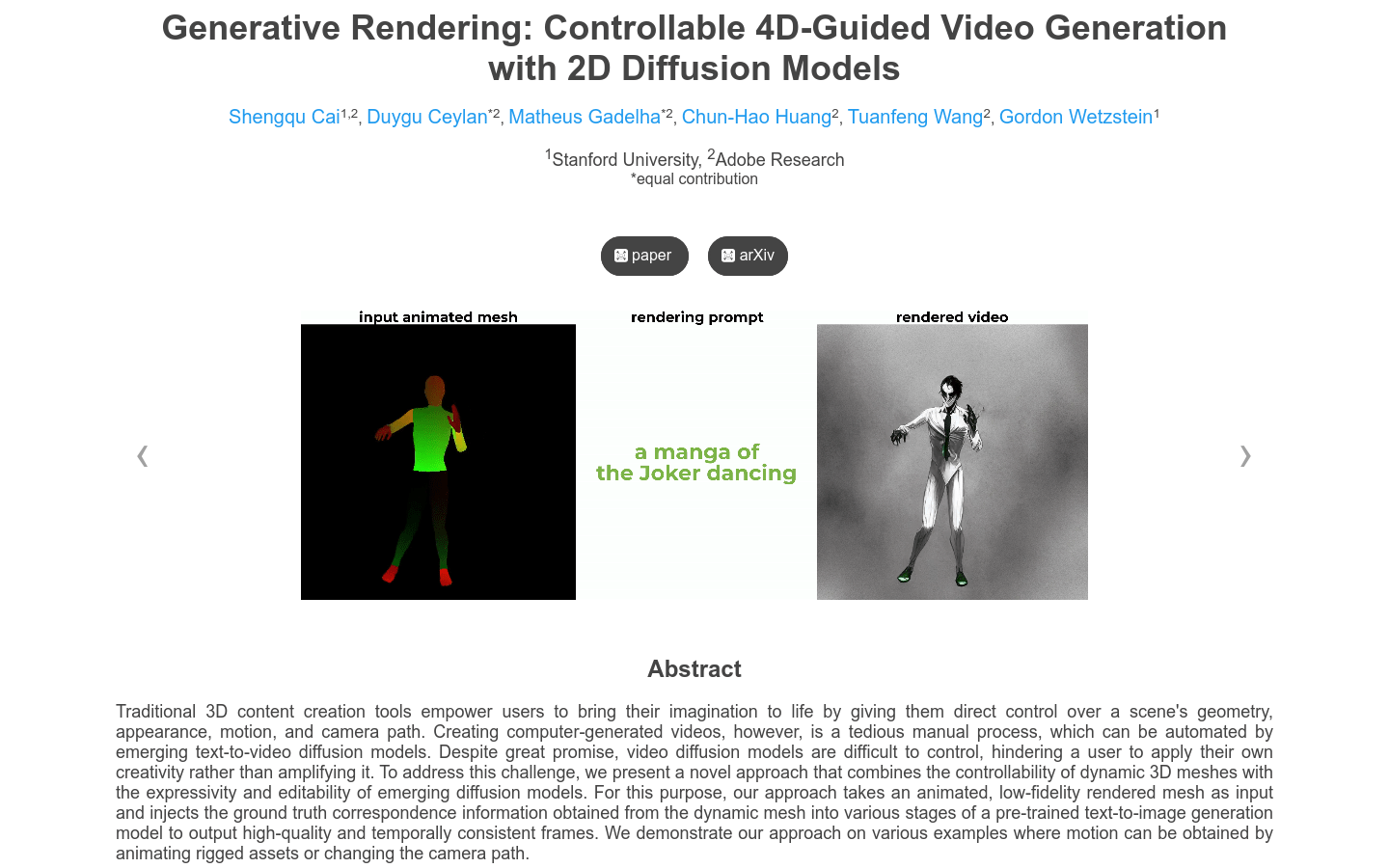

Traditional 3D content creation tools give users direct control over scene geometry, appearance, motion, and camera paths, letting them turn their imagination into reality. However, creating computer-generated videos remains a tedious manual process, which emerging text-to-video diffusion models could automate. Despite their promise, the lack of control in video diffusion models makes it hard for users to apply their own creativity rather than amplifying it. To address this challenge, we propose a novel approach that combines the controllability of dynamic 3D meshes with the expressiveness and editability of emerging diffusion models. Our method takes an animated, low-fidelity rendered mesh as input and injects ground-truth correspondences derived from the dynamic mesh into various stages of a pre-trained text-to-image generation model, producing high-quality, temporally consistent frames. We demonstrate our method on various examples, where motion is obtained by animating rigged assets or changing the camera path.
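To make the idea of injecting mesh correspondences more concrete, here is a minimal sketch of one place it can enter: the initial noise. It assumes per-frame UV maps of shape (F, H, W, 2) with coordinates in [0, 1], a boolean object mask per frame, a nearest-texel lookup into one square noise texture, and fresh Gaussian noise on background pixels. The function name `render_uv_noise` and all of these choices are illustrative assumptions, not the authors' code.

```python
import numpy as np


def render_uv_noise(uv_maps, masks, tex_res=64, channels=4, seed=0):
    """Render one shared UV-space noise texture into every frame.

    uv_maps: (F, H, W, 2) array of UV coordinates in [0, 1] (u along x, v along y).
    masks:   (F, H, W) boolean array, True where the object is visible.
    Returns an (F, H, W, channels) array of per-frame noise.
    """
    rng = np.random.default_rng(seed)
    # One Gaussian noise texture shared by all frames (hypothetical resolution).
    texture = rng.standard_normal((tex_res, tex_res, channels))
    # Nearest-texel lookup: quantize UV coordinates to integer texel indices.
    idx = np.clip((uv_maps * tex_res).astype(int), 0, tex_res - 1)
    sampled = texture[idx[..., 1], idx[..., 0]]  # (F, H, W, channels)
    # Background pixels have no UV correspondence, so give them fresh i.i.d. noise.
    background = rng.standard_normal(sampled.shape)
    return np.where(masks[..., None], sampled, background)
```

Because the lookup depends only on UV, a surface point that moves across the screen receives the same noise value in every frame where it is visible, which is the kind of cross-frame consistency the correspondences are meant to provide.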

Target Users:

Suitable for scenarios requiring controlled video generation, such as animation production and special effects production.

Use Cases

Animation Production: Utilize the generative rendering model to create realistic animation scenes

Special Effects Production: Generate special effects video clips using this model

Post-Production for Film and Television: Apply the model to post-production special effects in movies or television programs

Features

Accepts UV and depth maps from animated 3D scenes as input

Uses a depth-conditioned ControlNet to generate the corresponding frames, while UV correspondences keep them consistent

Initializes noise in UV space for each object and renders it into every frame

At each diffusion step, uses extended attention to extract pre-attention and post-attention features for a set of keyframes

Projects the keyframes' post-attention features into UV space and unifies them

Finally, generates all frames from a weighted combination of the extended-attention output (computed with the keyframes' pre-attention features) and the keyframes' UV-unified post-attention features
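The keyframe steps in the feature list can be sketched for a single attention layer as follows. This is a simplified illustration under stated assumptions, not the method's implementation: each frame is flattened to N tokens that each carry one UV coordinate, the query/key/value projections are taken as the identity (a real U-Net attention layer uses learned projections), "unifying" in UV space is modeled as averaging features that land on the same texel, and the blend uses one scalar weight `alpha`. All function names are hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def extended_attention(x, context):
    """Attend from tokens x (N, D) to all tokens of the frames in context (K, N, D).

    Identity Q/K/V projections are a simplifying assumption.
    """
    kv = context.reshape(-1, context.shape[-1])  # concatenate tokens of all keyframes
    scores = x @ kv.T / np.sqrt(x.shape[-1])
    return softmax(scores) @ kv


def texel_ids(uv, tex_res):
    """Map (N, 2) UV coordinates in [0, 1] to flat nearest-texel indices."""
    ij = np.clip((uv * tex_res).astype(int), 0, tex_res - 1)
    return ij[:, 1] * tex_res + ij[:, 0]


def unify_in_uv(features, uvs, tex_res):
    """Average (K, N, D) keyframe features per texel; also return which texels were hit."""
    d = features.shape[-1]
    ids = np.concatenate([texel_ids(uv, tex_res) for uv in uvs])
    tex_sum = np.zeros((tex_res * tex_res, d))
    counts = np.zeros(tex_res * tex_res)
    np.add.at(tex_sum, ids, features.reshape(-1, d))  # accumulate repeated indices
    np.add.at(counts, ids, 1)
    return tex_sum / np.maximum(counts, 1)[:, None], counts > 0


def generative_rendering_step(frame_feats, frame_uvs, key_idx, tex_res, alpha=0.5):
    """frame_feats: (F, N, D) pre-attention features; frame_uvs: (F, N, 2)."""
    key_feats = frame_feats[key_idx]  # (K, N, D) pre-attention keyframe features
    # 1. Extended attention among keyframes gives their post-attention features.
    key_post = np.stack([extended_attention(x, key_feats) for x in key_feats])
    # 2. Project the keyframe post-attention features into UV space and unify them.
    texture, covered = unify_in_uv(key_post, frame_uvs[key_idx], tex_res)
    # 3. For every frame, blend attention over keyframe pre-attention features
    #    with the UV-unified features rendered back through that frame's UVs.
    out = []
    for x, uv in zip(frame_feats, frame_uvs):
        attn = extended_attention(x, key_feats)
        ids = texel_ids(uv, tex_res)
        blended = alpha * attn + (1 - alpha) * texture[ids]
        # Texels never seen by a keyframe fall back to the attention output.
        out.append(np.where(covered[ids][:, None], blended, attn))
    return np.stack(out)
```

Setting `alpha=1.0` reduces the step to plain extended attention over the keyframes, while smaller values pull every frame toward the shared UV-space features; how the actual method weights these terms is not specified here.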
