MotionDirector

Overview

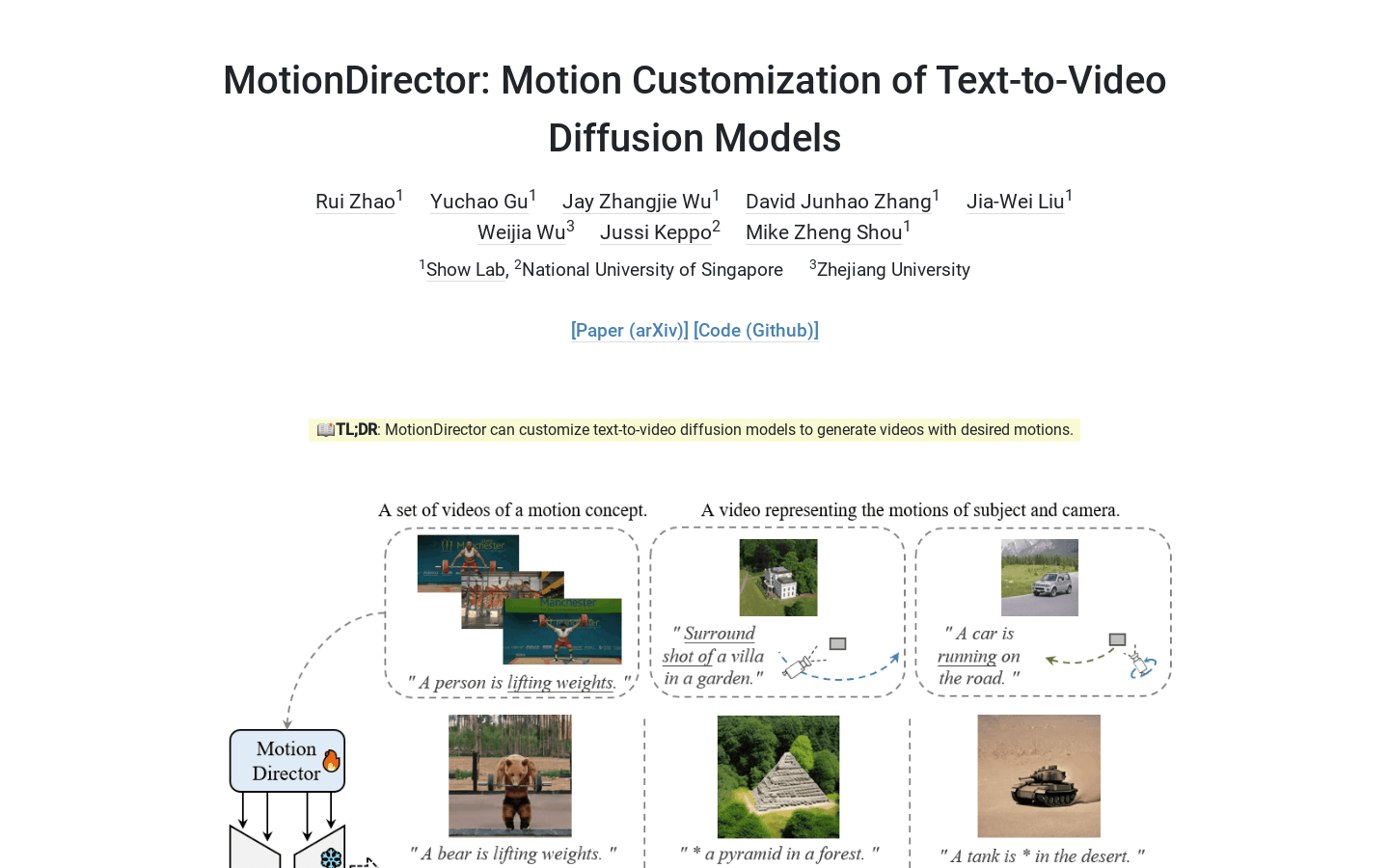

MotionDirector is a technique for customizing text-to-video diffusion models to generate videos with desired motions. It uses a dual-path LoRA architecture to decouple the learning of appearance and motion, and introduces an appearance-debiased temporal loss to mitigate the influence of appearance on the temporal training objective. The method supports downstream applications such as mixing the appearance and motion of different videos, and animating a single image with a customized motion.

Target Users

MotionDirector is aimed at people working in video generation and animation production who want to customize a text-to-video diffusion model so that it generates videos with a specific motion.

Use Cases

Customizing videos of vehicle movement and specific camera shots in film production

Generating custom videos, such as a bear lifting weights, as inspiration for creators

Mixing the appearances and motions of different videos to generate new videos

Features

Learns appearance and motion from reference videos

During training, spatial LoRAs learn to fit the appearance of the reference videos, while temporal LoRAs learn their motion dynamics

During inference, the trained temporal LoRAs are injected into the base model, allowing it to generalize the learned motions to different appearances
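The split between training and inference described above can be illustrated with a toy sketch. This is not MotionDirector's actual code: the names `LoRA`, `AdaptedLayer`, `inject`, and `remove` are hypothetical, the "layers" are tiny plain-Python matrices, and the training step is faked by writing values into the LoRA factors. It only shows the idea that each frozen base weight can carry a removable low-rank update, and that at inference only the temporal (motion) update is kept.

```python
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def add_scaled(a, b, scale=1.0):
    """Return a + scale * b, element-wise."""
    return [[x + scale * y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def identity(n):
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]


class LoRA:
    """Low-rank update delta_W = B @ A. B starts at zero, so the update is a no-op before training."""

    def __init__(self, d_out, d_in, rank, rng):
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]

    def delta(self):
        return matmul(self.B, self.A)


class AdaptedLayer:
    """A frozen base weight plus any number of named, removable LoRA updates (hypothetical helper)."""

    def __init__(self, weight):
        self.weight = weight  # frozen base-model weight, never modified
        self.loras = {}

    def inject(self, name, lora, scale=1.0):
        self.loras[name] = (lora, scale)

    def remove(self, name):
        self.loras.pop(name, None)

    def effective_weight(self):
        w = self.weight
        for lora, scale in self.loras.values():
            w = add_scaled(w, lora.delta(), scale)
        return w


rng = random.Random(0)
dim, rank = 4, 2

# Toy stand-ins for one spatial and one temporal layer of the base model.
spatial_layer = AdaptedLayer(identity(dim))
temporal_layer = AdaptedLayer(identity(dim))

spatial_lora = LoRA(dim, dim, rank, rng)   # would learn appearance
temporal_lora = LoRA(dim, dim, rank, rng)  # would learn motion
untrained_delta = temporal_lora.delta()    # all zeros before "training"

# Training configuration: both kinds of LoRA are attached.
spatial_layer.inject("appearance", spatial_lora)
temporal_layer.inject("motion", temporal_lora)

# Stand-in for gradient updates (assumption: real training would optimize A and B).
spatial_lora.B = [[0.5] * rank for _ in range(dim)]
temporal_lora.B = [[-0.5] * rank for _ in range(dim)]

# Inference configuration: keep only the temporal LoRA on the base model.
spatial_layer.remove("appearance")

spatial_unchanged = spatial_layer.effective_weight() == identity(dim)
temporal_changed = temporal_layer.effective_weight() != identity(dim)
print("spatial layer back to base weights:", spatial_unchanged)
print("temporal layer carries learned motion update:", temporal_changed)
```

Because the base weights are never modified, dropping the spatial LoRA restores the base model's own appearance prior, while the temporal update remains in place. Under the same assumptions, pairing a spatial LoRA trained on one video with a temporal LoRA trained on another would correspond to the appearance and motion mixing use case listed earlier.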
