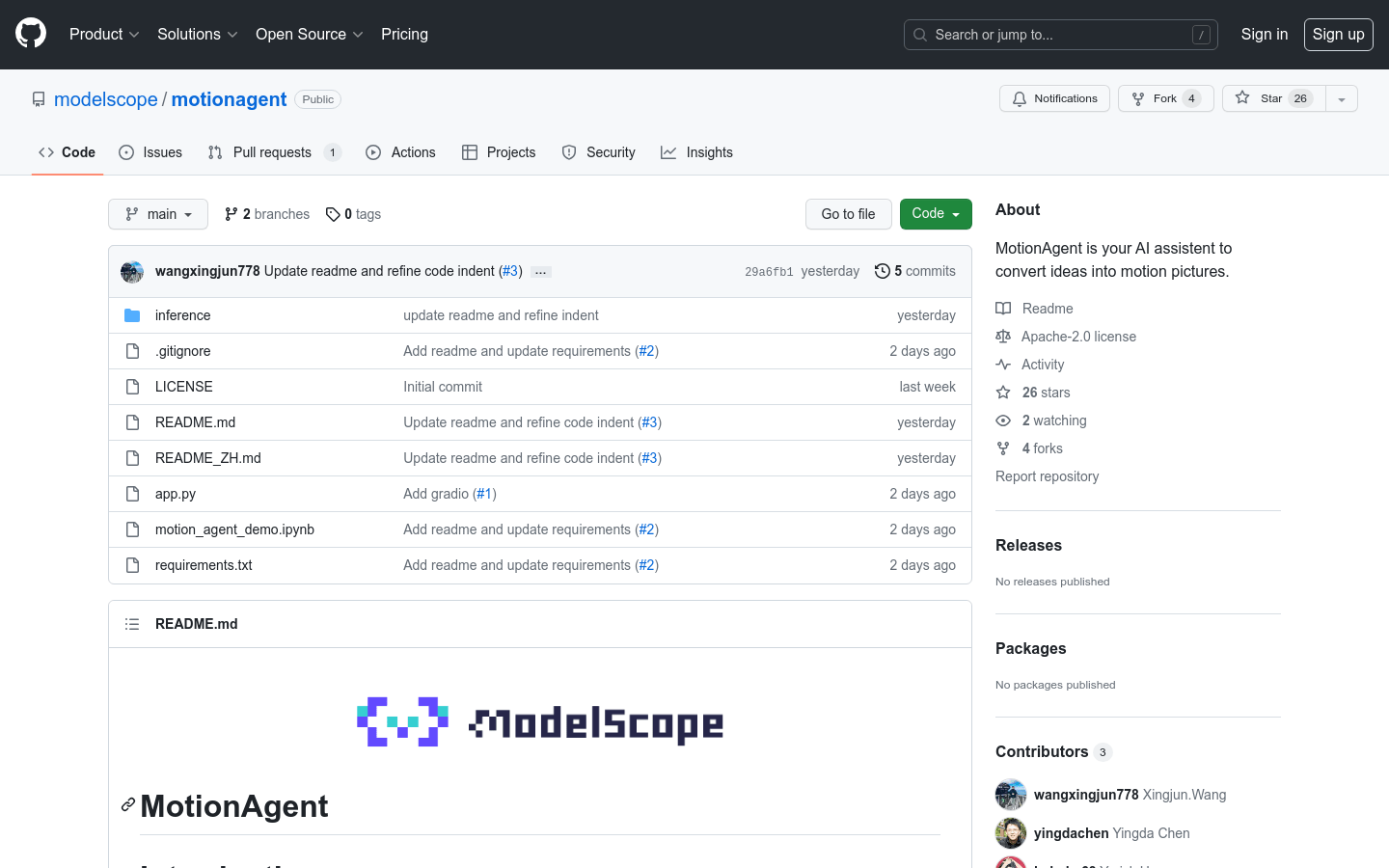

MotionAgent

Overview

MotionAgent is a deep learning-based video creation tool for writing scripts, generating cinematic stills, producing videos from images, and composing custom background music. It is supported by ModelScope, an open-source model community. It has four main functions: script generation, which produces scripts in different styles from a user-specified story theme and background; cinematic still generation based on those scripts; video production, which converts images into high-definition video; and background music creation with a customizable musical style. MotionAgent is aimed at video creators, film industry professionals, and video enthusiasts who want help with creative scriptwriting and video content production.

Target Users

Video Content Creators, Film Industry Professionals, Video Enthusiasts

Use Cases

Users can specify a theme such as 'Youth Campus', and MotionAgent will generate a script in a matching campus-youth style.

Users can input a textual description of a scene, and MotionAgent will produce a corresponding cinematic still.

Users can upload a set of cinematic stills, and MotionAgent will generate a cohesive video from them.

Users can specify a musical style, and MotionAgent will create customized background music.

Features

Script Generation

Cinematic Stills Generation

Video Production

Background Music Creation
