Mistral Small 3.1

Overview

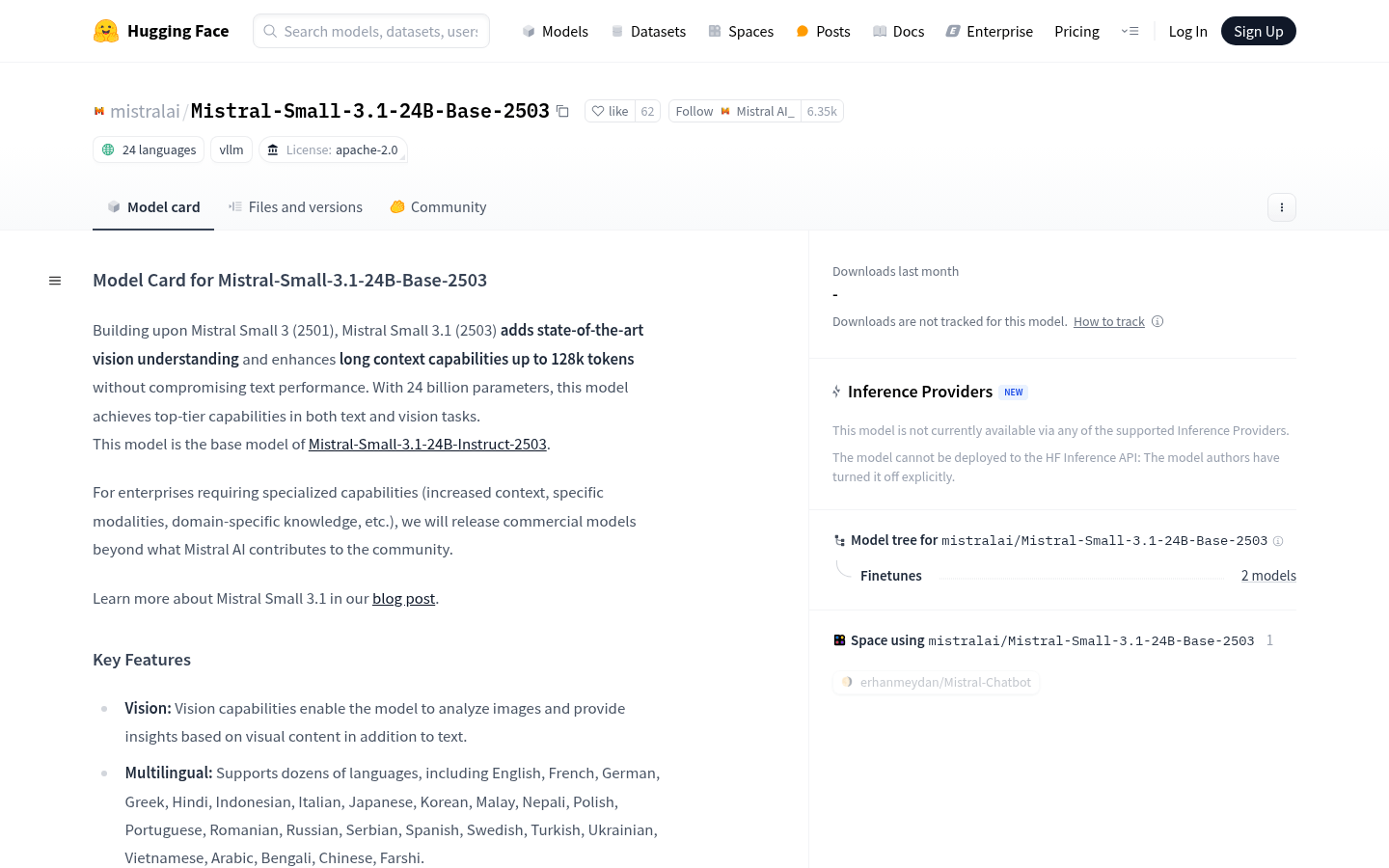

Mistral-Small-3.1-24B-Base-2503 is an open-source model with 24 billion parameters. It supports multilingual and long-context processing and handles both text and visual tasks. As the base model of Mistral Small 3.1, it offers strong multimodal capabilities and is aimed at enterprise use.

Target Users

This product suits enterprises, researchers, and developers, especially those who need to process text and image data efficiently in their AI applications.

Use Cases

Analyze images and generate descriptive text.

Perform multilingual text understanding and generation.

Support in-depth conversation and analysis of long texts.

Features

Multimodal analysis: Capable of processing both text and visual input, providing in-depth analysis.

Multilingual support: Supports dozens of languages, suitable for global users.

Large context window: Features a 128k-token context window, capable of handling long texts.

Open-source license: Uses the Apache 2.0 license, supporting commercial and non-commercial use.

Efficient tokenizer: Uses the Tekken tokenizer, with a vocabulary of 131k tokens.

How to Use

Install the vLLM library: Install the latest version of the vLLM library using pip.

Download the model: Load the Mistral-Small-3.1-24B-Base-2503 model by specifying its name.

Prepare input: Prepare text and image input as needed.

Encode input: Use the model's tokenizer to convert the input into the format required by the model.

Generate output: Call the model to generate results based on the input.
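The steps above can be sketched for the text-only case as follows. This is a minimal illustration, not an official recipe. It assumes vLLM was installed with `pip install -U vllm` and that its OpenAI-compatible server was started separately with `vllm serve` on the default `http://localhost:8000`. The repository id `mistralai/Mistral-Small-3.1-24B-Base-2503` and the helper names `build_completion_request` and `send_completion_request` are assumptions made for the example. Because this is a base model, the sketch uses plain text completion rather than a chat format; image input is not covered here.

```python
import json
import urllib.request

# Assumed Hugging Face repository id for the model named above.
MODEL_NAME = "mistralai/Mistral-Small-3.1-24B-Base-2503"


def build_completion_request(prompt, max_tokens=128, temperature=0.0):
    """Build the JSON body for a text completion request (hypothetical helper)."""
    if not prompt:
        raise ValueError("prompt must be a non-empty string")
    return {
        "model": MODEL_NAME,
        "prompt": prompt,
        "max_tokens": max_tokens,
        "temperature": temperature,
    }


def send_completion_request(payload, base_url="http://localhost:8000"):
    """POST the payload to a running vLLM server and return the generated text.

    Assumes the server exposes the OpenAI-compatible /v1/completions route.
    """
    request = urllib.request.Request(
        f"{base_url}/v1/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["text"]


# Prepare the input; the server tokenizes the prompt on its side.
payload = build_completion_request("The capital of France is", max_tokens=16)
print(json.dumps(payload, indent=2))

# With a server running, the output could then be generated with:
# text = send_completion_request(payload)
```

Keeping the payload construction separate from the network call makes it possible to inspect or log the request before a server is available.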
