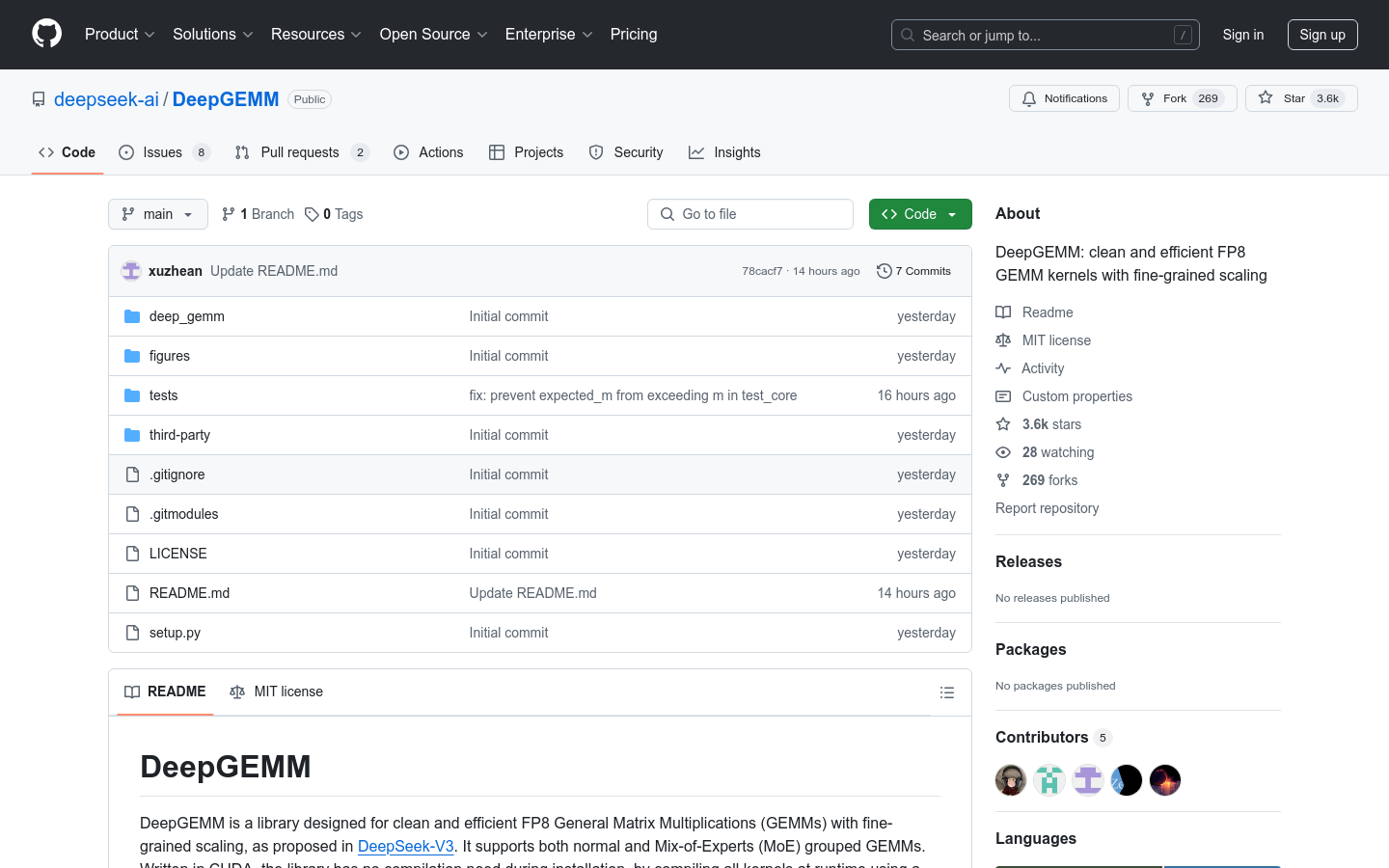

DeepGEMM

Overview

DeepGEMM is a CUDA library for high-performance FP8 general matrix multiplication (GEMM). It combines fine-grained scaling with Hopper-specific optimizations such as the Tensor Memory Accelerator (TMA), persistent warp specialization, and a fully JIT design. It targets deep learning and high-performance computing workloads that need efficient matrix operations. It currently supports only the Tensor Cores of NVIDIA's Hopper architecture, where its performance matches or exceeds expert-tuned libraries across a range of matrix shapes. The design is compact, with a core kernel function of roughly 300 lines, which makes it easy to study and use. Because it is open source and free, it is a practical choice for researchers and developers working on deep learning optimization.
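Fine-grained scaling gives each small block of values its own scaling factor, so an outlier only affects the block it lives in instead of the whole tensor. Below is a minimal pure-Python sketch of the arithmetic, assuming 128-element scaling blocks along the reduction dimension K and simulating FP8 E4M3 rounding in software. The function names are hypothetical and this is not DeepGEMM's API.

```python
import math
import random

FP8_E4M3_MAX = 448.0  # largest finite magnitude in FP8 E4M3
BLOCK = 128           # assumed scaling-block length along K


def round_to_e4m3(x: float) -> float:
    """Simulate rounding x to FP8 E4M3 (3 mantissa bits), saturating at +/-448."""
    if x == 0.0:
        return 0.0
    # Binade of |x|; below 2**-6 E4M3 is subnormal and the spacing stops shrinking.
    exp = max(math.floor(math.log2(abs(x))), -6)
    step = 2.0 ** (exp - 3)  # gap between neighbouring representable values
    y = round(x / step) * step
    return max(-FP8_E4M3_MAX, min(FP8_E4M3_MAX, y))


def quantize_blockwise(v):
    """Quantize v in BLOCK-sized chunks, each chunk getting its own scale."""
    q, scales = [], []
    for start in range(0, len(v), BLOCK):
        chunk = v[start:start + BLOCK]
        amax = max(abs(x) for x in chunk) or 1.0  # all-zero chunk: any scale works
        scale = amax / FP8_E4M3_MAX  # maps the chunk's largest magnitude onto 448
        q.extend(round_to_e4m3(x / scale) for x in chunk)
        scales.append(scale)
    return q, scales


def scaled_dot(qa, sa, qb, sb):
    """Dot product of two blockwise-quantized vectors of equal length."""
    total = 0.0
    for i, (scale_a, scale_b) in enumerate(zip(sa, sb)):
        lo, hi = i * BLOCK, (i + 1) * BLOCK
        partial = sum(x * y for x, y in zip(qa[lo:hi], qb[lo:hi]))
        total += partial * scale_a * scale_b  # rescale once per block, then accumulate
    return total


if __name__ == "__main__":
    random.seed(0)
    a = [random.uniform(-1, 1) for _ in range(512)]
    b = [random.uniform(-1, 1) for _ in range(512)]
    a[0] = 50.0  # the outlier only inflates the scale of the first block
    qa, sa = quantize_blockwise(a)
    qb, sb = quantize_blockwise(b)
    exact = sum(x * y for x, y in zip(a, b))
    print(f"exact={exact:.4f}  blockwise FP8={scaled_dot(qa, sa, qb, sb):.4f}")
```

The real kernels run the block products on Hopper Tensor Cores; the sketch only mirrors the idea of quantizing per block, multiplying the FP8 values, and applying both blocks' scales once per block while accumulating.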

Target Users

DeepGEMM is primarily designed for deep learning researchers and developers who need efficient matrix multiplication on NVIDIA Hopper GPUs. It suits workloads that depend on fast FP8 matrix multiplication, such as training and inference of large-scale deep learning models. Because it is open source, free, and easy to integrate, it is a good fit for developers who want to speed up matrix computation in existing projects.

Use Cases

Accelerate FP8 matrix multiplication in deep learning model training using DeepGEMM to significantly improve training speed.

Optimize the computational performance of Mixture-of-Experts (MoE) models during inference using DeepGEMM's grouped GEMM functionality.

Integrate DeepGEMM into existing deep learning frameworks to leverage its optimization techniques and enhance overall system efficiency.
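To see what a grouped GEMM computes for MoE, here is a plain reference implementation, loosely modeled on what DeepGEMM calls the contiguous layout: tokens routed to the same expert are stored next to each other along M, and each segment is multiplied by that expert's weight matrix. It is a pure-Python sketch with hypothetical names, no FP8 and no GPU; a real grouped kernel performs all segments in a single launch.

```python
from typing import List

Matrix = List[List[float]]


def matmul_nt(a: Matrix, b: Matrix) -> Matrix:
    """Return a @ b.T for a of shape (m, k) and b of shape (n, k)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in b] for row in a]


def grouped_gemm_contiguous(a: Matrix, expert_ids: List[int], weights: List[Matrix]) -> Matrix:
    """Multiply each row of `a` by the weight matrix of the expert it was routed to.

    Rows sent to the same expert are expected to sit next to each other, so each
    run of equal expert ids is handled as one ordinary GEMM.
    """
    assert len(a) == len(expert_ids)
    out: Matrix = []
    start = 0
    while start < len(a):
        expert = expert_ids[start]
        end = start
        while end < len(a) and expert_ids[end] == expert:
            end += 1  # extend the segment while rows keep the same expert
        out.extend(matmul_nt(a[start:end], weights[expert]))
        start = end
    return out


if __name__ == "__main__":
    tokens = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]  # 3 tokens, hidden size k = 2
    routing = [0, 0, 1]                            # tokens 0-1 -> expert 0, token 2 -> expert 1
    experts = [
        [[1.0, 0.0], [0.0, 1.0]],  # expert 0: identity (n = 2, k = 2)
        [[2.0, 0.0], [0.0, 2.0]],  # expert 1: scales by 2
    ]
    print(grouped_gemm_contiguous(tokens, routing, experts))  # [[1.0, 2.0], [3.0, 4.0], [10.0, 12.0]]
```

Grouping rows by expert is what lets a single kernel treat many small per-expert GEMMs as one large, well-utilized operation.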

Features

Supports efficient FP8 GEMM operations for deep learning workloads.

Employs fine-grained scaling, with a separate scaling factor per small block of values, to preserve accuracy in low-precision FP8 computation.

Uses the Hopper architecture's Tensor Memory Accelerator (TMA) for fast, asynchronous data movement.

Uses a fully JIT design: no kernels are compiled at installation time, and all kernels are compiled at runtime, so values such as GEMM shapes and block sizes can be treated as compile-time constants.

Supports various matrix multiplication scenarios, including standard GEMM and grouped GEMM.

Applies additional optimizations such as persistent warp specialization and FFMA SASS instruction interleaving.

Offers a simple and user-friendly API for easy integration into existing projects.

How to Use

1. Clone the DeepGEMM repository and initialize submodules: `git clone --recursive https://github.com/deepseek-ai/DeepGEMM.git`

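2. Make sure the requirements are met: a Hopper-architecture GPU (sm_90a), Python 3.8+, CUDA 12.3+, PyTorch 2.1+, and CUTLASS 3.6+ (fetched as a Git submodule by the recursive clone)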

3. Install in development mode using `python setup.py develop`

4. Test JIT compilation and core functionalities: `python tests/test_jit.py` and `python tests/test_core.py`

5. Import the `deep_gemm` module in your Python project and call its GEMM functions
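Before building, the version minimums from step 2 can be checked with a small script. This is a hypothetical helper, not part of DeepGEMM: it only compares version strings against Python 3.8, CUDA 12.3 and PyTorch 2.1, and it does not detect whether a Hopper GPU is present.

```python
import itertools
import platform
from typing import Dict, List, Tuple

# Minimum versions taken from step 2 (hypothetical helper, not part of DeepGEMM).
MINIMUM_VERSIONS: Dict[str, Tuple[int, ...]] = {
    "python": (3, 8),
    "cuda": (12, 3),
    "torch": (2, 1),
}


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn e.g. '2.1.0+cu121' into (2, 1, 0); suffixes like '+cu121' or 'rc1' are dropped."""
    numbers = []
    for piece in text.split("+")[0].split("."):
        digits = "".join(itertools.takewhile(str.isdigit, piece))
        if not digits:
            break  # stop at the first non-numeric component
        numbers.append(int(digits))
    return tuple(numbers)


def missing_requirements(installed: Dict[str, str]) -> List[str]:
    """Return components that are absent or older than the required minimum."""
    problems = []
    for name, minimum in MINIMUM_VERSIONS.items():
        version = installed.get(name)
        # Tuple comparison is element-wise, so (2, 0, 1) < (2, 1) is True.
        if version is None or parse_version(version) < minimum:
            problems.append(name)
    return problems


if __name__ == "__main__":
    installed = {
        "python": platform.python_version(),
        "cuda": "12.8",          # placeholder; use torch.version.cuda in practice
        "torch": "2.6.0+cu128",  # placeholder; use torch.__version__ in practice
    }
    print(missing_requirements(installed) or "all version requirements met")
```

In a real environment the CUDA and PyTorch values would come from `torch.version.cuda` and `torch.__version__`; the hard-coded strings above are placeholders.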
