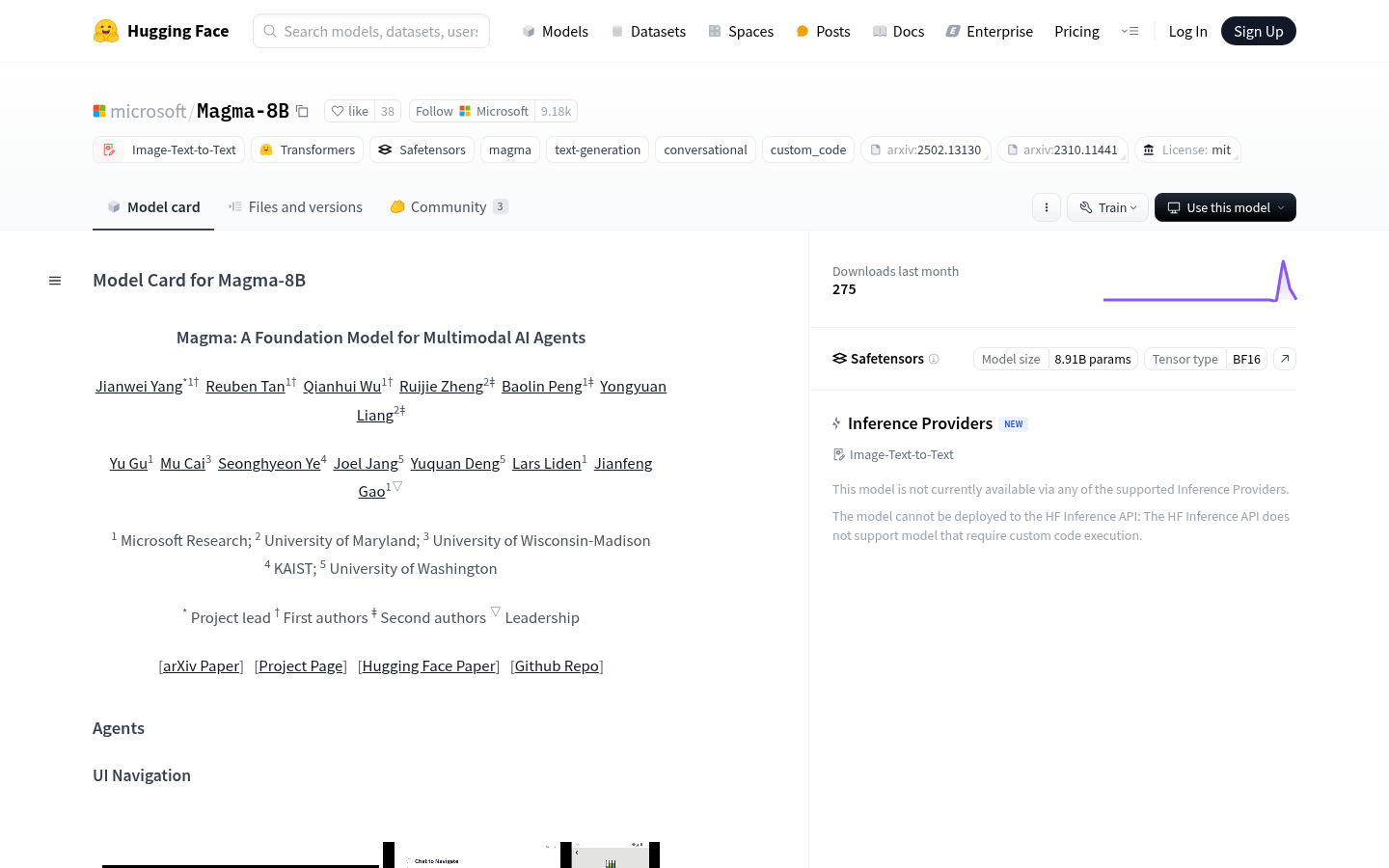

Magma-8B

Overview

Magma-8B is a multi-modal foundation model developed by Microsoft for research on multi-modal AI agents. It takes text and images as input and generates text as output, and it adds visual planning and agentic capabilities on top of general image and text understanding. The model uses Meta Llama 3 as its language model backbone and a CLIP-ConvNeXt-XXLarge vision encoder. Because it learns spatiotemporal relationships from unlabeled video data, it generalizes across tasks and is particularly strong at spatial understanding and reasoning. These properties make it a useful tool for studying complex interactions in both virtual and real-world environments.

Target Users

This model is aimed at multi-modal AI researchers and at developers working on tasks that combine images and text. It is most relevant to work on human-computer interaction, such as UI navigation, and on robotic manipulation.

Use Cases

In UI navigation tasks, Magma-8B can generate correct operation instructions, such as clicking specific buttons, based on image input.

In robotic manipulation tasks, the model can generate the robotic arm's operation path based on video input.

In multi-modal question answering tasks, Magma-8B can generate accurate answers by combining image and text inputs.

Features

Supports text generation conditioned on images and videos, such as caption generation and question answering.

Possesses visual planning capabilities, generating visual trajectories for task completion.

Enables UI grounding (e.g., clicking buttons) and robotic manipulation (e.g., robotic arm control).

Learns spatiotemporal relationships from unlabeled video data, enhancing generalization ability.

Exhibits strong performance in multi-modal tasks, especially in spatial and temporal understanding.

How to Use

1. Install necessary dependencies, such as transformers, torch, torchvision, Pillow, and open_clip_torch.

2. Load the Magma-8B model and processor using the transformers library.

3. Prepare input data, including images and text prompts.

4. Preprocess the input data using the processor and pass it to the model.

5. Call the model's generation function to obtain the text output.

6. Decode and post-process the generated text to obtain the final output.
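The steps above can be sketched in Python roughly as follows. This is a minimal sketch under several assumptions, not a definitive recipe: the repository id `microsoft/Magma-8B`, the image placeholder tokens, the `texts=` keyword, and the extra batch dimension added to `pixel_values` and `image_sizes` all follow the usage published on the model's Hugging Face card as recalled here and may differ between releases. The system prompt and the helper names `build_conversation` and `describe_image` are illustrative only. Loading the model requires `trust_remote_code=True`, which executes code from the model repository.

```python
MODEL_ID = "microsoft/Magma-8B"  # assumed Hugging Face repository id

# Assumed placeholder marking where the image is spliced into the prompt.
IMAGE_PLACEHOLDER = "<image_start><image><image_end>"


def build_conversation(question: str) -> list:
    """Step 3: pair a text prompt with a placeholder for one image."""
    return [
        # Illustrative system prompt; adjust to your task.
        {"role": "system", "content": "You are an agent that can see, talk and act."},
        {"role": "user", "content": f"{IMAGE_PLACEHOLDER}\n{question}"},
    ]


def describe_image(image_path: str, question: str, device: str = "cuda") -> str:
    """Steps 2 to 6: load the model, preprocess, generate, and decode."""
    # Heavy imports are deferred so build_conversation works without them.
    import torch
    from PIL import Image
    from transformers import AutoModelForCausalLM, AutoProcessor

    dtype = torch.bfloat16

    # Step 2: load the model and its processor (runs code from the repo).
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID, trust_remote_code=True, torch_dtype=dtype
    ).to(device)
    processor = AutoProcessor.from_pretrained(MODEL_ID, trust_remote_code=True)

    # Step 3: prepare the image and render the chat prompt as plain text.
    image = Image.open(image_path).convert("RGB")
    prompt = processor.tokenizer.apply_chat_template(
        build_conversation(question), tokenize=False, add_generation_prompt=True
    )

    # Step 4: preprocess. The keyword `texts` and the unsqueeze calls are
    # assumptions taken from the model card, not generic transformers API.
    inputs = processor(images=[image], texts=prompt, return_tensors="pt")
    inputs["pixel_values"] = inputs["pixel_values"].unsqueeze(0)
    inputs["image_sizes"] = inputs["image_sizes"].unsqueeze(0)
    inputs = inputs.to(device).to(dtype)

    # Step 5: greedy generation of up to 128 new tokens.
    with torch.inference_mode():
        output_ids = model.generate(**inputs, max_new_tokens=128, do_sample=False)

    # Step 6: drop the prompt tokens, then decode only the newly generated text.
    new_tokens = output_ids[:, inputs["input_ids"].shape[-1]:]
    return processor.decode(new_tokens[0], skip_special_tokens=True).strip()
```

Calling `describe_image("screenshot.png", "What is in this image?")` on a machine with a suitable GPU would download the weights on first use and return the model's answer as a string; the file name here is a placeholder.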
