SyncAnimation

Overview

SyncAnimation is an audio-driven technology that generates highly realistic speaking avatars and upper-body movements in real time. By combining audio with synchronized pose and expression generation, it addresses the shortcomings of traditional methods in real-time performance and detail rendering. It primarily targets scenarios that require high-quality real-time animation, such as virtual streaming, online education, and remote conferencing, where it has significant practical value. Its pricing and market positioning have yet to be determined.

Target Users

SyncAnimation suits industries that need high-quality animation generated in real time, such as virtual streaming, online education, film production, and game development. These fields must produce realistic animated content quickly with limited resources, a need that SyncAnimation's audio-driven, high-precision generation is designed to meet.

Use Cases

In news reporting, use SyncAnimation to generate a virtual reporter's avatar and upper-body movements so that the reporter speaks in sync with the audio.

On online education platforms, use this technology to create animated virtual teachers, making lessons more engaging and interactive.

In game development, leverage audio-driven technology to generate real-time expressions and movements for characters, enhancing the immersive experience.

Features

Generate highly realistic speaking avatars and upper body movements using audio-driven technology

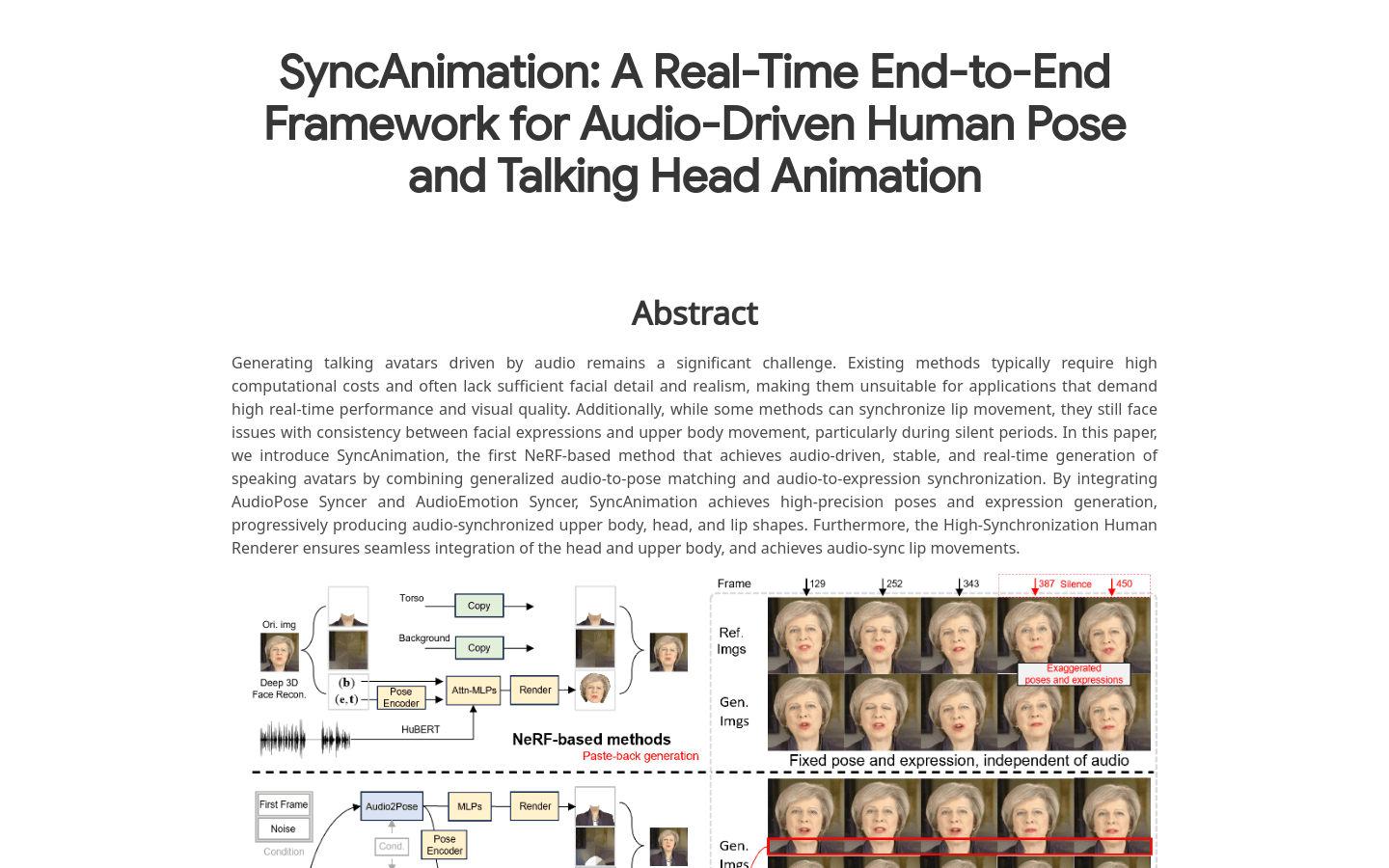

Achieve high-precision pose and expression generation via AudioPose Syncer and AudioEmotion Syncer

Support dynamic, clear lip-shape generation that keeps the lips synchronized with the audio

Generate full-body animations with rich expression and pose variations

Extract identity information from monocular images or noise to create personalized animations

How to Use

1. Prepare input data: Provide a character image (to extract identity information) and an audio file (to drive the animation).

2. Preprocess: Extract 3DMM (3D Morphable Model) parameters to serve as references for Audio2Pose and Audio2Emotion, or use noise instead.

3. Pose and expression generation: Use AudioPose Syncer and AudioEmotion Syncer to generate upper body poses and expressions that synchronize with the audio.

4. Animation rendering: Use the High-Synchronization Human Renderer to integrate the generated poses and expressions into a complete animation.

5. Output results: The generated animation can be directly used for video production, live streaming, or other applications.
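
The five steps above can be read as a simple data flow, sketched below in Python. This is a schematic illustration only, not SyncAnimation's actual interface: every name (`Reference`, `FrameControl`, `preprocess`, `generate_controls`, `render`, `run_pipeline`) is hypothetical, the 16 kHz sample rate and 25 fps frame rate are assumed values, and the per-frame audio energy is a toy stand-in for what AudioPose Syncer and AudioEmotion Syncer would produce.

```python
import math
import random
from dataclasses import dataclass
from typing import List, Optional

# All names here are hypothetical placeholders, not SyncAnimation's API.


@dataclass
class Reference:
    """Step 2 output: reference coefficients (3DMM-style) or noise."""
    coeffs: List[float]
    from_noise: bool


@dataclass
class FrameControl:
    """Step 3 output: pose and expression values for one video frame."""
    pose: List[float]
    expression: List[float]


def preprocess(image_coeffs: Optional[List[float]],
               dim: int = 8, seed: int = 0) -> Reference:
    """Use coefficients extracted from the image if given, else noise."""
    if image_coeffs is not None:
        return Reference(list(image_coeffs), from_noise=False)
    rng = random.Random(seed)
    return Reference([rng.gauss(0.0, 1.0) for _ in range(dim)], from_noise=True)


def generate_controls(audio: List[float], sample_rate: int, fps: int,
                      ref: Reference) -> List[FrameControl]:
    """Toy stand-in for step 3: one control per frame, driven by audio energy.

    Assumes sample_rate >= fps so each frame covers at least one sample.
    """
    hop = sample_rate // fps  # audio samples per video frame
    n_frames = math.ceil(len(audio) / hop)
    controls = []
    for i in range(n_frames):
        window = audio[i * hop:(i + 1) * hop]
        energy = sum(x * x for x in window) / len(window)
        controls.append(FrameControl(pose=list(ref.coeffs), expression=[energy]))
    return controls


def render(controls: List[FrameControl]) -> List[dict]:
    """Toy stand-in for step 4: emit frame records instead of pixels."""
    return [{"index": i, "pose": c.pose, "expression": c.expression}
            for i, c in enumerate(controls)]


def run_pipeline(image_coeffs: Optional[List[float]], audio: List[float],
                 sample_rate: int = 16000, fps: int = 25) -> List[dict]:
    """Steps 1 to 5: inputs, preprocessing, control generation, rendering."""
    ref = preprocess(image_coeffs)
    controls = generate_controls(audio, sample_rate, fps, ref)
    return render(controls)


if __name__ == "__main__":
    one_second_of_silence = [0.0] * 16000
    frames = run_pipeline(None, one_second_of_silence)
    print(len(frames))  # 25
```

The point of the sketch is the ordering and the per-frame alignment: each video frame is tied to a fixed window of audio samples, which is what keeps the generated motion in step with the sound. Passing `None` for `image_coeffs` exercises the noise fallback mentioned in step 2.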
