VideoLLaMA3

Overview

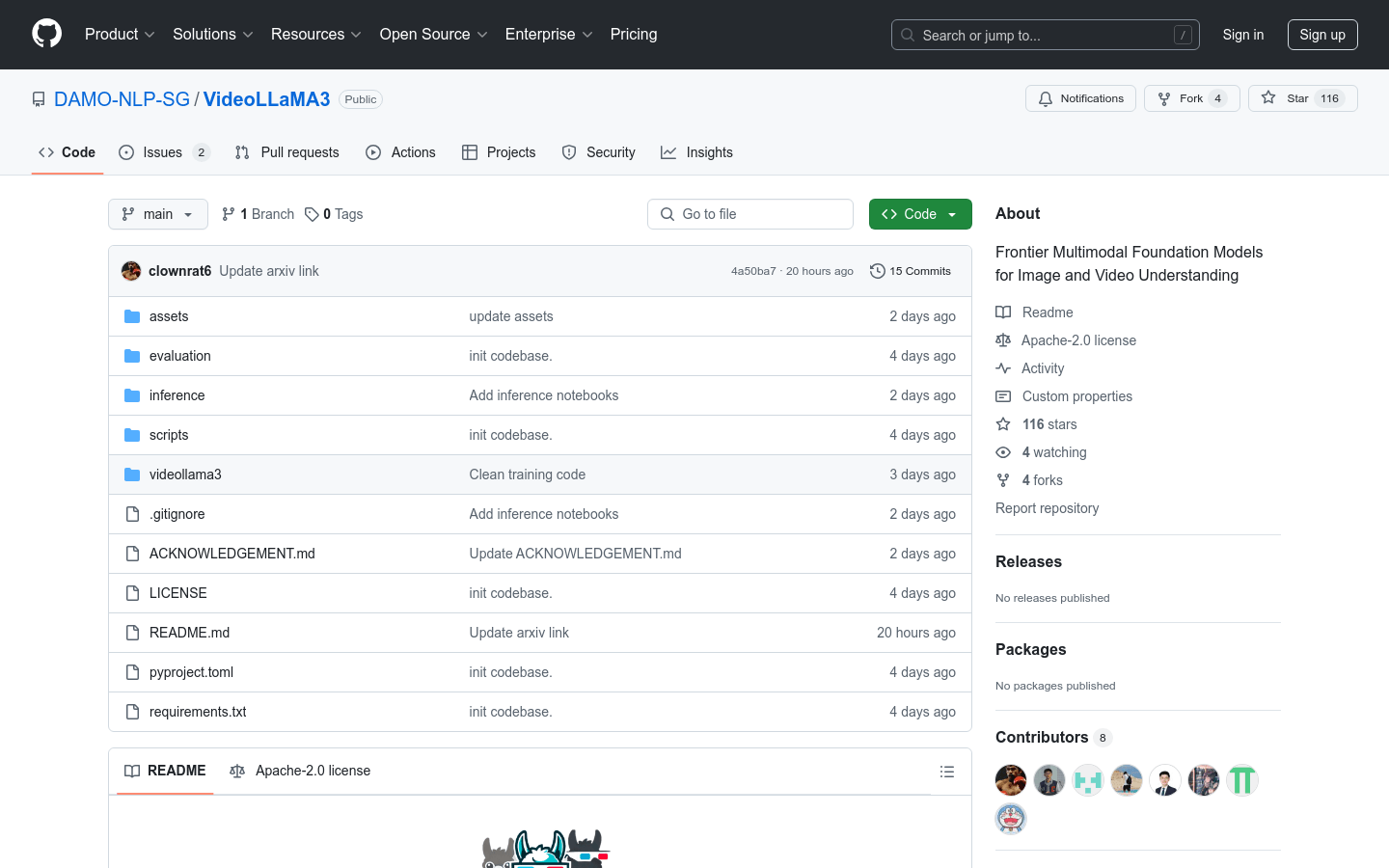

VideoLLaMA3, developed by the DAMO-NLP-SG team, is a multimodal foundation model for image and video understanding, presented by its authors as state-of-the-art. It is built on the Qwen2.5 language model and pairs it with a vision encoder (such as SigLIP) to handle tasks that combine visual and language input. Its stated strengths are efficient spatiotemporal modeling, strong multimodal fusion, and training on large-scale datasets. The model suits applications that need detailed video understanding, such as video content analysis and visual question answering, and has potential for both research and commercial use.

Target Users

The model is aimed at researchers, developers, and enterprises working on video content analysis, visual question answering, or other multimodal applications. Its multimodal understanding lets these users handle complex visual and language tasks with a single model.

Use Cases

In video content analysis, users can upload videos and receive detailed natural language descriptions to quickly comprehend video content.

For visual question answering tasks, users can input questions and obtain accurate answers based on video or image context.

In multimodal applications, users can combine video and text data for content generation or classification tasks to improve performance and accuracy.

Features

Accepts video and image input and generates natural language descriptions.

Offers various pretrained models, including versions with 2B and 7B parameters.

Uses optimized spatiotemporal modeling to handle long video sequences.

Supports multilingual generation, suitable for cross-language video understanding tasks.

Provides complete inference code and online demos for users to quickly get started.

Supports local deployment and cloud inference, adaptable to diverse usage scenarios.

Delivers detailed performance evaluations and benchmark results to help users select the appropriate model version.
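As a rough aid for choosing between the 2B and 7B versions, the memory needed just to hold the weights can be estimated from the parameter count. The sketch below is a back-of-the-envelope calculation, not a published figure: it assumes nominal counts of 2e9 and 7e9 parameters and 2 bytes per parameter (bf16 or fp16), and the helper name `estimate_weight_memory_gib` is hypothetical. Activations, the key-value cache, and video frames are not included, so real usage will be higher.

```python
def estimate_weight_memory_gib(num_params: float, bytes_per_param: int = 2) -> float:
    """Return the approximate memory (GiB) needed to store the weights alone."""
    return num_params * bytes_per_param / 1024**3


# Nominal parameter counts implied by the "2B" and "7B" version names (assumed).
NOMINAL_PARAMS = {"2B": 2e9, "7B": 7e9}

for name, params in NOMINAL_PARAMS.items():
    gib = estimate_weight_memory_gib(params)
    print(f"{name}: about {gib:.1f} GiB of weights at 2 bytes per parameter")
```

Passing `bytes_per_param=4` gives the corresponding fp32 estimate.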

How to Use

1. Install the necessary dependencies, such as PyTorch and transformers.

2. Clone the VideoLLaMA3 GitHub repository and install project dependencies.

3. Download the pretrained model weights and choose the appropriate model version (e.g., 2B or 7B).

4. Test the model with the provided inference code or the online demo, using video or image input.

5. Adjust model parameters or fine-tune if necessary to fit specific application scenarios.

6. Deploy the model locally or in the cloud for practical applications.
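The steps above can be sketched in Python using the Hugging Face `transformers` loading path. This is a minimal sketch under several assumptions, not the project's official inference code: the repository ids in `MODEL_IDS`, the message schema in `build_conversation`, and the `processor(conversation=...)` call shape are assumptions that should be checked against the project's README, and the helper names `build_conversation` and `answer` are hypothetical.

```python
from typing import Any, Dict, List, Optional

# Assumed Hugging Face repository ids; confirm them in the project README.
MODEL_IDS = {
    "2B": "DAMO-NLP-SG/VideoLLaMA3-2B",
    "7B": "DAMO-NLP-SG/VideoLLaMA3-7B",
}


def build_conversation(question: str,
                       video_path: Optional[str] = None,
                       image_path: Optional[str] = None) -> List[Dict[str, Any]]:
    """Build a chat-style request: one optional visual input plus a question.

    The message schema is an assumption; adapt the keys to whatever the
    checkpoint's processor expects.
    """
    if video_path and image_path:
        raise ValueError("pass either video_path or image_path, not both")
    content: List[Dict[str, Any]] = []
    if video_path:
        content.append({"type": "video", "video": {"video_path": video_path}})
    elif image_path:
        content.append({"type": "image", "image": {"image_path": image_path}})
    content.append({"type": "text", "text": question})
    return [{"role": "user", "content": content}]


def answer(question: str, video_path: str, size: str = "2B",
           max_new_tokens: int = 128) -> str:
    """Load the chosen checkpoint and generate an answer (downloads weights)."""
    # Imported lazily so build_conversation works without heavy dependencies.
    import torch
    from transformers import AutoModelForCausalLM, AutoProcessor

    model_id = MODEL_IDS[size]
    # trust_remote_code executes model code shipped with the checkpoint.
    model = AutoModelForCausalLM.from_pretrained(
        model_id, trust_remote_code=True, torch_dtype=torch.bfloat16)
    processor = AutoProcessor.from_pretrained(model_id, trust_remote_code=True)

    # Assumed call shape: the processor accepts the conversation directly.
    inputs = processor(conversation=build_conversation(question, video_path),
                       return_tensors="pt")
    # Move tensors to the model's device and match its bfloat16 weights.
    inputs = {k: v.to(model.device) if isinstance(v, torch.Tensor) else v
              for k, v in inputs.items()}
    if "pixel_values" in inputs:
        inputs["pixel_values"] = inputs["pixel_values"].to(torch.bfloat16)

    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return processor.batch_decode(output_ids, skip_special_tokens=True)[0].strip()
```

A call such as `answer("What is happening in this clip?", "clip.mp4", size="7B")` would cover steps 3 and 4; `build_conversation` can be tried on its own without downloading any weights.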
