ViTPose

Overview

ViTPose is a family of human pose estimation models built on the Transformer architecture. It uses the Transformer's feature extraction capabilities to provide a simple yet effective baseline for human pose estimation. The models are reported to be accurate and efficient across a range of pose estimation datasets. They are maintained and updated by the community at the University of Sydney and come in several sizes to suit different application needs. The ViTPose models are open-sourced on the Hugging Face platform, where users can download and deploy them for human pose estimation research and application development.

Target Users

ViTPose is aimed at researchers, developers, and businesses working on human pose estimation research, application development, and product integration. Researchers can use it as a strong baseline for algorithm improvement and innovation. Developers can deploy it directly to add human pose detection to applications in areas such as motion analysis, virtual reality, and intelligent surveillance. Businesses can integrate it into their products and services to add pose-aware features.

Use Cases

Sports analysis: detect athletes' poses in real time and give coaches data for technical analysis.

Virtual reality games: drive interaction from players' poses to make the experience more immersive.

Smart surveillance: detect abnormal poses in crowds to support public safety.

Features

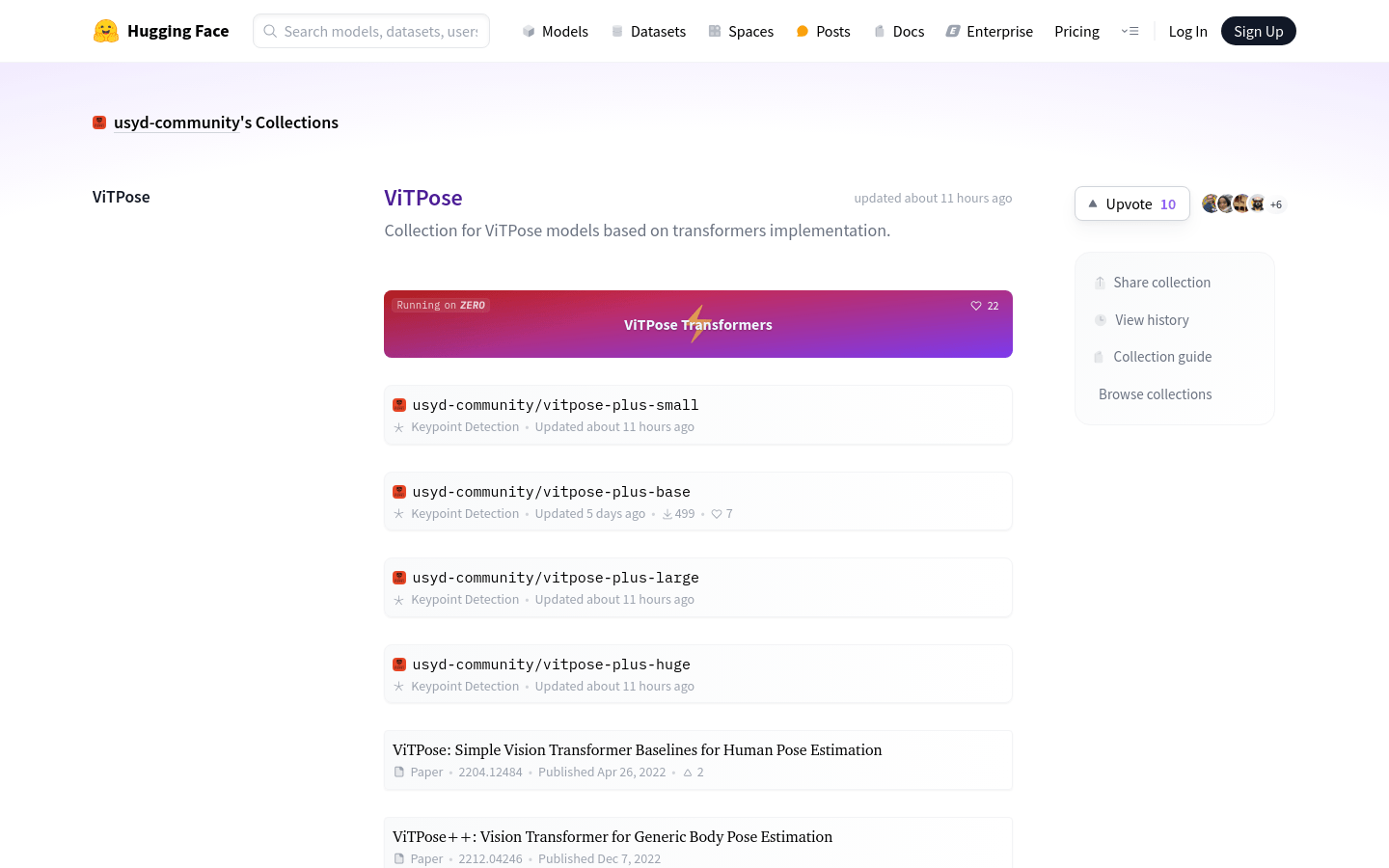

Offers ViTPose models in several sizes (small, base, large, and huge) to match different computational budgets and accuracy requirements.

Runs on Hugging Face Spaces, so users can try the model online.

Builds on the Transformer architecture, which captures long-range dependencies in images and improves pose estimation accuracy.

Provides detailed documentation and usage guides to help users get started and deploy the model quickly.

Is actively maintained by the community, which continues to update and optimize the model, fix bugs, and improve performance.

How to Use

1. Visit the Hugging Face website and search for the ViTPose model collection.

2. Choose the appropriate version of the ViTPose model based on your needs and computational resources.

3. Download the model weight files and corresponding configuration files.

4. Prepare the image data for detection, ensuring the format and dimensions meet the model's input requirements.

5. Use the provided code examples or APIs to load the model and run pose estimation on the images.

6. Parse the model's output to obtain the coordinates of the human keypoints.

7. Process and analyze the keypoint coordinates further according to the application scenario, such as pose recognition or action tracking.
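The steps above can be sketched in Python roughly as follows. This is a minimal illustration, not an official example. It assumes the Hugging Face `transformers` library (a version that includes `VitPoseForPoseEstimation`), `torch`, `numpy`, and `Pillow` are installed, and it uses the `usyd-community/vitpose-base-simple` checkpoint as an assumed default. The function names `estimate_poses` and `joint_angle` are hypothetical helpers introduced here. ViTPose works on cropped person regions, so the sketch assumes a single box covering the whole image; in practice a separate person detector would usually supply the boxes.

```python
import math


def estimate_poses(image_path, checkpoint="usyd-community/vitpose-base-simple"):
    """Return one {keypoint_name: (x, y, score)} dict per person box.

    Hypothetical helper covering steps 3-6; the checkpoint name is an assumption.
    """
    # Heavy dependencies are imported lazily so joint_angle works without them.
    import numpy as np
    import torch
    from PIL import Image
    from transformers import AutoProcessor, VitPoseForPoseEstimation

    image = Image.open(image_path).convert("RGB")
    processor = AutoProcessor.from_pretrained(checkpoint)
    model = VitPoseForPoseEstimation.from_pretrained(checkpoint)

    # Person boxes in COCO (x, y, width, height) format.
    # Assumption: one box spanning the full image instead of detector output.
    boxes = np.array([[0, 0, image.width, image.height]], dtype=np.float32)

    inputs = processor(image, boxes=[boxes], return_tensors="pt")
    with torch.no_grad():
        outputs = model(**inputs)

    # Map the model output back to image-space keypoints for the first image.
    results = processor.post_process_pose_estimation(outputs, boxes=[boxes])[0]

    people = []
    for person in results:
        named = {}
        for xy, label, score in zip(
            person["keypoints"], person["labels"], person["scores"]
        ):
            name = model.config.id2label[label.item()]
            named[name] = (xy[0].item(), xy[1].item(), score.item())
        people.append(named)
    return people


def joint_angle(a, b, c):
    """Angle in degrees at point b between segments b->a and b->c (step 7)."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    n1, n2 = math.hypot(*v1), math.hypot(*v2)
    if n1 == 0 or n2 == 0:
        raise ValueError("degenerate joint: two keypoints coincide")
    cos = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    # Clamp to guard against floating-point drift outside [-1, 1].
    return math.degrees(math.acos(max(-1.0, min(1.0, cos))))
```

A typical follow-up would be to call `estimate_poses("photo.jpg")`, look up three keypoints of one limb in a returned dict (the available names come from the checkpoint's `id2label` mapping), and pass their `(x, y)` values to `joint_angle` to get, for example, an elbow or knee angle. Low-score keypoints are usually worth filtering out first; the threshold is application-specific.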
