Memory

Overview:

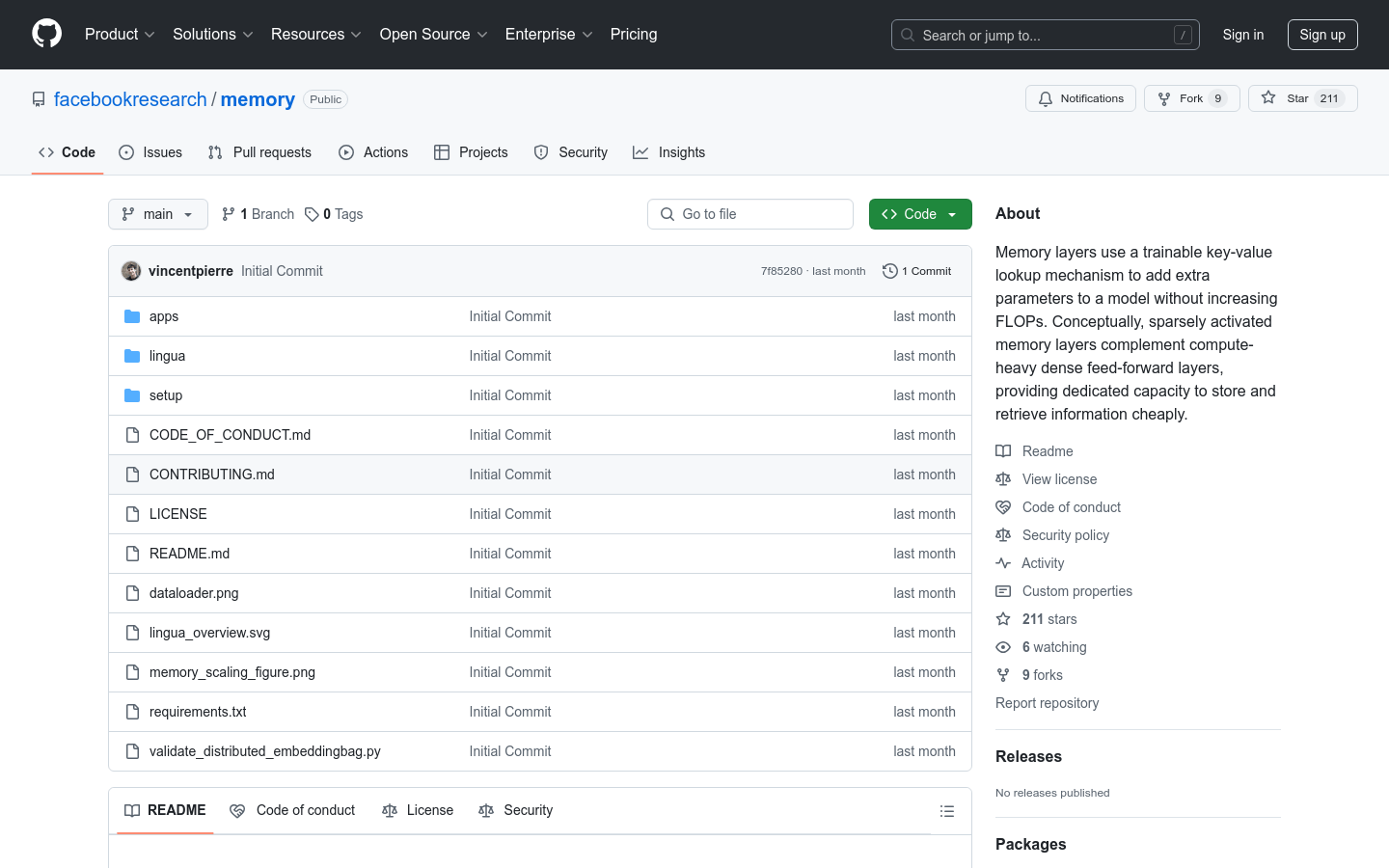

Memory Layers at Scale is an implementation of memory layers, which add extra parameters to a model through a trainable key-value lookup mechanism without increasing floating-point operations (FLOPs). This matters for large-scale language models because it improves the model's ability to store and retrieve information while maintaining computational efficiency. The main advantages are expanded model capacity, lower computational resource consumption, and improved flexibility and scalability. Developed by the Meta Lingua team, the project suits workloads that involve large datasets and complex models.
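To make the key-value lookup idea concrete, here is a minimal sketch in plain Python. It is not the project's code: the function name `memory_lookup`, the toy sizes, and the random initialization are all assumptions chosen for illustration. The query is scored against every key, only the top `k` slots are kept, and the output is a softmax-weighted sum of those `k` value vectors.

```python
import math
import random


def memory_lookup(query, keys, values, k=2):
    """Return the softmax-weighted sum of the k values whose keys best match the query.

    Hypothetical helper for illustration only, not the project's API.
    """
    # Score every key against the query with a dot product.
    scores = [sum(q * x for q, x in zip(query, key)) for key in keys]
    # Keep only the indices of the k highest-scoring keys (sparse selection).
    top = sorted(range(len(keys)), key=lambda i: scores[i], reverse=True)[:k]
    # Softmax over the selected scores only (shifted by the max for stability).
    peak = max(scores[i] for i in top)
    exps = [math.exp(scores[i] - peak) for i in top]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Weighted sum of the k selected value vectors.
    dim = len(values[0])
    output = [sum(w * values[i][d] for w, i in zip(weights, top)) for d in range(dim)]
    return output, top


# Assumed toy memory: 8 slots with 4-dimensional keys and values.
rng = random.Random(0)
keys = [[rng.gauss(0, 1) for _ in range(4)] for _ in range(8)]
values = [[rng.gauss(0, 1) for _ in range(4)] for _ in range(8)]
query = [rng.gauss(0, 1) for _ in range(4)]

output, selected = memory_lookup(query, keys, values, k=2)
```

Because only `k` value vectors contribute to each output, adding more slots grows the parameter count while the cost of the weighted sum stays fixed. This naive version still scores every key, so it is a simplification of the lookup, not a faithful model of its cost; implementations at scale typically factorize the keys (product keys) so that the scoring step also avoids a full scan.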

Target Users:

This project is designed for developers and researchers who need to expand model capacity without increasing computational load, particularly when working with large-scale language models and complex data processing.
