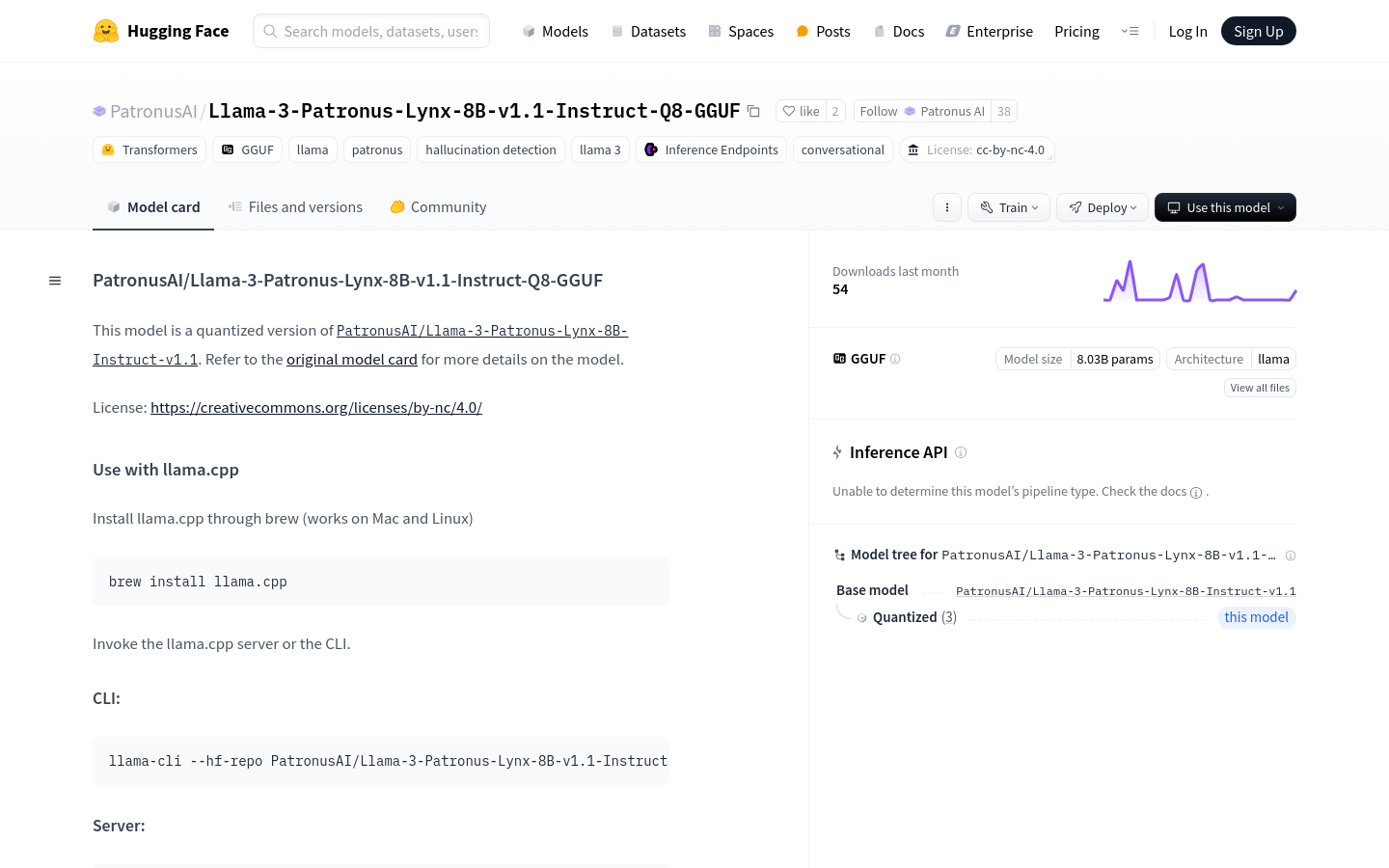

Llama 3 Patronus Lynx 8B V1.1 Instruct Q8 GGUF

Overview

PatronusAI/Llama-3-Patronus-Lynx-8B-v1.1-Instruct-Q8-GGUF is an 8-bit quantized (Q8) build of Patronus Lynx 8B v1.1 Instruct, a Llama 3-based model designed for dialogue and hallucination detection. It is distributed in the GGUF format and has about 8 billion parameters (the "8B" in its name), which makes it a large language model. Quantization reduces the model's memory footprint, so it can provide dialogue generation and hallucination detection while running efficiently. The model is built on the Transformers library and GGUF technology, and suits applications that need conversational systems and content generation.
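As a rough illustration of what the quantization buys, the sketch below estimates weight storage for a model of about 8 billion parameters. It assumes that "Q8" refers to llama.cpp's Q8_0 layout, which stores each block of 32 weights as 32 one-byte integers plus one 2-byte scale. The function names are illustrative, and a real GGUF file will differ somewhat because of metadata and tensors kept at other precisions.

```python
def q8_0_size_gb(n_params: float) -> float:
    """Approximate weight storage in GB (10^9 bytes) under the Q8_0 layout."""
    weights_per_block = 32
    bytes_per_block = 32 * 1 + 2  # 32 int8 values plus one fp16 scale
    return n_params / weights_per_block * bytes_per_block / 1e9


def fp16_size_gb(n_params: float) -> float:
    """Approximate weight storage in GB if every weight were kept as fp16."""
    return n_params * 2 / 1e9


# Assumption: roughly 8 billion parameters, as the "8B" in the model name suggests.
n_params = 8.0e9
print(f"Q8_0: ~{q8_0_size_gb(n_params):.1f} GB")
print(f"fp16: ~{fp16_size_gb(n_params):.1f} GB")
```

Under these assumptions the 8-bit build needs a little over half the memory of a 16-bit copy, at 8.5 bits per weight instead of 16.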

Target Users

The target audience includes developers, data scientists, and enterprises building conversational systems and content generation platforms. The model suits them because it is a capable, quantized model that handles complex natural language processing tasks while keeping resource use low.

Use Cases

Example 1: Online customer service chatbot utilizing this model to generate natural language responses, enhancing customer satisfaction.

Example 2: News content review system using the model's hallucination detection capabilities to flag and filter out untrue or unsupported claims.

Example 3: Educational platform employing the model to generate personalized learning materials and dialogue exercises.

Features

- Quantized version: The model has been quantized to 8 bits (Q8) to improve operational efficiency.

- Dialogue generation: Generates natural language dialogue, suitable for applications such as chatbots.

- Hallucination detection: Detects and helps filter out untrue information.

- Supports GGUF format: Allows the model to be used across a wide range of GGUF-compatible tools and platforms, such as llama.cpp.

- About 8 billion parameters: Large enough to handle complex language tasks.

- Based on Transformers: Builds on the Transformers library.

- Supports Inference Endpoints: Allows direct model inference via API.

How to Use

1. Install llama.cpp: Use brew to install llama.cpp; this works on Mac and Linux systems.

2. Start the llama.cpp server or CLI: Launch llama-server or llama-cli with the provided command-line tools.

3. Run inference: Use llama-cli or llama-server to perform model inference.

Alternatively, build llama.cpp from source:

4. Clone llama.cpp: Clone the llama.cpp project from GitHub.

5. Build llama.cpp: Navigate to the project directory and build the project with the LLAMA_CURL=1 flag.

6. Execute the main program: Run the built llama-cli or llama-server for model inference.
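The steps above can be sketched as a small Python helper that assembles the commands without running them. This is a minimal sketch under stated assumptions: `GGUF_FILE` is a hypothetical placeholder (the real file name must be taken from the model repository), the GitHub URL is the commonly used llama.cpp location and is not given in the text above, and the helper names are illustrative. The `--hf-repo`, `--hf-file`, `-p`, and `-c` options are llama.cpp command-line flags.

```python
import shlex

REPO = "PatronusAI/Llama-3-Patronus-Lynx-8B-v1.1-Instruct-Q8-GGUF"
GGUF_FILE = "model.gguf"  # hypothetical placeholder; use the actual file name from the repo

# Steps 1-3: install through Homebrew.
BREW_SETUP = ["brew install llama.cpp"]

# Steps 4-6: build from source. Newer llama.cpp releases may require a
# CMake-based build instead of plain make.
SOURCE_SETUP = [
    "git clone https://github.com/ggerganov/llama.cpp",  # assumed repository URL
    "cd llama.cpp && LLAMA_CURL=1 make",
]


def llama_cli_command(prompt, repo=REPO, gguf_file=GGUF_FILE):
    """Build the argument list for a one-off llama-cli inference run."""
    return ["llama-cli", "--hf-repo", repo, "--hf-file", gguf_file, "-p", prompt]


def llama_server_command(ctx_size=2048, repo=REPO, gguf_file=GGUF_FILE):
    """Build the argument list for starting llama-server with a given context size."""
    return ["llama-server", "--hf-repo", repo, "--hf-file", gguf_file, "-c", str(ctx_size)]


# Print the commands so they can be reviewed before being run in a shell.
for line in BREW_SETUP + SOURCE_SETUP:
    print(line)
print(shlex.join(llama_cli_command("Hello")))
print(shlex.join(llama_server_command()))
```

Building argument lists rather than shell strings keeps prompts with spaces or quotes intact if the commands are later passed to `subprocess.run`.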
