DeepSeek-V3

Overview

DeepSeek-V3 is a powerful Mixture-of-Experts (MoE) language model with 671 billion total parameters, of which 37 billion are activated for each token. It adopts the Multi-head Latent Attention (MLA) and DeepSeekMoE architectures, both thoroughly validated in DeepSeek-V2. DeepSeek-V3 also introduces an auxiliary-loss-free strategy for load balancing and sets a multi-token prediction training objective for stronger performance. It was pre-trained on 14.8 trillion high-quality tokens, followed by supervised fine-tuning and reinforcement learning stages to fully realize its capabilities. Comprehensive evaluations show that DeepSeek-V3 outperforms other open-source models and achieves performance comparable to leading closed-source models. Despite this performance, its full training run required only 2.788 million H800 GPU hours, and the training process was remarkably stable, with no irrecoverable loss spikes or rollbacks.
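The headline numbers above translate into two quick back-of-envelope figures: the share of parameters that each token actually uses, and a rough dollar cost for the training run. The sketch below assumes a rental price of $2 per H800 GPU hour (the figure the DeepSeek-V3 technical report uses for its own estimate); that price is an assumption, and the estimate covers only the final training run, not earlier research or ablation experiments.

```python
# Back-of-envelope figures derived from the numbers quoted in the overview.
TOTAL_PARAMS_B = 671      # total parameters, in billions
ACTIVE_PARAMS_B = 37      # parameters activated per token, in billions
GPU_HOURS = 2.788e6       # full training run, in H800 GPU hours

# Assumption: $2 per H800 GPU hour rental price; real costs vary.
PRICE_PER_GPU_HOUR_USD = 2.0

# Fraction of the model's weights that participate in processing one token.
active_fraction = ACTIVE_PARAMS_B / TOTAL_PARAMS_B
# Rough compute cost of the training run under the assumed rental price.
training_cost_usd = GPU_HOURS * PRICE_PER_GPU_HOUR_USD

print(f"active fraction per token: {active_fraction:.1%}")
print(f"estimated training cost: ${training_cost_usd / 1e6:.3f}M")
```

Only about one parameter in eighteen is used per token, which is why an MoE model of this size can be trained and served far more cheaply than a dense model with the same total parameter count.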

Target Users

The target audience for DeepSeek-V3 includes researchers, developers, and enterprises that need an efficient, cost-effective, high-performance language model for large-scale natural language processing tasks. Its strong performance and cost efficiency make it particularly suitable for workloads that involve large volumes of data or complex tasks, such as machine translation, text summarization, and question answering systems.

Use Cases

In the finance sector, DeepSeek-V3 can analyze large volumes of financial news and reports to extract key information.

In the medical field, the model can understand and analyze medical literature, aiding in drug development and case studies.

In education, DeepSeek-V3 can serve as a learning aid, helping students and researchers quickly find academic materials and work through complex problems.

Features

Employs Multi-head Latent Attention (MLA) and the DeepSeekMoE architecture for efficient inference and cost-effective training.

Uses an auxiliary-loss-free load-balancing strategy, minimizing the performance degradation that auxiliary losses cause when they are used to keep expert load balanced.

Implements a multi-token prediction (MTP) training objective, which improves model performance and can also speed up inference through speculative decoding.

Features an FP8 mixed-precision training framework to reduce training costs.

Uses an innovative method to distill reasoning capabilities from DeepSeek-R1 series models into DeepSeek-V3, improving its reasoning performance.

Available for download on HuggingFace: the 685B checkpoint contains 671B of main-model weights and 14B of multi-token prediction (MTP) module weights.

Supports FP8 and BF16 precision inference on NVIDIA and AMD GPUs.
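One way to see why the Multi-head Latent Attention (MLA) feature listed above makes inference cheaper is to compare per-token KV-cache sizes. Standard multi-head attention caches a full key and value vector for every head, while MLA caches one compressed key/value latent plus a small shared RoPE key. The dimensions below (128 heads, head size 128, latent size 512, RoPE key size 64, 61 layers) are assumptions taken from DeepSeek-V3's published model configuration, and the MHA baseline is a hypothetical model with the same head count and head size; the function names are illustrative only.

```python
# Per-token KV-cache size: standard multi-head attention (MHA) vs. MLA.
# Dimensions are assumptions taken from DeepSeek-V3's published config;
# the MHA baseline is hypothetical, using the same head count and head size.
N_HEADS = 128        # attention heads
HEAD_DIM = 128       # per-head key/value dimension for the MHA baseline
KV_LATENT_DIM = 512  # MLA compressed key/value latent dimension
ROPE_KEY_DIM = 64    # MLA decoupled RoPE key dimension, shared across heads
N_LAYERS = 61        # transformer layers
BYTES_PER_ELEM = 2   # assume BF16 cache entries

def mha_elems_per_token_per_layer() -> int:
    # MHA caches a full key vector and a full value vector for every head.
    return 2 * N_HEADS * HEAD_DIM

def mla_elems_per_token_per_layer() -> int:
    # MLA caches only the joint KV latent plus one shared RoPE key.
    return KV_LATENT_DIM + ROPE_KEY_DIM

mha = mha_elems_per_token_per_layer()
mla = mla_elems_per_token_per_layer()
print(f"per layer: MHA {mha} elements, MLA {mla} elements, {mha / mla:.1f}x smaller")

# Cache footprint per token summed over all layers, in KiB.
mha_kib = mha * N_LAYERS * BYTES_PER_ELEM / 1024
mla_kib = mla * N_LAYERS * BYTES_PER_ELEM / 1024
print(f"all layers: MHA {mha_kib:.0f} KiB/token, MLA {mla_kib:.1f} KiB/token")
```

A smaller cache per token means longer contexts and larger batches fit in the same GPU memory; the keys and values that attention needs are reconstructed from the latent through up-projections at compute time.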
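The auxiliary-loss-free load-balancing feature listed above can be sketched as follows. Instead of adding a balancing term to the loss, each expert gets a bias that is added to its affinity score only when choosing the top-k experts; after each training step the bias is lowered for overloaded experts and raised for underloaded ones by a step size gamma. This is a minimal pure-Python sketch of that idea, not DeepSeek's code: the function names `route` and `update_bias` are hypothetical, "overloaded" is assumed to mean above the mean load, and gamma is set unrealistically high so the effect shows after a single update.

```python
import math

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

def route(logits, bias, k):
    """Select the top-k experts for one token and return {expert: gate weight}.

    The per-expert bias only affects which experts are selected; the gate
    weights come from the unbiased sigmoid affinities, normalized over the
    selected experts.
    """
    affinity = [sigmoid(z) for z in logits]
    ranked = sorted(range(len(logits)),
                    key=lambda i: affinity[i] + bias[i], reverse=True)
    chosen = ranked[:k]
    total = sum(affinity[i] for i in chosen)
    return {i: affinity[i] / total for i in chosen}

def update_bias(bias, loads, gamma):
    """After a training step, nudge each expert's bias toward balanced load.

    Assumption: "overloaded" means above the mean load across experts.
    """
    mean_load = sum(loads) / len(loads)
    new_bias = []
    for b, load in zip(bias, loads):
        if load > mean_load:
            new_bias.append(b - gamma)  # overloaded: less likely to be picked
        elif load < mean_load:
            new_bias.append(b + gamma)  # underloaded: more likely to be picked
        else:
            new_bias.append(b)
    return new_bias

# One token whose affinities favor expert 0, with 3 experts and top-2 routing.
logits = [2.0, 1.0, 0.5]
before = route(logits, [0.0, 0.0, 0.0], k=2)
# Suppose expert 0 received far more tokens than the others in the last step.
bias = update_bias([0.0, 0.0, 0.0], loads=[10, 1, 1], gamma=0.5)
after = route(logits, bias, k=2)
print(before)
print(after)
```

Because the bias never enters the gate weights or the loss, balancing pressure changes only which experts are chosen, which is the property that avoids the quality cost of an auxiliary loss.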
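The multi-token prediction (MTP) objective listed above trains extra prediction modules to look further ahead than the usual next token: at depth k, position i is trained to predict the token k steps beyond the ordinary next-token target, and the MTP losses are averaged over depths and added to the main loss with a weight lambda. The sketch below shows only that target alignment and loss weighting under those assumptions; it does not model DeepSeek-V3's sequential MTP modules, and `mtp_targets` and `combined_loss` are hypothetical helper names.

```python
def mtp_targets(tokens, depth):
    """Return (position, target) training pairs for one prediction depth.

    depth 0 is the ordinary next-token objective (position i predicts
    tokens[i + 1]); depth k >= 1 is the k-th MTP module, where position i
    predicts tokens[i + 1 + k]. Positions with no target that far ahead
    are dropped.
    """
    return [(i, tokens[i + 1 + depth]) for i in range(len(tokens) - 1 - depth)]

def combined_loss(main_loss, mtp_losses, lam):
    """Main loss plus the MTP losses averaged over depths and weighted by lam."""
    if not mtp_losses:
        return main_loss
    return main_loss + lam * sum(mtp_losses) / len(mtp_losses)

tokens = ["The", "cat", "sat", "on", "the", "mat"]
for depth in range(2):  # main objective plus one MTP depth
    print(depth, mtp_targets(tokens, depth))
```

Each extra depth gives every position an additional training signal; at inference time the MTP modules can be dropped, or reused to draft tokens for speculative decoding.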
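The FP8 mixed-precision feature listed above relies on scaling values into the narrow FP8 range before rounding. DeepSeek-V3's technical report describes the E4M3 format with fine-grained scaling (small tiles of activations and blocks of weights, each with its own scale). The sketch below simulates that idea for a single tile in pure Python: it picks a scale so the tile's largest magnitude maps to 448 (the largest finite E4M3 value), rounds each element to 3 mantissa bits, and scales back. It is an illustrative simulation with hypothetical helper names, not DeepSeek's kernels or a real FP8 data type.

```python
import math

E4M3_MAX = 448.0  # largest finite value in the FP8 E4M3 format

def round_to_e4m3(x: float) -> float:
    """Round a float to the nearest E4M3 value (3 mantissa bits), saturating at +/-448."""
    if x == 0.0:
        return 0.0
    sign = -1.0 if x < 0 else 1.0
    a = min(abs(x), E4M3_MAX)
    exp = max(math.floor(math.log2(a)), -6)  # -6 is the smallest normal exponent
    step = 2.0 ** (exp - 3)                  # spacing of representable values here
    return sign * min(round(a / step) * step, E4M3_MAX)

def quantize_tile(values):
    """Scale a tile so its max magnitude maps to E4M3_MAX, then round each element."""
    amax = max(abs(v) for v in values)
    scale = amax / E4M3_MAX if amax > 0 else 1.0
    return [round_to_e4m3(v / scale) for v in values], scale

def dequantize_tile(q, scale):
    """Undo the per-tile scaling to recover approximate original values."""
    return [v * scale for v in q]

tile = [0.5, -1.25, 3.7, 0.01]
q, scale = quantize_tile(tile)
recon = dequantize_tile(q, scale)
print(q)
print(recon)
```

Giving each small tile its own scale keeps one outlier from crushing the precision of every other value in the tensor, which is the motivation for fine-grained rather than per-tensor scaling.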

How to Use

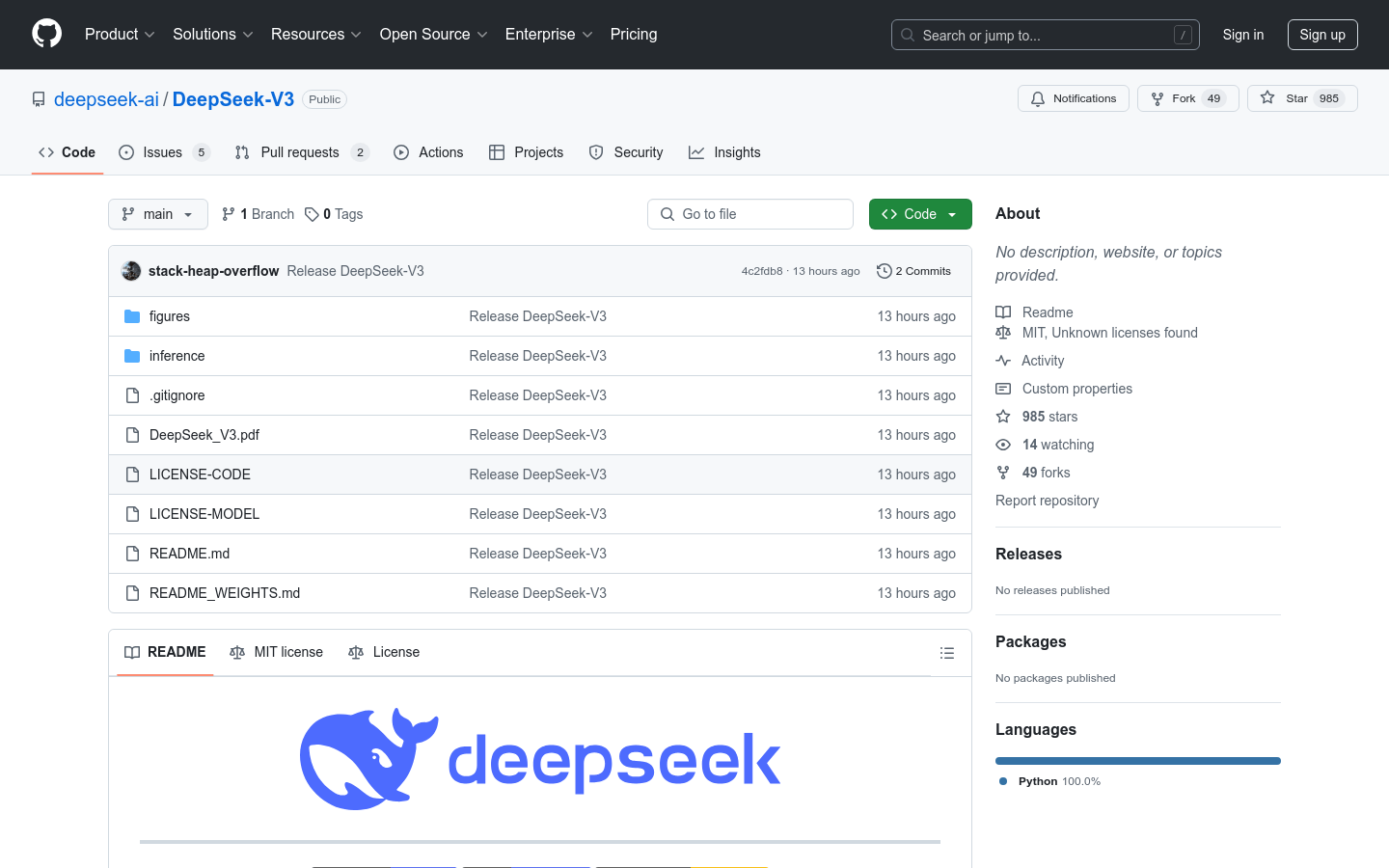

1. Clone the DeepSeek-V3 GitHub repository.

2. Navigate to the `inference` directory and install the dependencies listed in `requirements.txt`.

3. Download the model weights from HuggingFace and place them in a local folder that the inference scripts will read from.

4. If your setup does not support FP8 inference, use the provided script to convert the FP8 weights to BF16.

5. Run the inference script with the provided configuration file and your weights path, either to chat with DeepSeek-V3 interactively or to run batch inference.

6. Alternatively, interact with DeepSeek-V3 through DeepSeek’s official website or API platform.
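Before downloading the weights (step 3) or converting them to BF16 (step 4), it helps to estimate how much storage the checkpoint needs. The sketch below uses the parameter counts quoted in the Features section and assumes 1 byte per parameter for FP8 and 2 bytes for BF16; it counts raw weights only and ignores FP8 scaling factors, file-format overhead, the KV cache, and activations, so real requirements are higher. The helper name `weight_gb` is hypothetical.

```python
# Rough weight-storage estimate from the parameter counts quoted in Features.
MAIN_PARAMS = 671e9  # main model weights
MTP_PARAMS = 14e9    # multi-token prediction module weights
# Assumption: FP8 stores 1 byte per parameter, BF16 stores 2.
BYTES_PER_PARAM = {"fp8": 1, "bf16": 2}

def weight_gb(n_params: float, dtype: str) -> float:
    """Decimal gigabytes for the raw weights only (no scales, KV cache, or activations)."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9

total = MAIN_PARAMS + MTP_PARAMS
for dtype in ("fp8", "bf16"):
    print(f"{dtype}: {weight_gb(total, dtype):,.0f} GB")
```

Converting to BF16 roughly doubles the weight footprint, which is worth checking against available disk and GPU memory before running the conversion script.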
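For step 6, the DeepSeek API platform exposes an OpenAI-compatible chat-completions endpoint. The sketch below uses only the Python standard library to build, but not send, such a request. The endpoint `https://api.deepseek.com/chat/completions` and the model name `deepseek-chat` reflect DeepSeek's API documentation at the time of writing, when `deepseek-chat` served DeepSeek-V3; check the current documentation, since model mappings change. The environment variable name `DEEPSEEK_API_KEY` and the helper `build_chat_request` are hypothetical.

```python
import json
import os
import urllib.request

API_URL = "https://api.deepseek.com/chat/completions"  # OpenAI-compatible endpoint

def build_chat_request(prompt: str, api_key: str,
                       model: str = "deepseek-chat") -> urllib.request.Request:
    """Build (but do not send) a chat-completion request for the DeepSeek API."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )

# Hypothetical env var name; falls back to a placeholder so the request can be inspected.
req = build_chat_request(
    "Summarize this earnings report in three bullet points.",
    api_key=os.environ.get("DEEPSEEK_API_KEY", "sk-placeholder"),
)
# To actually send it (requires a valid key and network access):
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp)["choices"][0]["message"]["content"])
```

Because the API follows the OpenAI request format, existing OpenAI-compatible client libraries can usually be pointed at DeepSeek by changing only the base URL, key, and model name.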
