DRT-o1-14B

Overview

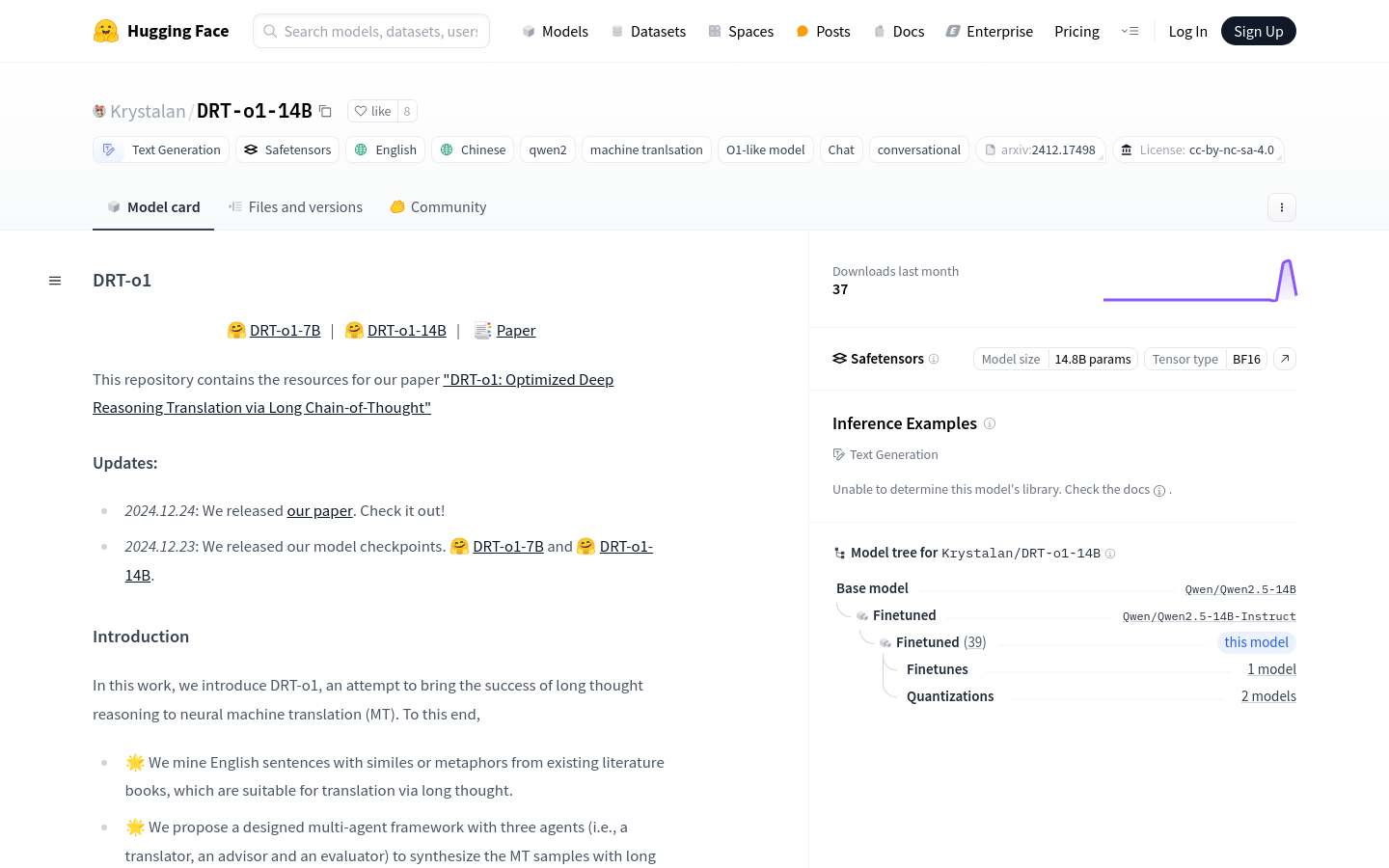

DRT-o1-14B is a neural machine translation model that uses long-chain reasoning to improve the depth and accuracy of its translations. Its training data was built by collecting English sentences containing metaphors or idioms and running them through a multi-agent framework (a translator, an advisor, and an evaluator) to synthesize long-thought machine translation samples. The model is trained on the Qwen2.5-14B-Instruct backbone, has 14.8 billion parameters, and uses BF16 tensors. It is aimed at translation tasks where a literal rendering falls short and the meaning has to be reasoned out, such as figurative or idiomatic text.
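The translator, advisor, and evaluator roles can be pictured as a refinement loop. The sketch below is only an illustration of that idea, not the authors' implementation: the function name `build_long_thought`, the three callables, the score threshold, and the round limit are all hypothetical, and in practice each agent would be backed by a language model call.

```python
from typing import Callable, List, Optional, Tuple

Step = Tuple[str, str, float]  # (translation, advice, score)


def build_long_thought(
    source: str,
    translator: Callable[[str, Optional[str], Optional[str]], str],
    advisor: Callable[[str, str], str],
    evaluator: Callable[[str, str], float],
    threshold: float = 8.0,   # hypothetical stopping score
    max_rounds: int = 5,      # hypothetical cap on refinement rounds
) -> List[Step]:
    """Collect the refinement steps for one source sentence.

    translator(source, previous, advice) returns a new translation,
    advisor(source, translation) returns suggestions as text,
    evaluator(source, translation) returns a numeric quality score.
    """
    steps: List[Step] = []
    # First draft: no earlier translation or advice to build on.
    translation = translator(source, None, None)
    for _ in range(max_rounds):
        advice = advisor(source, translation)
        score = evaluator(source, translation)
        steps.append((translation, advice, score))
        if score >= threshold:
            break  # good enough, stop refining
        # Otherwise revise the translation using the advisor's feedback.
        translation = translator(source, translation, advice)
    return steps
```

The recorded steps (draft, feedback, score, revised draft, and so on) are the kind of material from which a long-thought training sample could be assembled.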

Target Users

DRT-o1-14B is aimed at researchers, developers, and enterprises whose translation tasks call for deep understanding of and reasoning about the source text. Its in-depth output helps users grasp and convey the deeper meaning of the original.

Use Cases

Translate English sentences containing metaphors into Chinese to explore their deeper meanings.

Use DRT-o1-14B for understanding and translating complex metaphorical expressions in cross-cultural communication.

Use DRT-o1-14B to translate specialized academic literature where scholarly content must be conveyed accurately.

Features

Neural machine translation supporting long-chain reasoning

Extracting and translating English sentences with metaphors or idioms

Multi-agent framework design including translator, advisor, and evaluator

Trained on the Qwen2.5-14B-Instruct backbone

Large-scale model with 14.8 billion parameters

Supports BF16 tensor types for optimized computational efficiency

Suitable for complex translation tasks requiring deep understanding and reasoning
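To give a sense of what 14.8 billion BF16 parameters means for hardware, here is a rough back-of-the-envelope estimate of the memory needed for the weights alone. It assumes 2 bytes per parameter (BF16 is a 16-bit format) and ignores activations, the KV cache, and framework overhead, so real usage will be higher. The function name `weight_memory_gb` is illustrative.

```python
PARAMS = 14_800_000_000  # 14.8 billion parameters, from the model description
BYTES_PER_PARAM = 2      # BF16 stores each value in 16 bits


def weight_memory_gb(params: int = PARAMS, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Approximate size of the model weights in gigabytes (10^9 bytes)."""
    return params * bytes_per_param / 1e9


print(f"{weight_memory_gb():.1f} GB")  # prints "29.6 GB"
```

By the same arithmetic, loading the weights in a 32-bit format would roughly double that figure.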

How to Use

1. Visit the Hugging Face website and locate the DRT-o1-14B model page.

2. Import the necessary libraries and modules as per the code examples provided on the page.

3. Set the model name, then load the model and tokenizer with the Hugging Face Transformers library.

4. Prepare the English text for translation and construct the role messages for the system and user.

5. Use the tokenizer to convert the messages into the model input format.

6. Pass the input to the model and set the generation parameters, such as the maximum new token count.

7. Once the model generates the translation results, use the tokenizer to decode the generated tokens.

8. Output and review the translation results, evaluating the accuracy and depth of the translation.
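The steps above can be sketched in Python with the `transformers` library. This is a minimal sketch rather than the official example: the repository ID `Krystalan/DRT-o1-14B`, the system prompt, and the wording of the translation instruction are assumptions, so check them against the model page before use. It also assumes `torch`, `transformers`, and `accelerate` are installed and that enough GPU memory is available.

```python
# Assumed Hugging Face repository ID; confirm it on the model page.
MODEL_NAME = "Krystalan/DRT-o1-14B"

# Assumed system prompt; replace it with the one recommended on the model page.
SYSTEM_PROMPT = (
    "You are a philosopher skilled in deep thinking, accustomed to "
    "exploring complex problems with profound insight."
)


def build_messages(text: str, system_prompt: str = SYSTEM_PROMPT) -> list:
    """Step 4: build the system and user role messages."""
    instruction = "Please translate the following text from English to Chinese:\n"
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": instruction + text},
    ]


def translate(text: str, max_new_tokens: int = 2048) -> str:
    """Steps 2 to 7: load the model, generate, and decode the output."""
    # Imported here so build_messages can be used without the heavy dependencies.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    # Step 3: load tokenizer and model (device_map="auto" needs `accelerate`).
    tokenizer = AutoTokenizer.from_pretrained(MODEL_NAME)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_NAME, torch_dtype="auto", device_map="auto"
    )

    # Step 5: turn the chat messages into the model's input format.
    prompt = tokenizer.apply_chat_template(
        build_messages(text), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer([prompt], return_tensors="pt").to(model.device)

    # Step 6: generate, capping the number of newly generated tokens.
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Step 7: drop the prompt tokens and decode only the new ones.
    new_tokens = output_ids[0][inputs.input_ids.shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `translate("Time is a thief that steals our youth.")` returns the decoded output as a string for review (step 8). Because the model produces its reasoning before the final translation, a generous `max_new_tokens` value is advisable so the answer is not cut off.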
