Ultravox v0.4.1 Llama 3.1 70B

Overview

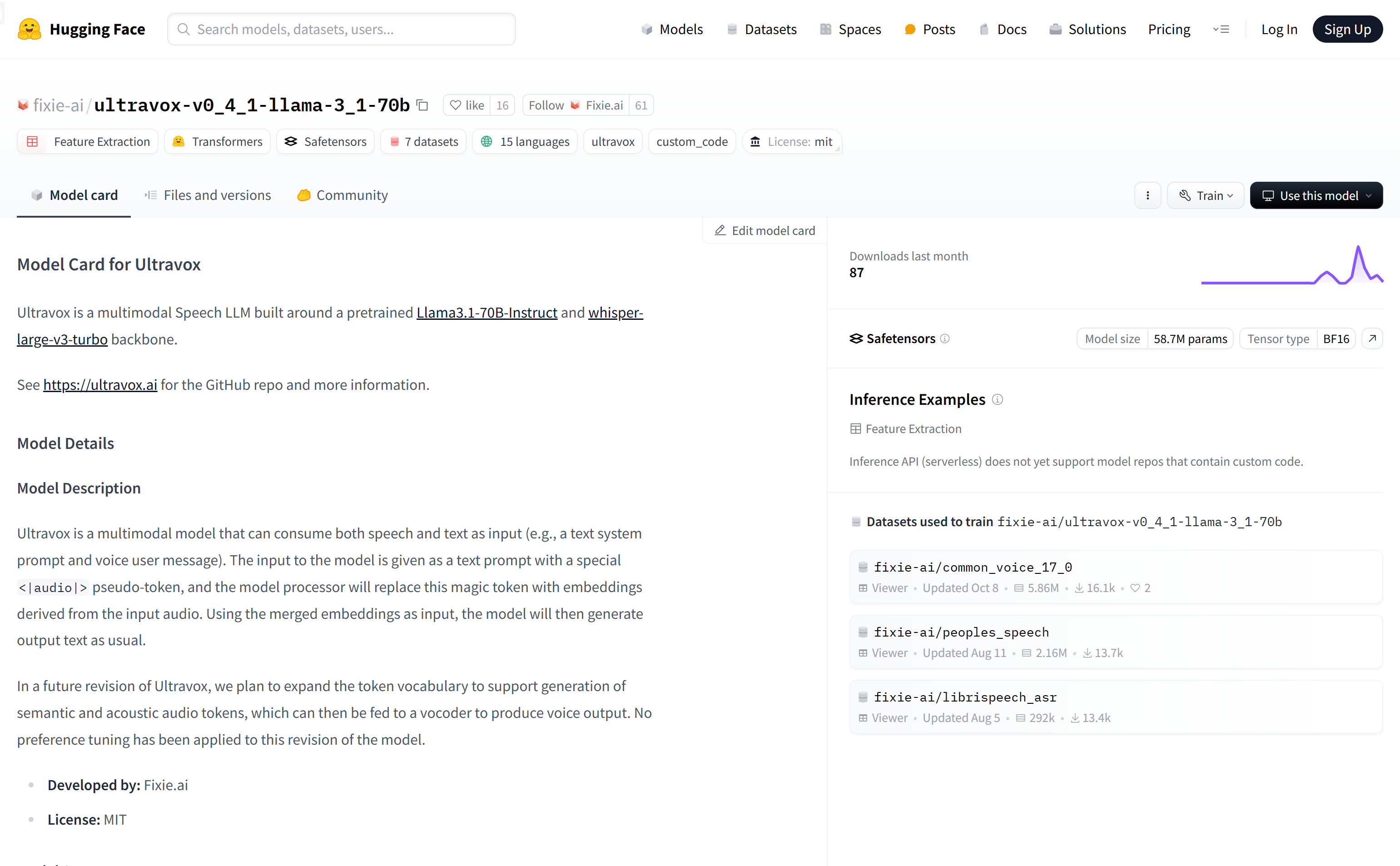

fixie-ai/ultravox-v0_4_1-llama-3_1-70b is a multimodal large language model built on the pre-trained Llama3.1-70B-Instruct and whisper-large-v3-turbo models. It accepts speech and text input and generates text output. The text prompt contains a special pseudo-token, <|audio|>; the model converts the input audio into embeddings, substitutes them for this token, and generates output text from the merged sequence. Ultravox is designed for applications that combine speech understanding with text generation, such as voice agents, speech-to-speech translation, and spoken-audio analysis. The model is released under the MIT license and developed by Fixie.ai.
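The pseudo-token mechanism can be illustrated with a minimal NumPy sketch. It assumes the audio has already been turned into a matrix of embeddings with the same width as the text embeddings, and that the prompt contains exactly one <|audio|> token. The token id AUDIO_TOKEN_ID and the function splice_audio_embeddings are hypothetical; this is a conceptual illustration, not Ultravox's actual code (in the real model the audio embeddings come from the frozen Whisper encoder followed by the trained adapter).

```python
import numpy as np

# Hypothetical id for the <|audio|> pseudo-token; the real tokenizer's id differs.
AUDIO_TOKEN_ID = 999


def splice_audio_embeddings(token_ids, embed_table, audio_embeds,
                            audio_token_id=AUDIO_TOKEN_ID):
    """Replace the single <|audio|> placeholder with a run of audio embeddings.

    token_ids:    1-D int array of prompt token ids
    embed_table:  (vocab_size, d) text embedding matrix
    audio_embeds: (n_audio_frames, d) embeddings derived from the audio
    Returns an array of shape (len(token_ids) - 1 + n_audio_frames, d).
    """
    positions = np.flatnonzero(token_ids == audio_token_id)
    if positions.size != 1:
        raise ValueError("expected exactly one <|audio|> token in the prompt")
    pos = positions[0]
    text_embeds = embed_table[token_ids]  # look up one embedding per text token
    # Text before the placeholder, then the audio frames, then the remaining text.
    return np.concatenate(
        [text_embeds[:pos], audio_embeds, text_embeds[pos + 1:]], axis=0
    )
```

The merged sequence is longer than the token list because one placeholder expands into as many positions as there are audio frames; the language model then processes it like any other embedding sequence.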

Target Users

The target audience is developers and enterprises that process speech and text data, including professionals working on speech recognition, speech translation, and spoken-audio analysis. Because Ultravox takes audio directly as input alongside text, it supports more natural, voice-driven interaction and can replace pipelines that would otherwise chain a separate speech recognizer to a text-only language model.

Use Cases

As a voice agent, process user voice queries and provide text replies.

Perform speech translation, converting speech in one language into output in another language; because the model generates text, producing spoken output requires pairing it with a text-to-speech system.

Analyze spoken audio, extract key information, and generate text summaries.

Features

- Speech and text input: Accepts speech and text in the same prompt, allowing more natural and flexible interaction than text-only input.

- Special pseudo-token <|audio|>: Marks the position in the text prompt where the audio input is inserted.

- Audio embedding: Converts the input audio into embeddings, which are merged with the text prompt to generate output text.

- Multimodal adapter training: Only the multimodal adapter is trained; the Whisper encoder and the Llama model stay frozen.

- Knowledge distillation loss: Ultravox is trained with a knowledge distillation loss that pushes its output logits to match those of the text-based Llama backbone.

- Multilingual: Supports 15 languages.

- Model parameters: The checkpoint lists 58.7M parameters stored as BF16 tensors. This count corresponds to the trained multimodal adapter, not the frozen Llama 3.1 70B and Whisper backbones, which are far larger; BF16 storage halves memory use compared with FP32.
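A knowledge distillation loss of this kind can be sketched in NumPy as the KL divergence from the teacher's (text-based Llama) token distribution to the student's (Ultravox) distribution, averaged over positions. The source does not give Ultravox's exact formulation, so several details here are assumptions: that the teacher sees the same content as text while the student sees audio, that the two logit arrays are already aligned position by position, the KL direction, and the hypothetical temperature parameter.

```python
import numpy as np


def log_softmax(logits, axis=-1):
    """Numerically stable log-softmax along the vocabulary axis."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))


def distillation_loss(student_logits, teacher_logits, temperature=1.0):
    """Mean KL(teacher || student) over sequence positions.

    student_logits, teacher_logits: (seq_len, vocab) arrays, assumed aligned
    so that row i of each array predicts the same target token.
    temperature: hypothetical softening factor (1.0 means no softening).
    """
    teacher_logp = log_softmax(teacher_logits / temperature)
    student_logp = log_softmax(student_logits / temperature)
    teacher_p = np.exp(teacher_logp)
    # KL per position: sum_v p_teacher(v) * (log p_teacher(v) - log p_student(v))
    kl = (teacher_p * (teacher_logp - student_logp)).sum(axis=-1)
    return float(kl.mean())
```

The loss is zero when the student reproduces the teacher's distribution exactly and grows as they diverge, so minimizing it (with only the adapter trainable) steers the audio path toward the behavior the frozen Llama already shows on text.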

How to Use

1. Install the required libraries: install transformers, peft, and librosa with pip.

2. Import libraries: Import the transformers, numpy, and librosa libraries into your code.

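3. Load the model: create a pipeline for 'fixie-ai/ultravox-v0_4_1-llama-3_1-70b' with transformers.pipeline, passing trust_remote_code=True because the model uses custom pipeline code from its repository.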

4. Audio processing: load the audio file with librosa, which returns the audio samples and the sample rate; resampling to 16 kHz matches the input rate the Whisper encoder expects.

5. Define the interaction: build a list of conversation turns, each a dictionary with 'role' and 'content' keys (for example, a single system message).

6. Call the model: pass the pipeline a dictionary containing the audio data, the list of turns, and the sample rate, and set max_new_tokens to limit the length of the generated text.

7. Get results: The model will generate text output, which can be used for further processing or displayed directly to the user.
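The steps above can be sketched in Python as follows. The helper names (build_inputs, load_pipeline, reply_to_audio), the example system prompt, and the max_new_tokens value of 30 are illustrative assumptions; the input dictionary keys ('audio', 'turns', 'sampling_rate') follow the steps described here. The format of the returned result is not specified in this description, so the sketch returns it unchanged.

```python
import numpy as np

MODEL_ID = "fixie-ai/ultravox-v0_4_1-llama-3_1-70b"


def build_inputs(audio, sampling_rate, system_prompt):
    """Package audio samples and conversation turns into the pipeline's input dict."""
    turns = [{"role": "system", "content": system_prompt}]
    return {
        "audio": np.asarray(audio, dtype=np.float32),
        "turns": turns,
        "sampling_rate": sampling_rate,
    }


def load_pipeline():
    # Imported lazily so build_inputs works without transformers installed.
    import transformers

    # trust_remote_code=True: the model repo provides its own pipeline code.
    return transformers.pipeline(model=MODEL_ID, trust_remote_code=True)


def reply_to_audio(pipe, path, system_prompt, max_new_tokens=30):
    import librosa

    # Resample to 16 kHz, the rate the Whisper encoder expects.
    audio, sr = librosa.load(path, sr=16000)
    return pipe(build_inputs(audio, sr, system_prompt), max_new_tokens=max_new_tokens)
```

Loading is kept separate from inference so the model is created once: call pipe = load_pipeline(), then reply_to_audio(pipe, "question.wav", "You are a friendly and helpful assistant.") for each audio file (the file name is hypothetical). With a 70B-parameter backbone, loading the pipeline requires substantial GPU memory.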
