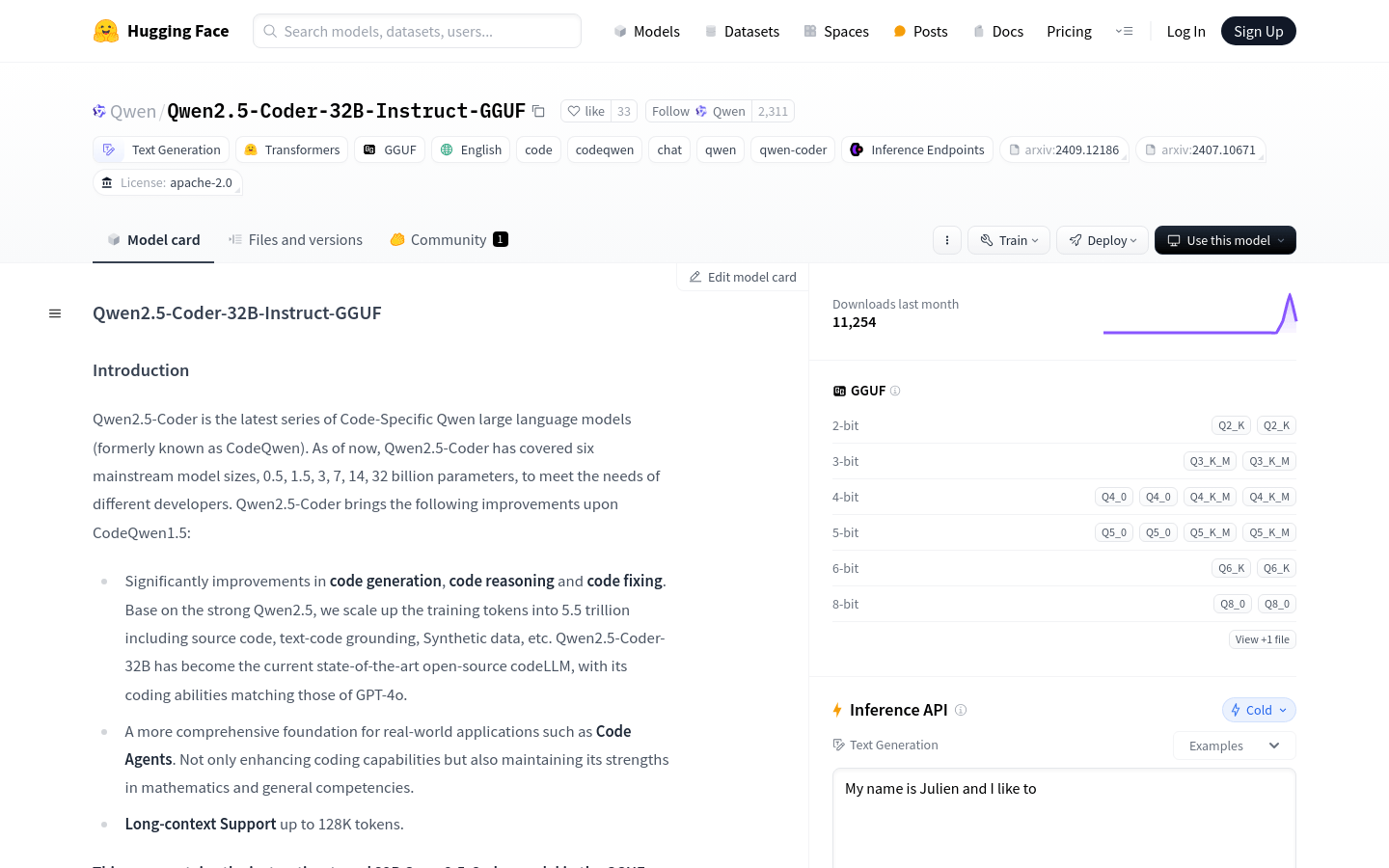

Qwen2.5 Coder 32B Instruct GGUF

Overview:

Qwen2.5-Coder is a model series designed specifically for code generation, with significantly improved capabilities in this area. It comes in a variety of parameter sizes, offers quantized versions, and is free to use, helping developers work more efficiently and write higher-quality code.

Target Users:

The target audience is developers and programmers who want to improve development efficiency and code quality with help in code generation, comprehension, and repair.

Use Cases

Automatically generate missing code segments for developers

Analyze code logic during code review

Assist students in understanding code structure

Features

Powerful code generation capabilities

Enhanced code reasoning abilities

Assistance in code repair

Support for long contexts

Multiple parameter sizes available

Based on advanced architecture

Offers quantized versions

How to Use

1. Install huggingface_hub and llama.cpp.

2. Use huggingface-cli to download the GGUF file.

3. If the file is split, merge it using llama-gguf-split.

4. Start the model using llama-cli.

5. Interact with the model to ask coding questions.

6. Evaluate the results and make adjustments.
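
Steps 2 to 4 can be sketched as a small Python helper that assembles the command lines. This is a minimal sketch, not an official recipe: the repository id, the quantization pattern, the shard file names, and the choice of `llama-cli` flags are assumptions made for illustration, since the page does not name them. Step 1 is assumed to have been done already, for example with `pip install -U huggingface_hub` and a local build of llama.cpp whose binaries are on the `PATH`.

```python
import shlex

# Assumed values for illustration only; the page does not name a repository,
# a quantization level, or a shard count. Adjust them to the files you want.
REPO_ID = "Qwen/Qwen2.5-Coder-32B-Instruct-GGUF"
QUANT_PATTERN = "qwen2.5-coder-32b-instruct-q5_k_m*.gguf"
FIRST_SHARD = "qwen2.5-coder-32b-instruct-q5_k_m-00001-of-00003.gguf"
MERGED_FILE = "qwen2.5-coder-32b-instruct-q5_k_m.gguf"


def download_cmd(repo_id, pattern, local_dir="."):
    """Step 2: download only the GGUF files matching one quantization."""
    return ["huggingface-cli", "download", repo_id,
            "--include", pattern, "--local-dir", local_dir]


def merge_cmd(first_shard, merged_file):
    """Step 3: merge split shards; only needed when the download is split."""
    return ["llama-gguf-split", "--merge", first_shard, merged_file]


def chat_cmd(model_file, max_tokens=512):
    """Step 4: start llama-cli in conversation mode on the model file."""
    return ["llama-cli", "-m", model_file, "-cnv", "-n", str(max_tokens)]


def plan(split=True):
    """Return the commands for steps 2 to 4 in the order they should run."""
    cmds = [download_cmd(REPO_ID, QUANT_PATTERN)]
    if split:
        cmds.append(merge_cmd(FIRST_SHARD, MERGED_FILE))
    cmds.append(chat_cmd(MERGED_FILE))
    return cmds


if __name__ == "__main__":
    # Print the commands rather than running them, so nothing large is
    # downloaded by accident. To execute, pass each list to
    # subprocess.run(cmd, check=True).
    for cmd in plan():
        print(shlex.join(cmd))
```

Printing the commands first makes it easy to check the file names against what is actually published before committing to a multi-gigabyte download. Once `llama-cli` is running in conversation mode, steps 5 and 6 happen interactively: type a coding question, read the answer, and adjust the prompt or the token limit as needed.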
