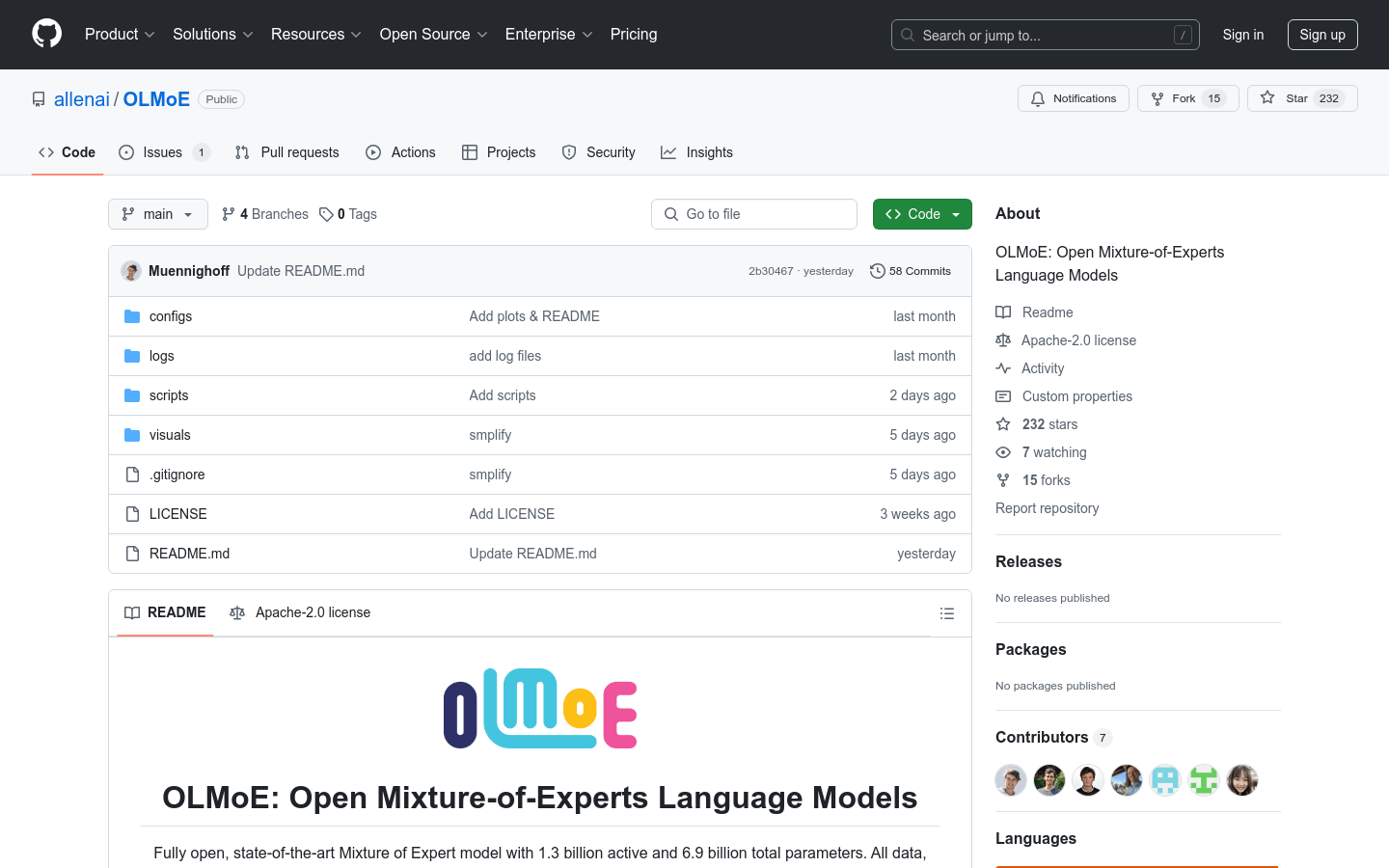

OLMoE

Overview

OLMoE is a fully open, state-of-the-art Mixture-of-Experts (MoE) language model with 1.3 billion active parameters and 6.9 billion total parameters. All data, code, and logs associated with the model have been released. The project repository gives an overview of all resources related to the paper 'OLMoE: Open Mixture-of-Experts Language Models', covering pre-training, fine-tuning, adaptation, and evaluation.

Target Users

OLMoE is suitable for researchers and developers in natural language processing, particularly those who work with large-scale data and complex language tasks. Because the checkpoints, code, data, and logs are all public, it is a practical base for studying and developing Mixture-of-Experts language models.

Use Cases

Building chatbots and other conversational applications.

Generating text for tasks such as article writing or content creation.

Adapting the model for machine translation between languages.

Features

Uses a sparse Mixture-of-Experts architecture, so only a subset of the total parameters is active for each input token.

Provides checkpoints, code, data, and logs for pre-training, fine-tuning, and adaptation phases.

Facilitates model adaptation across multiple languages and domains.

Offers comprehensive training and evaluation tools.

Allows access and use of the model via the Hugging Face Hub.

Includes visualization tools to aid in understanding model structure and performance.

How to Use

First, install the necessary libraries, such as transformers and torch.

Obtain the model and tokenizer from the Hugging Face Hub.

Prepare the input data and convert it into a format accepted by the model.

Call the model's generate method to produce output.

Use the tokenizer to decode the generated output into readable text.

Adjust generation parameters as needed (for example, the maximum number of new tokens or the sampling temperature) to tune output quality and speed.

Analyze model performance and structure using visualization tools.
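The loading, generation, and decoding steps above can be sketched in Python as follows. This is a minimal sketch, not the project's official example: the checkpoint ID `allenai/OLMoE-1B-7B-0924` and the helper names `load_olmoe` and `generate_text` are assumptions introduced for illustration, and the code presumes a recent `transformers` release that includes OLMoE support, plus `torch`.

```python
def load_olmoe(model_id="allenai/OLMoE-1B-7B-0924"):
    """Fetch the tokenizer and model from the Hugging Face Hub.

    The default model_id is an assumption; substitute the checkpoint you need.
    """
    # Imported lazily so generate_text() can be reused without these installed.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    # bfloat16 roughly halves memory use compared with float32.
    model = AutoModelForCausalLM.from_pretrained(model_id, torch_dtype=torch.bfloat16)
    device = "cuda" if torch.cuda.is_available() else "cpu"
    return model.to(device), tokenizer


def generate_text(model, tokenizer, prompt, max_new_tokens=64, **generate_kwargs):
    """Tokenize the prompt, call model.generate, and decode the result."""
    # Convert the prompt into tensors the model accepts.
    inputs = tokenizer(prompt, return_tensors="pt")
    # Move every input tensor to the device the model lives on.
    inputs = {name: tensor.to(model.device) for name, tensor in inputs.items()}
    # Extra keyword arguments (e.g. do_sample, temperature) go to generate().
    output_ids = model.generate(
        **inputs, max_new_tokens=max_new_tokens, **generate_kwargs
    )
    # Decode the first (and only) sequence back into readable text.
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

A typical call would be `model, tokenizer = load_olmoe()` followed by `generate_text(model, tokenizer, "Bitcoin is", max_new_tokens=32, do_sample=True, temperature=0.7)`. The first call downloads several gigabytes of weights, so a GPU or a machine with ample memory is advisable.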
