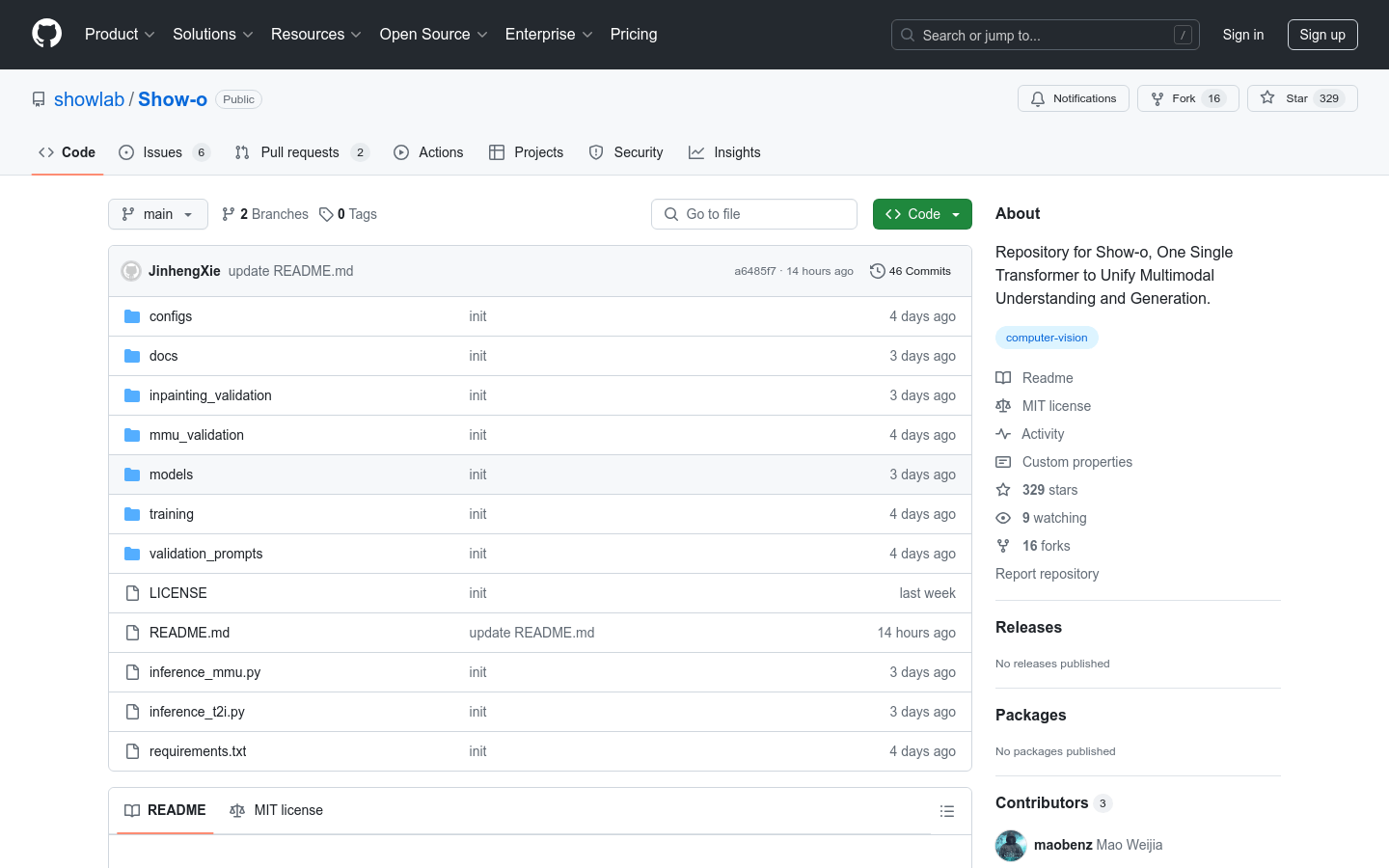

Show-o

Overview

Show-o is a unified transformer model for multimodal understanding and generation. A single model handles image captioning, visual question answering, text-to-image generation, text-guided inpainting and expansion, and mixed-modal generation. It was developed jointly by Show Lab at the National University of Singapore and ByteDance.

Target Users

Show-o is aimed primarily at AI researchers and developers, especially those working in computer vision and natural language processing, who need to analyze and generate multimodal data with one model rather than a separate model per task.

Use Cases

Researchers use the Show-o model for image captioning tasks, automatically generating descriptions for a large number of images.

Developers utilize Show-o to enhance the accuracy of intelligent customer service systems through visual question answering.

Artists leverage Show-o's text-to-image generation feature to create unique works of art.

Features

Image Captioning: Automatically generate descriptive text for images.

Visual Question Answering: Provide answers to questions based on image content.

Text-to-Image Generation: Generate corresponding images based on textual descriptions.

Text-Guided Inpainting: Fill in masked or damaged regions of an image according to a text prompt.

Text-Guided Expansion: Extend an image beyond its original borders according to a text prompt.

Mixed-Modal Generation: Combine text and images to create new multimodal content.

How to Use

1. Install the necessary environment and dependencies.

2. Download and configure the pre-trained model weights.

3. Log in to your Weights & Biases (wandb) account, where the inference demonstration results are logged for viewing.

4. Run the inference demonstration for multimodal understanding.

5. Run the inference demonstration for text-to-image generation.

6. Run the inference demonstration for text-guided inpainting and expansion.

7. Adjust the inference settings as needed to trade off output quality against speed.
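
Steps 3 to 7 can be sketched as a small Python helper that checks for a wandb API key and assembles one command line per demonstration. This is a minimal sketch, not the project's actual interface: the script names in `DEMO_SCRIPTS` and the `key=value` override style are hypothetical placeholders, so take the real entry points and options from the project's own README. `WANDB_API_KEY` is the environment variable wandb reads for a key. A key saved by `wandb login` lives elsewhere, so a missing variable does not necessarily mean you are logged out.

```python
import os
import shlex
import sys
from typing import Dict, List, Mapping, Optional

# ASSUMPTION: placeholder script names, one per demonstration in steps 4-6.
# Replace them with the entry points documented by the project.
DEMO_SCRIPTS: Dict[str, str] = {
    "understanding": "demo_understanding.py",
    "text_to_image": "demo_text_to_image.py",
    "inpainting": "demo_inpainting.py",
}


def wandb_key_present(env: Optional[Mapping[str, str]] = None) -> bool:
    """Step 3: report whether a wandb API key is set in the environment."""
    env = os.environ if env is None else env
    return bool(env.get("WANDB_API_KEY"))


def build_command(demo: str,
                  overrides: Optional[Mapping[str, object]] = None) -> List[str]:
    """Steps 4-7: build the argument list for one demonstration run.

    `overrides` holds hypothetical key=value settings (step 7); they are
    appended in sorted order so the resulting command is reproducible.
    """
    if demo not in DEMO_SCRIPTS:
        raise ValueError(f"unknown demo: {demo!r}")
    command = [sys.executable, DEMO_SCRIPTS[demo]]
    for key, value in sorted((overrides or {}).items()):
        command.append(f"{key}={value}")
    return command


if __name__ == "__main__":
    if not wandb_key_present():
        print("WANDB_API_KEY is not set; make sure you have run `wandb login` (step 3).")
    for name in DEMO_SCRIPTS:
        cmd = build_command(name, {"batch_size": 1})
        # Only prints the command; pass `cmd` to subprocess.run(cmd, check=True)
        # to actually launch it once the script names are real.
        print(shlex.join(cmd))
```

Building the commands as lists rather than shell strings avoids quoting problems when an override value, such as a prompt, contains spaces.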
