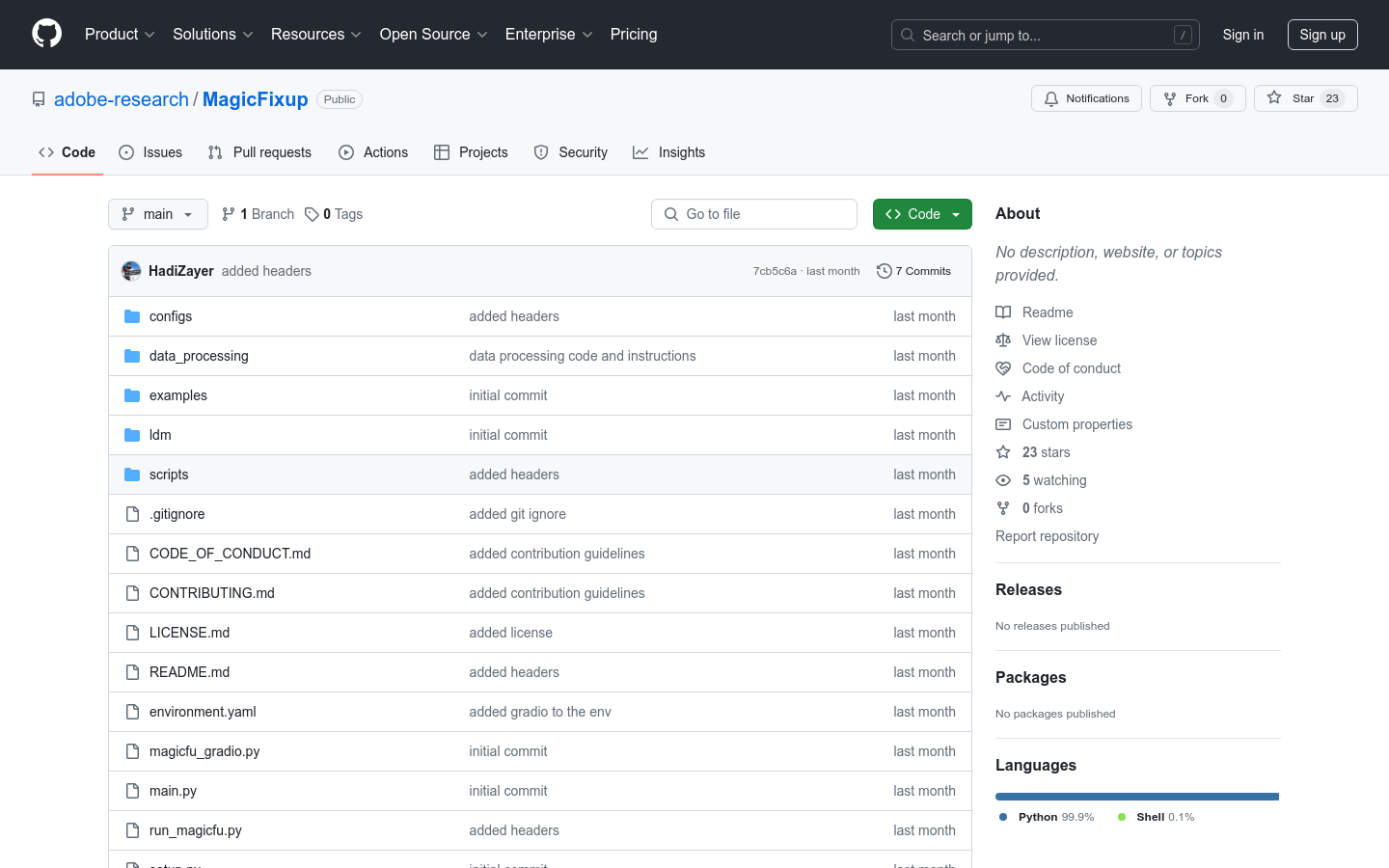

MagicFixup

Overview

MagicFixup is an open-source image editing model developed by Adobe Research that learns photo editing by observing dynamic video. It uses deep learning to automatically identify and correct defects in images, which speeds up editing and reduces manual effort. The model is built on Stable Diffusion 1.4 and is suitable for both professional image editors and enthusiasts.

Target Users

MagicFixup is designed for professionals and enthusiasts who require efficient image editing. It automates the editing process, significantly reducing the time and effort involved in manual editing, allowing users to focus more on creativity and fine-tuning details.

Use Cases

Professional photographers use MagicFixup to quickly fix minor flaws introduced during shooting.

Designers leverage the model for rapid image adjustments in their design projects.

Image editing enthusiasts learn and practice advanced image editing techniques through MagicFixup.

Features

Automated Image Repair: Automatically identifies and fixes defects in images.

Dynamic Video Learning: Learns image editing techniques by observing dynamic video.

Deep Learning Technology: Built on the Stable Diffusion 1.4 model.

User-Friendly Interface: Provides an interactive user interface through a Gradio demo.

Custom Model Training: Supports users in training models using their own video datasets.

Environment Configuration File: Provides an environment.yaml file to streamline the installation process.

Memory Optimization: Uses DeepSpeed to reduce memory requirements.

How to Use

1. Download and install the required environment dependencies by running the provided script to create a conda environment.

2. Use the provided Google Drive link to download the pre-trained MagicFixup model.

3. Prepare the original and edited images, ensuring the alpha channel in the edited images is correctly set.

4. Run the inference script `run_magicfu.py`, passing the paths of the reference (original) image and the edited image.

5. Launch the Gradio demo to test inputs and view editing results through the user interface.

6. To train a custom model, first process the video dataset, then use `main.py` to train the model.

7. Modify the training and validation data paths in the configuration file as needed to point to the location of the processed data.
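
Steps 3 and 4 can be sketched in Python as follows. This is a minimal sketch, not the project's own tooling: `png_has_alpha` and `build_inference_command` are hypothetical helper names, and the `--checkpoint`, `--reference`, and `--edit` flag names are assumptions that should be checked against the repository's README before use. The alpha check reads only the PNG header, so it recognizes grayscale-plus-alpha and RGBA files but not palette images that store transparency in a `tRNS` chunk.

```python
import sys

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
# PNG color types that carry an alpha channel: 4 = grayscale+alpha, 6 = RGBA.
ALPHA_COLOR_TYPES = {4, 6}


def png_has_alpha(path):
    """Return True if the PNG header declares an alpha channel."""
    with open(path, "rb") as f:
        # 8-byte signature, 4-byte chunk length, 4-byte chunk type,
        # then the first 10 bytes of IHDR data.
        header = f.read(26)
    if len(header) < 26 or header[:8] != PNG_SIGNATURE or header[12:16] != b"IHDR":
        raise ValueError(f"{path} is not a valid PNG file")
    # IHDR data layout: width (4 bytes), height (4), bit depth (1), color type (1).
    color_type = header[25]
    return color_type in ALPHA_COLOR_TYPES


def build_inference_command(checkpoint, reference, edit):
    """Build the argument list for run_magicfu.py.

    The flag names below are assumptions, not confirmed by this document.
    """
    if not png_has_alpha(edit):
        raise ValueError("edited image has no alpha channel")
    return [
        sys.executable, "run_magicfu.py",
        "--checkpoint", str(checkpoint),
        "--reference", str(reference),
        "--edit", str(edit),
    ]
```

To run inference, pass the returned list to `subprocess.run(cmd, check=True)` from the repository root. For example, `cmd = build_inference_command("magic_fixup.ckpt", "original.png", "edited.png")`, where all three paths are placeholders.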

Featured AI Tools

Remini

Remini is an online, real-time photo enhancement app that uses world-leading AI technology to transform low-resolution, blurry, pixelated, outdated, and damaged photos into high-quality, crisp, sharp images. Remini also offers more AI-powered image processing features, such as portrait enhancement, painting effects, and blink effects.

AI image enhancement

ARC Image Enhancer

ARC Image Enhancer is an image processing tool provided by Tencent AI. It includes portrait restoration, portrait cutout, and anime enhancement features. It can effectively improve the quality and aesthetics of images and can be used in scenarios such as repairing old photos or removing backgrounds from photos.

AI image enhancement
