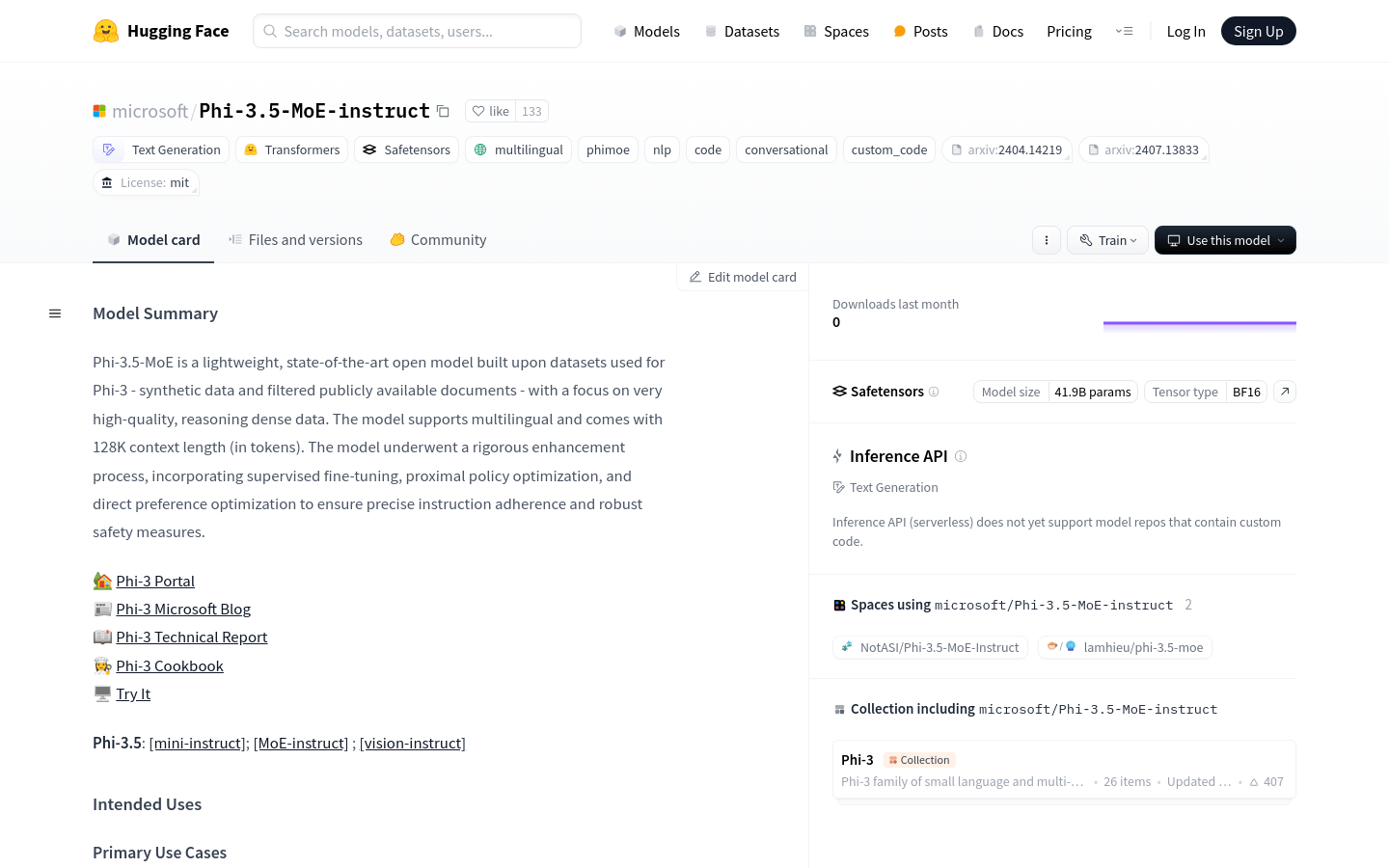

Phi 3.5 MoE Instruct

Overview

Phi-3.5-MoE-instruct is a lightweight, multilingual open model developed by Microsoft. It is trained on high-quality, reasoning-dense data and supports a context length of up to 128K tokens. The model was further refined with supervised fine-tuning, proximal policy optimization, and direct preference optimization to improve instruction following and safety. It is intended to accelerate research on language and multimodal models and to serve as a building block for generative AI features.

Target Users

The target audience includes researchers and developers who need text generation, reasoning, and analysis across multiple languages. The model also suits businesses and individuals who want to build high-performance AI applications under resource constraints.

Use Cases

Researchers use Phi-3.5-MoE-instruct for cross-language text generation experiments.

Developers leverage this model to create intelligent dialogue systems in constrained computing environments.

Educational institutions adopt this technology for programming and mathematics teaching assistance.

Features

Supports multilingual text generation for commercial and research purposes.

Designed for memory- or compute-constrained environments and latency-sensitive scenarios.

Possesses strong reasoning capabilities, particularly in code, mathematics, and logic.

Supports context lengths of up to 128K tokens, suitable for long-text tasks.

Has undergone safety assessments and red-team testing to evaluate and improve model safety.

Improves instruction following through supervised fine-tuning, proximal policy optimization, and direct preference optimization.

Uses Flash-Attention, which requires supported GPU hardware.
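Because Flash-Attention depends on the GPU, it can help to choose the attention backend at load time. The following is a minimal sketch, not taken from the model's documentation: the helper names are hypothetical, and the threshold assumes that FlashAttention-2 kernels target NVIDIA GPUs with compute capability 8.0 (Ampere) or newer. The returned string is meant for the `attn_implementation` argument of `from_pretrained` in Transformers.

```python
def pick_attn_implementation(compute_capability):
    """Choose an attention backend from a (major, minor) CUDA compute capability.

    Pass None when no CUDA GPU is available.
    """
    if compute_capability is None:
        return "eager"
    major, _minor = compute_capability
    # Assumption: FlashAttention-2 needs Ampere (8.x) or newer hardware.
    return "flash_attention_2" if major >= 8 else "eager"


def detect_compute_capability():
    """Return the compute capability of GPU 0, or None without CUDA."""
    import torch  # imported lazily so the helper above works without PyTorch

    if not torch.cuda.is_available():
        return None
    return torch.cuda.get_device_capability(0)
```

For example, `pick_attn_implementation(detect_compute_capability())` could be passed as `attn_implementation=...` when loading the model.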

How to Use

1. Ensure that you have a supported Python environment and necessary dependencies installed, such as PyTorch and Transformers.

2. Use pip to install or update the transformers library.

3. Download the Phi-3.5-MoE-instruct model and tokenizer from the Hugging Face model hub.

4. Configure model loading parameters, including device mapping and remote code trust settings.

5. Prepare input data, which can be multilingual text or prompts in specific formats.

6. Utilize the model for inference or text generation, adjusting generation parameters as needed.

7. Analyze and evaluate the generated text or reasoning results to meet specific application requirements.
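The steps above can be sketched in Python roughly as follows. This is an illustrative outline under stated assumptions, not an official recipe: it assumes `transformers`, `torch`, and `accelerate` are installed (for example via `pip install --upgrade transformers`), that the model is published on the Hugging Face hub as `microsoft/Phi-3.5-MoE-instruct`, and that enough GPU memory is available. The helper names `build_messages`, `generation_args`, and `generate` are hypothetical.

```python
MODEL_ID = "microsoft/Phi-3.5-MoE-instruct"  # assumed Hugging Face hub identifier


def build_messages(user_prompt, system_prompt="You are a helpful AI assistant."):
    """Step 5: wrap the input in the role/content chat format."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


def generation_args(max_new_tokens=256, temperature=0.0):
    """Step 6: generation parameters; temperature 0 selects greedy decoding."""
    do_sample = temperature > 0
    args = {
        "max_new_tokens": max_new_tokens,
        "return_full_text": False,  # return only the newly generated text
        "do_sample": do_sample,
    }
    if do_sample:
        args["temperature"] = temperature
    return args


def generate(user_prompt):
    """Steps 3, 4, and 6: download, configure, and run the model."""
    # Heavy imports stay inside the function so the helpers above
    # can be used without a GPU setup.
    from transformers import AutoModelForCausalLM, AutoTokenizer, pipeline

    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID,
        device_map="auto",       # device mapping; relies on accelerate
        torch_dtype="auto",
        trust_remote_code=True,  # remote code trust setting
    )
    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    pipe = pipeline("text-generation", model=model, tokenizer=tokenizer)
    output = pipe(build_messages(user_prompt), **generation_args())
    return output[0]["generated_text"]


# Example call (downloads the full model weights, so it is left commented out):
# print(generate("Translate 'good morning' into French and Spanish."))
```

Keeping prompt construction and generation parameters in small helpers makes step 7 easier: the same prompts can be rerun with different settings and the outputs compared.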
