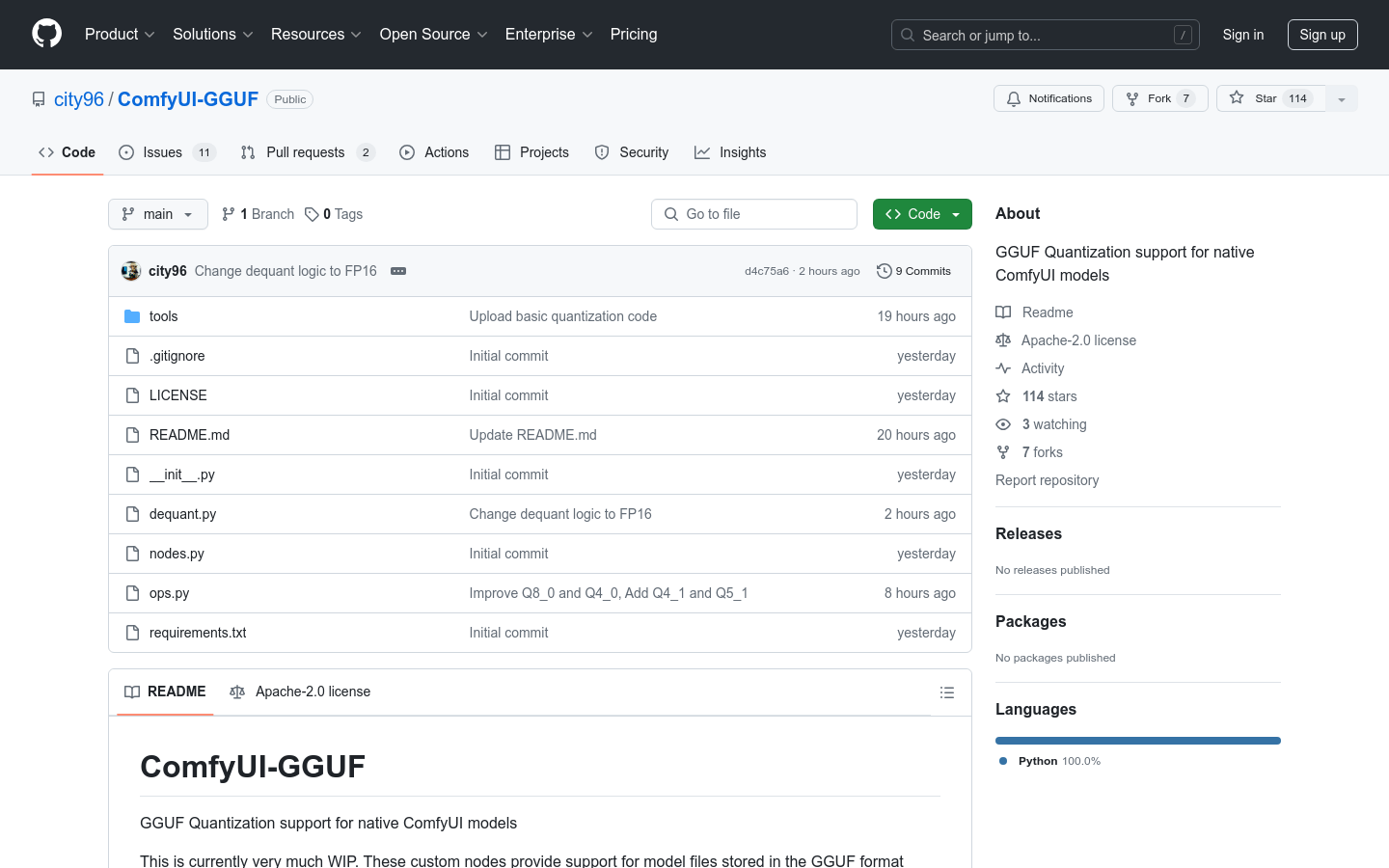

ComfyUI-GGUF

Overview

ComfyUI-GGUF is a set of custom nodes that adds GGUF quantization support for native ComfyUI models. It lets ComfyUI load model files stored in the GGUF format popularized by llama.cpp. Quantization has not been practical for regular UNET models (which are built largely from conv2d layers), but transformer/DiT models such as Flux appear to be much less affected by it. This allows them to run on low-end GPUs at much lower bits per weight, using variable-bitrate quants.
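To make "bits per weight" concrete, here is a minimal pure-Python sketch of Q8_0-style block quantization, one of the block formats from llama.cpp's ggml library. Each block of 32 weights is stored as one shared scale plus 32 signed 8-bit integers. This is a simplified illustration, not ComfyUI-GGUF's own code: the scale is kept as a Python float here, while the real format stores it as a 16-bit float, which is what the `scale_bytes=2` default in the hypothetical `bits_per_weight` helper assumes. All function names are illustrative.

```python
import random

BLOCK_SIZE = 32  # Q8_0 groups weights into blocks of 32


def quantize_q8_0_block(weights):
    """Quantize one block of 32 floats to (scale, list of ints in [-127, 127])."""
    if len(weights) != BLOCK_SIZE:
        raise ValueError(f"expected {BLOCK_SIZE} weights, got {len(weights)}")
    amax = max(abs(w) for w in weights)
    scale = amax / 127.0  # the largest magnitude maps to +/-127
    inv_scale = 1.0 / scale if scale else 0.0
    # Python's round() rounds halves to even (ggml rounds halves away from zero);
    # the difference only affects exact ties and is negligible for this sketch.
    quants = [round(w * inv_scale) for w in weights]
    return scale, quants


def dequantize_q8_0_block(scale, quants):
    """Reconstruct approximate float weights from a quantized block."""
    return [q * scale for q in quants]


def bits_per_weight(scale_bytes=2, quant_bits=8, block_size=BLOCK_SIZE):
    """Storage cost per weight: the shared scale is amortized over the block."""
    return (scale_bytes * 8 + quant_bits * block_size) / block_size


if __name__ == "__main__":
    random.seed(0)
    block = [random.gauss(0.0, 0.02) for _ in range(BLOCK_SIZE)]
    scale, quants = quantize_q8_0_block(block)
    restored = dequantize_q8_0_block(scale, quants)
    max_err = max(abs(a - b) for a, b in zip(block, restored))
    print(f"{bits_per_weight()} bits per weight, max abs error {max_err:.2e}")
```

The shared 16-bit scale is why this format costs 8.5 rather than 8 bits per weight, and the rounding error for each weight is at most half a scale step.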

Target Users

The primary audience is developers and researchers who use ComfyUI to run and deploy models and need them to fit in resource-constrained environments, such as GPUs with limited VRAM. ComfyUI-GGUF helps by letting them load quantized models that need less memory than their full-precision versions.

Use Cases

Developers use ComfyUI-GGUF to run the Flux model on low-end GPUs with less VRAM.

Researchers use GGUF quantization to make models practical on resource-constrained edge devices.

Educational institutions use ComfyUI-GGUF as a case study in teaching deep learning, demonstrating model optimization techniques.

Features

Supports models quantized and stored in the GGUF format.

Applicable to transformer/DiT models such as Flux.

Lets models run on low-end GPUs by reducing their memory footprint.

Provides custom nodes for loading GGUF-quantized models.

Does not support LoRA / ControlNet, because the model weights are quantized.

Includes installation and usage guides.
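As a rough illustration of why lower bits per weight matter on low-end GPUs, weight storage is approximately parameters × bits per weight ÷ 8 bytes. The sketch below assumes a model of about 12 billion parameters (the size published for FLUX.1 [dev]) and the per-block storage of llama.cpp's legacy quant types. It counts weights only, ignoring text encoders, the VAE, activations, and any tensors a converter keeps at higher precision, so real file sizes and VRAM use will differ. `QUANT_BPW` and `weight_gigabytes` are hypothetical names.

```python
# Bits per weight for a few ggml block formats (32 weights per block):
#   Q8_0: 2-byte scale + 32 x 8-bit values                   = 34 bytes -> 8.5 bpw
#   Q5_0: 2-byte scale + 4 bytes of high bits + 16 bytes of nibbles = 22 bytes -> 5.5 bpw
#   Q4_0: 2-byte scale + 16 bytes of 4-bit values             = 18 bytes -> 4.5 bpw
QUANT_BPW = {
    "F16": 16.0,
    "Q8_0": 8.5,
    "Q5_0": 5.5,
    "Q4_0": 4.5,
}


def weight_gigabytes(n_params, bpw):
    """Approximate weight storage in GB (10**9 bytes) for a given bits per weight."""
    return n_params * bpw / 8 / 1e9


if __name__ == "__main__":
    n_params = 12_000_000_000  # assumption: roughly FLUX.1 [dev] scale
    for name, bpw in QUANT_BPW.items():
        print(f"{name:>5}: {weight_gigabytes(n_params, bpw):6.2f} GB")
```

Under these assumptions the weights take about 24.00 GB at F16, 12.75 GB at Q8_0, 8.25 GB at Q5_0, and 6.75 GB at Q4_0.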

How to Use

1. Make sure your ComfyUI version is recent enough to support custom operations.

2. Clone the ComfyUI-GGUF repository with Git into the ComfyUI/custom_nodes folder.

3. Install the dependency needed for inference (pip install --upgrade gguf).

4. Place the .gguf model files in the ComfyUI/models/unet folder.

5. Use the GGUF Unet loader found under the bootleg category.

6. Adjust the remaining workflow settings as needed for inference.
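The folder and package steps above can be checked with a small script. This is a minimal sketch, assuming the default ComfyUI/models/unet layout from step 4 and the gguf package from step 3, whose `GGUFReader` class is used to count tensors per quantization type. The script and its function names are hypothetical helpers, not part of ComfyUI-GGUF.

```python
from collections import Counter
from pathlib import Path


def find_gguf_models(comfy_root):
    """Return the .gguf files in <comfy_root>/models/unet (step 4), sorted by name."""
    unet_dir = Path(comfy_root) / "models" / "unet"  # assumption: default layout
    if not unet_dir.is_dir():
        return []
    return sorted(p for p in unet_dir.iterdir() if p.is_file() and p.suffix == ".gguf")


def summarize_tensor_types(gguf_path):
    """Count tensors per quantization type using the gguf package (step 3)."""
    from gguf import GGUFReader  # lazy import so the folder check works without it
    reader = GGUFReader(str(gguf_path))
    return Counter(t.tensor_type.name for t in reader.tensors)


def main(comfy_root="ComfyUI"):
    models = find_gguf_models(comfy_root)
    if not models:
        print(f"No .gguf files found under {Path(comfy_root) / 'models' / 'unet'}")
        return 1
    for model in models:
        print(model.name)
        try:
            counts = summarize_tensor_types(model)
        except ImportError:
            print("  gguf package missing: run pip install --upgrade gguf")
            continue
        for qtype, n in counts.most_common():
            print(f"  {qtype}: {n} tensors")
    return 0


if __name__ == "__main__":
    main()
```

Pass the path to your ComfyUI folder to main() if it is not ./ComfyUI. A listing dominated by types such as Q4_0 or Q8_0 confirms the file holds quantized weights.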
