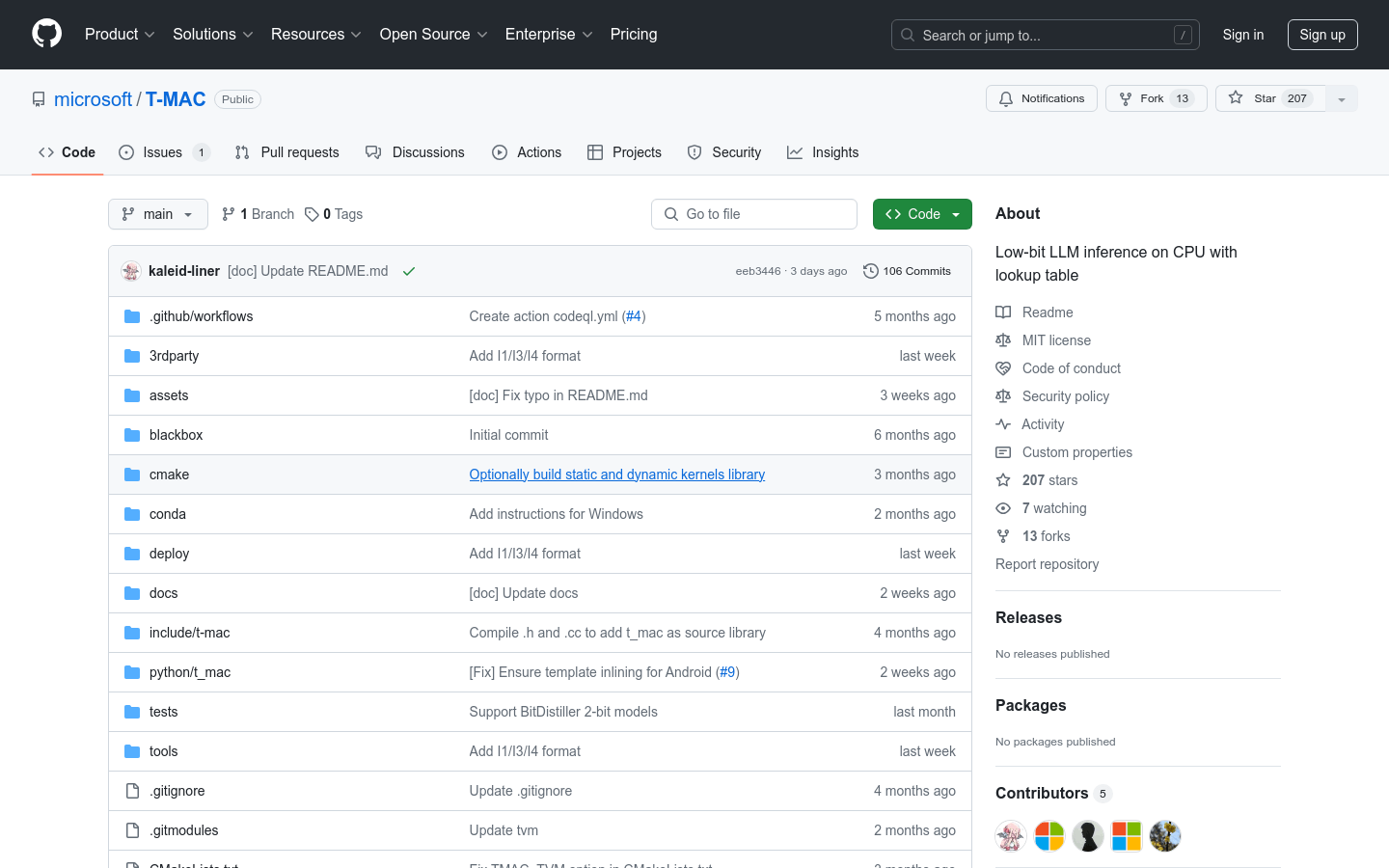

T-MAC

Overview:

T-MAC is a kernel library that uses lookup tables to perform mixed-precision matrix multiplication directly, without first dequantizing the low-bit weights. Its goal is to accelerate low-bit large language model inference on CPUs. It supports a range of low-bit models, including W4A16 for GPTQ/gguf, W2A16 for BitDistiller/EfficientQAT, and W1(.58)A8 for BitNet, on ARM and Intel CPUs running macOS, Linux, and Windows. On the Surface Laptop 7, T-MAC reaches a token generation throughput of 20 tokens per second on a single core and 48 tokens per second on four cores for a 3B BitNet model. This is a 4-5x speedup over llama.cpp, the state-of-the-art low-bit CPU framework.
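To illustrate the lookup-table idea, here is a minimal pure-Python sketch of a bit-serial lookup-table dot product. It assumes unsigned integer weights with no scales or zero points, and a group size of 4 activations per table. The names `build_luts`, `lut_dot`, and `G` are hypothetical and do not come from T-MAC's API. The sketch splits each n-bit weight into n one-bit planes. For every group of g activations it precomputes the 2^g possible partial sums once. Each plane is then reduced to one table lookup per group. A real kernel would vectorize the lookups and handle quantization scales, which this sketch does not.

```python
from typing import List, Sequence

G = 4  # activations per lookup table (illustrative choice, not T-MAC's tuned value)


def build_luts(activations: Sequence[float], g: int = G) -> List[List[float]]:
    """For each group of g activations, precompute the sum selected by every g-bit mask."""
    luts = []
    for start in range(0, len(activations), g):
        group = activations[start:start + g]
        # Entry `mask` holds the sum of group[j] for every bit j set in mask.
        table = [
            sum(a for j, a in enumerate(group) if (mask >> j) & 1)
            for mask in range(1 << len(group))
        ]
        luts.append(table)
    return luts


def lut_dot(weights: Sequence[int], activations: Sequence[float], bits: int, g: int = G) -> float:
    """Dot product of unsigned `bits`-bit integer weights with float activations via table lookups."""
    if len(weights) != len(activations):
        raise ValueError("weights and activations must have the same length")
    luts = build_luts(activations, g)  # built once, reusable for every weight row
    total = 0.0
    for b in range(bits):  # one pass per weight bit plane
        plane = 0.0
        for k, table in enumerate(luts):
            mask = 0
            for j, w in enumerate(weights[k * g:(k + 1) * g]):
                mask |= ((w >> b) & 1) << j  # pack bit b of each weight into a table index
            plane += table[mask]  # one lookup replaces up to g multiply-adds
        total += plane * (1 << b)  # scale the bit plane by 2**b
    return total


if __name__ == "__main__":
    acts = [0.5, -1.0, 2.0, 0.25, 1.5, -0.75]
    w2 = [3, 0, 1, 2, 1, 3]  # 2-bit unsigned weights
    print(lut_dot(w2, acts, bits=2))             # 3.25
    print(sum(w * a for w, a in zip(w2, acts)))  # 3.25, the direct multiply-add result
```

The tables depend only on the activations. In a matrix-vector product, the same tables are therefore shared by every row of the weight matrix, and that sharing is where the savings come from.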

Target Users:

T-MAC is primarily designed for developers and enterprises that need large language model inference on CPUs. It is aimed especially at those who want real-time or near-real-time inference on edge devices. It suits scenarios where energy consumption and compute resources must be kept low, such as mobile devices, embedded systems, and other resource-constrained environments.

Use Cases

Achieved a significant speedup for 3B BitNet model inference on the Surface Laptop 7 using T-MAC.

Achieved performance comparable to the NPU on the Snapdragon X Elite chip using T-MAC, while also reducing model size.

Demonstrated energy-efficiency advantages over the CUDA GPU on the Jetson AGX Orin for specific tasks using T-MAC.
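For a rough sense of how much low-bit weights shrink a model, the sketch below computes weight storage for a 3B-parameter model at several bit widths. Several assumptions apply. "3B" is taken as exactly 3 billion parameters. Only the weights are counted, with no scales, zero points, or higher-precision layers. Ternary BitNet weights are counted at their information content of log2(3) ≈ 1.58 bits, although practical kernels often pack them into 2 bits. The function name `weight_gb` is hypothetical.

```python
import math


def weight_gb(n_params: float, bits_per_weight: float) -> float:
    """Storage for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    N = 3e9  # assumption: "3B" taken as exactly 3 billion parameters
    for label, bits in [("FP16", 16), ("4-bit", 4), ("2-bit", 2), ("ternary", math.log2(3))]:
        print(f"{label:>8}: {weight_gb(N, bits):.2f} GB")
    # FP16 6.00 GB, 4-bit 1.50 GB, 2-bit 0.75 GB, ternary 0.59 GB
```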

Features

Inference support for Llama models with 1/2/3/4-bit quantization in GPTQ format.

On the latest Snapdragon X Elite chip, T-MAC's token generation speed even surpasses that of the NPU.

Native deployment support on Windows ARM, with a significant 5x speedup on the Surface Laptop 7.

By using lookup tables, T-MAC reaches the same throughput with fewer CPU cores, which lowers power consumption.

On the Snapdragon X Elite chip, T-MAC outperforms the NPU running Qualcomm's Snapdragon Neural Processing Engine (NPE).

On Jetson AGX Orin, T-MAC's 2-bit mpGEMM performance is comparable to that of CUDA GPUs.
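One rough way to see why lookup tables can reduce compute is to count operations for one matrix-vector product. The sketch below is an idealized count. It ignores memory traffic, SIMD width, and scaling. It assumes the bit-serial scheme with groups of g = 4 activations, and the 4096 x 4096 layer shape is hypothetical. The helper names are also hypothetical. The tables are built once per activation vector, while the lookup count grows linearly with the weight bit width.

```python
def naive_macs(m: int, n: int) -> int:
    """Multiply-accumulates for an m x n weight matrix times one activation vector."""
    return m * n


def lut_ops(m: int, n: int, bits: int, g: int = 4) -> dict:
    """Idealized operation counts for the bit-serial lookup-table scheme."""
    if n % g:
        raise ValueError("n must be a multiple of the group size g")
    groups = n // g
    return {
        # 2**g entries per activation group, built once and shared by all m rows
        "table_entries": groups * (1 << g),
        # one lookup-and-add per (row, weight bit plane, activation group)
        "lookups": m * bits * groups,
    }


if __name__ == "__main__":
    m = n = 4096
    print(naive_macs(m, n))       # 16777216
    print(lut_ops(m, n, bits=2))  # {'table_entries': 16384, 'lookups': 8388608}
```

Under these assumptions, a 2-bit layer needs about half as many lookup-and-add steps as the multiply-accumulates of the direct approach, and none of them are multiplications.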

How to Use

Set up a Python 3.8 environment, which TVM requires.

Install cmake>=3.22 and the other dependencies required by your operating system.

Install T-MAC with pip inside a virtual environment, then set the environment variables it provides.

Use the provided tool scripts for end-to-end inference, or integrate with llama.cpp for task-specific inference.

Adjust parameters as needed; for example, use -fa to enable fast aggregation for an additional speedup.
